
Understanding Sampling Techniques in Experimental Research: A Comprehensive Guide

- August 6, 2024

Experimental research is a crucial aspect of scientific investigation, allowing researchers to test hypotheses and draw conclusions about various phenomena. However, the success of experimental research depends heavily on the quality of the sampling technique used. Sampling techniques refer to the methods used to select participants or observations for a study. In this comprehensive guide, we will explore the various sampling techniques used in experimental research, their advantages and disadvantages, and how to choose the right sampling technique for your study. By understanding the principles of sampling techniques, you can ensure that your experimental research is valid, reliable, and provides meaningful insights. So, let’s dive in and explore the world of sampling techniques in experimental research!

Importance of Sampling Techniques in Experimental Research

Definition of Sampling Techniques

Sampling techniques refer to the methods used to select a subset of individuals or units from a larger population for the purpose of research. These techniques are crucial in experimental research as they determine the representativeness and generalizability of the findings to the larger population.

Sampling techniques can be broadly classified into two categories: probability sampling and non-probability sampling.

Probability sampling gives every unit in the population a known, nonzero chance of being selected, and the selection itself is made by a random process. Examples of probability sampling techniques include simple random sampling, stratified random sampling, and cluster sampling.

Non-probability sampling selects units by non-random criteria, such as convenience or the researcher's judgment, so the probability that any given unit ends up in the sample is unknown. Examples of non-probability sampling techniques include snowball sampling, quota sampling, and purposive sampling.

The choice of sampling technique depends on the research question, the size and characteristics of the population, and the resources available for the study. The chosen technique should yield a sample that is representative of the population so that the findings can be generalized to it.

The Significance of Proper Sampling in Experimental Research

Proper sampling is a critical aspect of experimental research, as it determines the representativeness and generalizability of the findings. The significance of proper sampling lies in its ability to ensure that the sample accurately reflects the population of interest, thereby minimizing bias and maximizing the validity of the results. In addition, proper sampling techniques help researchers to draw meaningful conclusions and generalize their findings to the larger population.

Types of Sampling Techniques

Random Sampling

Random sampling is a technique used in experimental research to select participants from a population by chance, so that the researcher's preferences cannot influence who is included. In simple random sampling, every member of the population has an equal chance of being selected; in other random designs, every member has a known chance of selection. The randomization can be carried out with random number generators, tables of random numbers, or computer algorithms.

Random sampling is widely used in experimental research because it greatly reduces selection bias, which arises when the way participants are chosen makes the sample systematically different from the population. A random sample is not guaranteed to mirror the population exactly, but any differences are due to chance and can be quantified with standard statistical methods, which is what allows the results to be generalized to the population from which the sample was drawn.

There are several types of random sampling techniques, including simple random sampling, stratified random sampling, and cluster sampling. Simple random sampling selects participants purely by chance from the whole population, so that every possible sample of the chosen size is equally likely. Stratified random sampling divides the population into groups or strata and then selects participants randomly from each stratum. Cluster sampling divides the population into clusters, such as schools or neighborhoods, randomly selects some of those clusters, and then includes all (or a random subset) of the members of the selected clusters.
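The three designs can be sketched in Python with the standard `random` module. This is a minimal illustration, not a survey package: the student records, the `year` and `classroom` fields, and the per-stratum quotas are hypothetical, and the population is assumed to fit in memory as a list.

```python
import random
from collections import defaultdict

def simple_random_sample(population, n, rng):
    """Draw n units without replacement; every subset of size n is equally likely."""
    return rng.sample(population, n)

def stratified_random_sample(population, stratum_of, n_per_stratum, rng):
    """Group units by stratum, then draw a simple random sample within each stratum."""
    strata = defaultdict(list)
    for unit in population:
        strata[stratum_of(unit)].append(unit)
    sample = []
    for name, units in strata.items():
        sample.extend(rng.sample(units, n_per_stratum[name]))
    return sample

def cluster_sample(clusters, n_clusters, rng):
    """Randomly choose whole clusters and keep every unit in the chosen clusters."""
    chosen = rng.sample(list(clusters), n_clusters)
    return [unit for name in chosen for unit in clusters[name]]

# Hypothetical population: 100 students, 4 year groups, 10 classrooms of 10.
rng = random.Random(42)  # seeded so the draws can be reproduced
students = [{"id": i, "year": 1 + i % 4, "classroom": i // 10} for i in range(100)]

srs = simple_random_sample(students, 10, rng)
strat = stratified_random_sample(students, lambda s: s["year"], {1: 3, 2: 3, 3: 2, 4: 2}, rng)

classrooms = defaultdict(list)
for s in students:
    classrooms[s["classroom"]].append(s)
clus = cluster_sample(classrooms, 2, rng)

print(len(srs), len(strat), len(clus))  # 10 10 20
```

Passing a seeded `random.Random` object makes the draws reproducible, which is useful when documenting exactly how a sample was selected.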

In conclusion, random sampling is a widely used technique in experimental research because it produces samples free of systematic selection bias and supports generalization to the sampled population. Several types of random sampling are available, and the choice among them depends on the research design, the structure of the population, and the sample size.

Stratified Sampling

Stratified sampling is a type of sampling technique used in experimental research to ensure that the sample is representative of the population. This technique involves dividing the population into smaller groups or strata based on specific characteristics and then selecting a sample from each stratum.

The process of stratified sampling involves the following steps:

- Identify the population: The first step is to identify the population that you want to study. This population can be defined by demographic characteristics such as age, gender, ethnicity, or geographic location.

- Define the strata: Once the population has been identified, the next step is to define the strata or subgroups within the population. These strata are defined based on specific characteristics that are relevant to the research question.

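- Select the sample: After defining the strata, a random sample is selected from each stratum. The sample size for each stratum is determined by the research question and the desired level of precision: it can be proportional to the stratum's share of the population, or larger for small strata that would otherwise yield too few participants to analyze.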

- Ensure adequate representation: Stratified sampling ensures that each stratum is adequately represented in the sample. This means that the sample should reflect the characteristics of the population in each stratum.
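The sample-size step above often uses proportional allocation, in which each stratum's share of the sample matches its share of the population. A minimal sketch, assuming the stratum sizes are known from the sampling frame (the age bands and counts below are hypothetical); rounding uses the largest-remainder method so that the allocations add up exactly to the total.

```python
import math

def proportional_allocation(stratum_sizes, total_n):
    """Split total_n across strata in proportion to each stratum's population size.

    Each stratum first gets the integer part of its exact share; any units left over
    go to the strata with the largest fractional parts (largest-remainder rounding),
    so the allocations always sum to total_n.
    """
    population = sum(stratum_sizes.values())
    exact = {name: total_n * size / population for name, size in stratum_sizes.items()}
    alloc = {name: math.floor(share) for name, share in exact.items()}
    leftover = total_n - sum(alloc.values())
    by_remainder = sorted(exact, key=lambda name: exact[name] - alloc[name], reverse=True)
    for name in by_remainder[:leftover]:
        alloc[name] += 1
    return alloc

# Hypothetical stratum sizes taken from a sampling frame.
sizes = {"18-34": 4300, "35-54": 3650, "55+": 2050}
alloc = proportional_allocation(sizes, 250)
print(alloc)  # {'18-34': 108, '35-54': 91, '55+': 51}
```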

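Stratified sampling is particularly useful when the population is heterogeneous, and especially when units are similar within strata but differ between them; in that case, stratifying produces more precise estimates than a simple random sample of the same size. It is also useful when the researcher wants the sample's composition to match the population's on the stratifying characteristics.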

However, stratified sampling can be time-consuming and resource-intensive, particularly when the population is large and complex. Additionally, the researcher must have a clear understanding of the population and the relevant characteristics to define the strata effectively.

Overall, stratified sampling is a useful sampling technique in experimental research that ensures a representative sample and helps to minimize bias.

Cluster Sampling

Cluster sampling is a technique that involves dividing a population into smaller groups or clusters and selecting a subset of these clusters for study. This method is particularly useful when it is difficult or expensive to access the entire population. The selection of clusters is random, and each cluster is treated as a single unit.

Here are some key points to consider when using cluster sampling:

- Advantages: Cluster sampling can be more efficient and cost-effective than other sampling methods, especially when studying large populations. It also allows for the study of groups that may be difficult to access individually.

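- Disadvantages: The main disadvantage of cluster sampling is that it is usually less precise than a simple random sample of the same size. Members of the same cluster (for example, pupils in one school) tend to resemble each other, so each additional member of a cluster adds less new information, and if only a few clusters are chosen, the sample may not reflect the whole population.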

- Considerations: When using cluster sampling, it is important to select clusters randomly and to include enough clusters, not just enough individuals, to produce meaningful results. It is also important to consider the size and homogeneity of the clusters and to account for the clustering in the statistical analysis.
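One common way to account for clustering at the planning stage is Kish's design effect, DEFF = 1 + (m - 1) × ICC, where m is the average number of people sampled per cluster and ICC is the intraclass correlation (how similar members of the same cluster are). Dividing the total sample by DEFF gives an approximate effective sample size: the size of a simple random sample with about the same precision. The sketch assumes equal-sized clusters; the cluster count, cluster size, and ICC are hypothetical.

```python
def design_effect(avg_cluster_size, icc):
    """Kish's approximate design effect for equal-sized clusters: 1 + (m - 1) * ICC."""
    return 1 + (avg_cluster_size - 1) * icc

def effective_sample_size(n_total, avg_cluster_size, icc):
    """Size of a simple random sample with roughly the same precision."""
    return n_total / design_effect(avg_cluster_size, icc)

# Hypothetical plan: 40 clusters (e.g., schools) with 25 people sampled in each, ICC = 0.05.
n_total = 40 * 25
deff = design_effect(25, 0.05)
n_eff = effective_sample_size(n_total, 25, 0.05)
print(round(deff, 2), round(n_eff))  # 2.2 455
```

Even a modest ICC of 0.05 means that 1,000 clustered observations carry about as much information as 455 independently sampled ones. Because DEFF grows with cluster size, adding more clusters usually helps more than adding more people per cluster.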

Overall, cluster sampling can be a useful sampling technique in experimental research, but it is important to carefully consider its advantages and disadvantages and to select the appropriate sampling method based on the research question and population being studied.

Convenience Sampling

Convenience sampling is a non-probability sampling technique that involves selecting participants based on their availability and accessibility. This method is often used when the population is difficult to identify or when time and resources are limited. The main advantage of convenience sampling is its speed and ease of implementation. However, the main disadvantage is that the sample may not be representative of the population, which can lead to biased results.

To make the sample as representative as possible, researchers should aim for a diverse sample that includes individuals from different backgrounds and demographics. This can be achieved by recruiting participants from different locations, using multiple recruitment sources, and actively seeking out underrepresented groups. Researchers should also collect a sufficient sample size for precise estimates, bearing in mind that a larger convenience sample is more precise but not less biased.

It is important to note that convenience sampling should only be used when other sampling techniques are not feasible or practical. Researchers should carefully consider the advantages and disadvantages of this method before deciding to use it. In general, convenience sampling is best suited for exploratory research or pilot studies, where the aim is to gather preliminary data to inform future research.

Snowball Sampling

Snowball sampling is a non-probability sampling technique that is often used in studies where the population is difficult to identify or recruit. It is particularly useful for studying hard-to-reach populations or groups that may be reluctant to identify themselves to researchers. The method involves recruiting a small number of initial participants and then asking them to recruit additional participants who fit the study criteria. This process continues until a sufficient sample size is reached.

Snowball sampling has several advantages over other sampling techniques. It is often more efficient and cost-effective than other methods, as it relies on the existing social networks of the initial participants to recruit additional participants. It can also reach people who would be missed by conventional recruitment, because they are identified through personal contacts rather than through a sampling frame or public advertisement.

However, snowball sampling also has limitations. It is not a random sampling technique, so the sample is likely to be biased: people with large social networks tend to be over-represented, and anyone outside the initial participants' networks cannot be reached at all. Additionally, recruitment through referrals can be slow and may require considerable effort from the researcher. It is important to carefully consider the potential benefits and limitations of snowball sampling before deciding to use it in a study.
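Wave-by-wave snowball recruitment can be sketched as a traversal of a referral network. This is a hypothetical illustration: the network dictionary, the `max_referrals` cap, and the stopping rule are assumptions made for the example. The people `X` and `Y` have no connection to the seed, so they can never be recruited, which illustrates one reason snowball samples can be biased.

```python
import random

def snowball_sample(network, seeds, target_size, max_referrals, rng):
    """Recruit in waves from a referral network.

    Each recruit refers up to max_referrals of their contacts who have not yet been
    recruited. Recruitment stops at target_size or when no new referrals are made.
    """
    recruited = list(seeds)
    seen = set(seeds)
    wave = list(seeds)
    while wave and len(recruited) < target_size:
        next_wave = []
        for person in wave:
            contacts = [c for c in network.get(person, []) if c not in seen]
            for contact in rng.sample(contacts, min(max_referrals, len(contacts))):
                if len(recruited) >= target_size:
                    break
                recruited.append(contact)
                seen.add(contact)
                next_wave.append(contact)
        wave = next_wave
    return recruited

# Hypothetical referral network: who each person could refer.
network = {
    "A": ["B", "C", "D"], "B": ["A", "E"], "C": ["F", "G"], "D": [],
    "E": ["H"], "F": [], "G": ["H"], "H": [],
    "X": ["Y"], "Y": ["X"],  # a group with no ties to the seed
}
sample = snowball_sample(network, seeds=["A"], target_size=6, max_referrals=2, rng=random.Random(1))
print(sample)
```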

Sampling Techniques in Clinical Research

Clinical research involves the study of drugs, devices, and biologics in human subjects to determine their safety and efficacy. In clinical research, sampling techniques play a crucial role in selecting the right participants for the study. Here are some commonly used sampling techniques in clinical research:

Randomization

Randomization is a technique used to assign participants to different treatment groups; strictly speaking, it is an allocation method rather than a sampling method. Eligible participants who enroll in the study are assigned to treatment groups by chance, using a predetermined algorithm. Randomization helps to reduce bias and makes the treatment groups comparable.

Stratified Randomization

Stratified randomization is a variation of randomization in which participants are divided into strata based on certain characteristics, such as age, gender, or disease severity. Participants within each stratum are then randomly assigned to treatment groups. Stratified randomization helps to ensure that each treatment group has a similar distribution of the stratifying factors.
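Stratified randomization is often implemented with permuted blocks: within each stratum, treatments are assigned in small shuffled blocks so that the groups stay balanced as participants enroll. The text does not specify a scheme, so the sketch below is one common approach under stated assumptions: two arms, a fixed block size of 4, and hypothetical strata defined by age group and disease severity.

```python
import random
from collections import defaultdict

class StratifiedBlockRandomizer:
    """Permuted-block randomization run separately within each stratum.

    For every stratum, assignments are drawn from shuffled blocks that contain each
    arm equally often, so the arms are balanced within a stratum after every
    completed block.
    """

    def __init__(self, arms=("treatment", "control"), block_size=4, seed=None):
        if block_size % len(arms) != 0:
            raise ValueError("block_size must be a multiple of the number of arms")
        self.arms = list(arms)
        self.block_size = block_size
        self.rng = random.Random(seed)
        self.pending = defaultdict(list)  # unused assignments left in each stratum's current block

    def assign(self, stratum):
        """Return the arm for the next participant enrolled in this stratum."""
        if not self.pending[stratum]:
            block = self.arms * (self.block_size // len(self.arms))
            self.rng.shuffle(block)
            self.pending[stratum] = block
        return self.pending[stratum].pop()

# Hypothetical enrollment: 24 participants, stratified by age group and disease severity.
randomizer = StratifiedBlockRandomizer(seed=7)
participants = [
    (f"p{i:02d}", ("65+" if i % 3 == 0 else "<65", "severe" if i % 2 else "mild"))
    for i in range(24)
]
allocation = {pid: randomizer.assign(stratum) for pid, stratum in participants}
```

A fixed block size makes the last assignment in each block predictable to anyone who knows the scheme, which is why trials often vary the block size at random.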

Convenience Sampling

Convenience sampling is a non-probability sampling technique in which participants are selected based on their availability and accessibility. Participants are typically recruited from hospitals, clinics, or other healthcare facilities. Convenience sampling is often used when it is difficult or expensive to recruit a representative sample from the general population.

Purposive Sampling

Purposive sampling is a non-probability sampling technique in which participants are selected based on specific criteria or characteristics. Participants are typically recruited based on their knowledge, experience, or expertise related to the research topic. Purposive sampling is often used in qualitative research to obtain in-depth insights from experts or stakeholders.

Cluster Sampling

Cluster sampling is a sampling technique in which groups such as hospitals, clinics, or communities are randomly selected first, and participants are then recruited or randomly selected within each chosen cluster rather than from the entire population. Cluster sampling is often used in clinical research when it is difficult or impractical to recruit participants from the entire population.

Overall, sampling techniques in clinical research play a critical role in ensuring the validity and reliability of study results. Researchers must carefully consider the appropriate sampling technique based on the research question, study design, and population characteristics.

Factors to Consider When Selecting Sampling Techniques

Sample Size

The sample size is a crucial factor to consider when selecting a sampling technique in experimental research. It refers to the number of participants or observations that will be included in the study. The sample size determines the statistical power of the study, which is the probability of detecting a true effect if it exists. A larger sample size increases the statistical power of the study, making it more likely to detect a true effect, even if it is small.

Importance of Sample Size

The sample size is important for several reasons. First, it affects the precision of the results: a larger sample produces estimates with smaller random error, making them more reliable. Second, it affects the statistical power of the study, which determines the likelihood of detecting a true effect; a smaller sample decreases power, so small true effects in particular are likely to be missed. Third, sample size bears on generalizability but does not guarantee it: a large sample drawn with a biased technique is still biased, so representativeness depends mainly on how the sample is selected.

Determining Sample Size

The sample size should be determined based on several factors, including the research question, the level of precision required, the expected effect size, and the variability of the data. A power analysis is the standard way to do this: given the expected effect size, the variability of the data, the significance level, and the desired power (often 80%), it calculates the minimum sample size needed.
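For the common case of comparing the means of two equal-sized groups, a power analysis can be approximated with the normal distribution: n per group = 2 × (z_(1-α/2) + z_(1-β))² / d², where d is the expected standardized effect size (the mean difference divided by the standard deviation), α is the significance level, and 1 - β is the desired power. The sketch below uses only Python's standard library; it is an approximation, and dedicated power-analysis software based on the t distribution usually returns slightly larger numbers.

```python
import math
from statistics import NormalDist

def n_per_group(effect_size, alpha=0.05, power=0.80):
    """Approximate participants needed per group to compare two means (two-sided test).

    effect_size is the standardized difference d = (mean1 - mean2) / SD.
    Normal approximation: n = 2 * (z_{1-alpha/2} + z_{power})**2 / d**2, rounded up.
    """
    z = NormalDist()  # standard normal distribution
    z_alpha = z.inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_power = z.inv_cdf(power)          # about 0.84 for 80% power
    return math.ceil(2 * (z_alpha + z_power) ** 2 / effect_size ** 2)

print(n_per_group(0.5))  # 63
print(n_per_group(0.2))  # 393
```

With α = 0.05 and 80% power, a medium effect (d = 0.5) needs about 63 participants per group, while a small effect (d = 0.2) needs about 393, which shows how quickly the required sample grows as the expected effect shrinks.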

Implications of Sample Size

The sample size has several implications for the study design and data analysis. A larger sample size requires more resources, such as time and money, to collect and analyze the data. With a smaller sample, only larger effects can be detected reliably, and greater variability in the data reduces power further, so small samples are especially vulnerable when measurements are noisy.

Overall, the sample size is a critical factor to consider when selecting a sampling technique in experimental research. It affects the precision and statistical power of the results, and should be determined based on several factors, including the research question, the level of precision required, the expected effect size, and the variability of the data.

Diversity and Inclusion

When selecting a sampling technique, it is important to consider diversity and inclusion. Diversity refers to the representation of different groups within the sample, while inclusion refers to the extent to which the sample reflects the population of interest. Both diversity and inclusion are important to ensure that the sample accurately represents the population being studied and to avoid bias in the results.

There are several strategies for increasing diversity and inclusion in the sample. One approach is to use random sampling techniques, such as simple random sampling or stratified random sampling, so that the sample is representative of the population. Another approach is to oversample certain groups, such as underrepresented populations, so that they are numerous enough in the sample to analyze; population-level estimates must then be weighted to correct for the deliberate over-representation.

It is also important to consider the potential for self-selection bias when using certain sampling techniques, such as convenience sampling or snowball sampling. Self-selection bias occurs when the people who choose to take part differ systematically from those who do not, for example in their characteristics or opinions, which leads to biased results. To mitigate this bias, researchers can use controlled recruitment, such as inviting specific, randomly chosen individuals rather than accepting volunteers. Random assignment to conditions does not remove self-selection bias, but it does keep the comparison between groups fair.

In addition to diversity and inclusion, researchers should also consider other factors when selecting a sampling technique, such as cost, time, and the nature of the research question. By carefully selecting the appropriate sampling technique, researchers can ensure that their study produces valid and reliable results.

Cost and Time Constraints

When it comes to selecting sampling techniques, it is important to consider the costs and time constraints associated with each method. In some cases, certain sampling techniques may be more expensive or time-consuming than others, which can have a significant impact on the overall feasibility of a research project.

One factor to consider is the cost of data collection. For example, some sampling techniques may require specialized equipment or software that can be expensive to obtain or maintain. Additionally, some methods may require a larger sample size in order to be statistically valid, which can increase the cost of the study.

Another factor to consider is the time required to conduct the study. Some sampling techniques may be faster to implement than others, which can be important if a researcher is working with a tight deadline. However, it is important to note that some methods may require more time for data analysis and interpretation, which can impact the overall timeline of the study.

It is important to carefully weigh the costs and time constraints associated with each sampling technique in order to select the most appropriate method for a given research project. By considering these factors, researchers can ensure that they are able to conduct high-quality studies that are both feasible and practical.

Ethical Considerations

Ethical issues must also be weighed when selecting sampling techniques, to ensure that the research is conducted in a manner that respects human rights and dignity.

- Informed Consent: Informed consent is a crucial ethical consideration in experimental research. It involves obtaining permission from participants before they take part in the study. Participants should be provided with all relevant information about the study, including its purpose, procedures, risks, benefits, and confidentiality measures.

- Voluntary Participation: Participation in experimental research should be voluntary, and participants should be free to withdraw from the study at any time without any negative consequences.

- Deception: Deception is a common ethical issue in experimental research. It occurs when participants are misled about the nature or purpose of the study. Researchers should avoid deception where possible; when it is necessary, they should minimize harm and provide appropriate debriefing after the study.

- Risk of Harm: Experimental research may involve some risks of harm to participants, such as physical or psychological harm. Researchers should take all necessary precautions to minimize the risk of harm and provide appropriate care if harm occurs.

- Confidentiality: Confidentiality is an essential ethical consideration in experimental research. Researchers should ensure that participants’ personal information is kept confidential and only used for the intended purpose of the study.

- Fairness: Experimental research should be conducted in a fair manner. Participants should be selected randomly or based on specific criteria that are relevant to the study. Researchers should avoid any form of discrimination or bias in the selection process.

In summary, ethical considerations are crucial in experimental research. Researchers should obtain informed consent, ensure voluntary participation, avoid deception, minimize the risk of harm, maintain confidentiality, and conduct the study in a fair manner.

Sampling Techniques in Practice

Case Study: Random Sampling in a Psychology Experiment

Random sampling is a widely used technique in experimental research, particularly in psychology. It involves selecting participants from a population in a way that ensures that each participant has an equal chance of being selected. In this section, we will examine a case study that demonstrates the use of random sampling in a psychology experiment.

Participants

In this case study, the researcher selected 100 participants from a pool of undergraduate students at a large university. The researcher used a random number generator to select the participants, ensuring that each participant had an equal chance of being selected.

The researcher designed an experiment to investigate the effects of stress on memory performance. The experiment consisted of two phases: a stress induction phase and a memory recall phase.

During the stress induction phase, the participants were asked to give a brief impromptu speech in front of a video camera. This was designed to induce stress in the participants. The participants were then randomly assigned to one of two groups: a stress group or a control group. The stress group was asked to solve a difficult math problem, while the control group was asked to solve an easy math problem.

During the memory recall phase, the participants were asked to recall as many words as they could from a list of 20 words. The researcher measured the number of words recalled by each participant and compared the results between the stress group and the control group.

Data Analysis

The researcher analyzed the data using statistical tests to determine whether there was a significant difference in memory recall between the stress group and the control group. The results showed that the stress group recalled significantly fewer words than the control group.
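The case study does not say which statistical test was used. For two independent groups with a numeric outcome such as words recalled, one common choice is an independent-samples t-test; the sketch below computes Welch's version, which does not assume equal variances, using only the standard library. The recall scores are hypothetical, not data from the case study. A p-value requires the t distribution, for example via `scipy.stats.ttest_ind(stress, control, equal_var=False)` if SciPy is available.

```python
import math
from statistics import mean, variance

def welch_t(group_a, group_b):
    """Welch's t statistic and approximate degrees of freedom for two independent groups.

    Unlike Student's t-test, Welch's version does not assume equal variances.
    """
    se2_a = variance(group_a) / len(group_a)  # squared standard error of each mean
    se2_b = variance(group_b) / len(group_b)
    t = (mean(group_a) - mean(group_b)) / math.sqrt(se2_a + se2_b)
    df = (se2_a + se2_b) ** 2 / (
        se2_a ** 2 / (len(group_a) - 1) + se2_b ** 2 / (len(group_b) - 1)
    )
    return t, df

# Hypothetical recall scores (words out of 20), not data from the case study.
stress = [9, 11, 8, 10, 12, 9, 7, 10]
control = [12, 14, 11, 13, 15, 12, 13, 14]
t, df = welch_t(stress, control)
print(round(t, 2), round(df, 1))
```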

This case study shows randomization at two points. Selecting participants at random from the pool of undergraduates means the results can be generalized to that pool, though not necessarily to other populations, and randomly assigning them to the stress and control groups means that differences in recall can be attributed to the manipulation rather than to pre-existing differences between groups. The results of the experiment suggest that stress can have a negative impact on memory performance.

Random sampling is a useful technique in experimental research because it makes a representative sample likely and reduces the risk of selection bias. However, the sample must be large enough to give precise results, and the selection procedure must be genuinely random.

Case Study: Stratified Sampling in a Public Health Study

In this case study, we will explore the use of stratified sampling in a public health study. Stratified sampling is a technique where the population is divided into subgroups or strata based on specific criteria, and then a random sample is drawn from each stratum. This method is particularly useful when the researcher wants to ensure that the sample is representative of the population and that every stratum is represented in the sample.

Objectives of the Public Health Study

The primary objective of this public health study was to investigate the prevalence of a particular disease in a specific population and identify any potential risk factors associated with the disease.

Population and Sampling Frame

The population of interest in this study was the adult population living in a specific geographic area. The sampling frame was a list of all adults living in the area, which was obtained from the local government.

Stratification Criteria

The population was stratified based on age, gender, and socioeconomic status. The rationale behind this stratification was to ensure that the sample was representative of the population in terms of these key demographic factors.

Sampling Procedure

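The sampling frame was divided into strata defined by the three criteria above, and a random sample of 100 adults was drawn from each stratum, giving a total sample of 1,000 adults (and hence 10 strata). Because every stratum contributed the same number of participants regardless of its size, the strata were not represented in proportion to their population share, so population-level estimates need to be weighted by each stratum's share of the population.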

Data Collection and Analysis

Data was collected through a combination of self-reported surveys and medical examinations. The data was analyzed using statistical software to identify any patterns or associations between the disease and the stratified factors.
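Because this design draws the same number of adults (100) from every stratum regardless of stratum size, an overall prevalence estimate should weight each stratum by its share of the population rather than by its share of the sample. A minimal sketch with two hypothetical strata; the population sizes and case counts are invented for illustration and are not results from the study.

```python
def weighted_prevalence(strata):
    """Population prevalence estimate that weights each stratum by its population share."""
    total_pop = sum(s["pop_size"] for s in strata.values())
    return sum(
        (s["pop_size"] / total_pop) * (s["cases"] / s["sampled"]) for s in strata.values()
    )

def unweighted_prevalence(strata):
    """Naive estimate that pools all sampled people as if they were one random sample."""
    return sum(s["cases"] for s in strata.values()) / sum(s["sampled"] for s in strata.values())

# Hypothetical numbers: both strata sampled 100 people, but stratum A is four times larger.
strata = {
    "A": {"pop_size": 8000, "sampled": 100, "cases": 10},
    "B": {"pop_size": 2000, "sampled": 100, "cases": 30},
}
print(round(weighted_prevalence(strata), 3), round(unweighted_prevalence(strata), 3))  # 0.14 0.2
```

The pooled estimate (0.20) overstates prevalence because the small, high-prevalence stratum B makes up half of the sample but only a fifth of the population.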

Case Study: Cluster Sampling in a Sociology Study

Cluster sampling is a technique that involves dividing a population into smaller groups or clusters, randomly selecting some of those clusters, and then studying all or a sample of the units within them. This method is often used in sociology studies to examine social phenomena at the community level. In this case study, we will explore how cluster sampling was used in a sociology study to investigate the impact of a community-based program on crime rates.

Study Design

The study used a quasi-experimental design, in which the researchers compared crime rates in communities that received a community-based program aimed at reducing crime with control communities that did not receive the program. The study used cluster sampling to select the communities for the study.

The study used a two-stage sampling process. In the first stage, the researchers identified 20 clusters of census tracts based on their crime rates; each cluster consisted of contiguous census tracts with similar crime rates. In the second stage, the researchers randomly selected communities from within these clusters. The final sample consisted of 10 communities, with five in the treatment group and five in the control group.

Advantages and Disadvantages

Cluster sampling has several advantages, including the ability to collect data from large, geographically dispersed populations at lower cost. However, cluster sampling also has some disadvantages, such as the potential for selection bias and the loss of within-cluster variation.

In this study, the researchers used cluster sampling to work within limited resources and time, as it allowed them to collect data from multiple communities at modest cost. However, the study was also subject to selection bias, as the researchers grouped communities based on their crime rates, which could have influenced the results. Additionally, the use of clusters may have led to a loss of within-cluster variation, as the researchers may have missed important differences between communities within the same cluster.

Cluster sampling is a useful technique in sociology studies when the population is large and diverse and resources are limited. However, researchers must be aware of the potential for selection bias and the loss of within-cluster variation when using this method, both of which affected the crime-program study described here.

Best Practices for Sampling Techniques

1. Defining the Study Population

Before selecting a sample, it is crucial to define the study population. This involves identifying the individuals or units that meet the criteria for inclusion in the study. For instance, if the study is focused on college students, the study population would include all the college students who meet the inclusion criteria. Defining the study population helps to ensure that the sample is representative of the population of interest.

2. Determining Sample Size

Another best practice is to determine the appropriate sample size for the study. Sample size determination involves estimating the number of participants needed to achieve the desired level of statistical power and precision. Researchers can use sample size calculators or consult statistical experts to determine the appropriate sample size. It is important to note that underpowered samples can lead to incorrect conclusions, while overpowered samples can result in wasted resources.

3. Randomization

Randomization is a critical aspect of experimental research. It involves assigning participants to treatment groups by chance, so that known and unknown characteristics are balanced across groups on average and the researcher cannot influence who receives which treatment. Randomization can be achieved using various methods, such as simple randomization, stratified randomization, or block randomization. With equal allocation, each participant has the same chance of being assigned to any treatment group, which reduces the impact of selection bias and confounding.

4. Control of Confounding Variables

Sampling techniques in experimental research should also control for confounding variables. Confounding variables are factors that can influence the outcome of the study and may cause misleading results. Researchers should take steps to control for confounding variables by matching participants or adjusting for potential confounders in the analysis. Failure to control for confounding variables can lead to biased results and incorrect conclusions.

5. Replication and Publication Bias

Finally, best practices for sampling techniques in experimental research should address replication and publication bias. Replication refers to the process of repeating a study to confirm the results. Publication bias occurs when researchers report only positive findings or selectively publish studies that support their hypotheses. To avoid it, researchers should aim to publish all their findings, regardless of whether they are positive or negative. Replication studies with new samples can also help to confirm the validity of previous findings and reduce the impact of sampling bias.

Limitations and Future Directions

Despite the numerous advantages of sampling techniques in experimental research, there are limitations that researchers should be aware of when planning and conducting studies. Moreover, there are areas for future exploration to further enhance the accuracy and validity of experimental findings.

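- Sampling Errors: Sampling error is the difference between a sample estimate and the true population value that arises because only part of the population is observed. Random sampling error shrinks as the sample grows, but systematic error from a poorly chosen sampling technique (bias) does not, and it can lead to incorrect conclusions. Researchers should ensure that their sampling technique is appropriate for the research question and population of interest.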

- Generalizability: The generalizability of experimental findings depends on the representativeness of the sample. If the sample is not representative of the population, the results may not be generalizable to other settings or groups. Future research should focus on developing more inclusive sampling techniques to improve generalizability.

- Sample Size: The sample size is an important consideration in experimental research. Small samples may not provide sufficient statistical power to detect significant effects, while large samples may be impractical or expensive to obtain. Researchers should consider the trade-offs between sample size and other factors, such as cost and time.

- Non-Response Bias: Non-response bias occurs when non-responders differ systematically from responders. This can lead to biased results and incorrect conclusions. Future research should explore methods to reduce non-response bias, such as incentives for participation or follow-up strategies to encourage response.

- Technological Advancements: Technological advancements offer new opportunities for sampling techniques in experimental research. For example, online surveys and social media platforms provide new avenues for recruiting diverse and representative samples. Future research should explore the potential of these new technologies to improve sampling techniques and enhance experimental validity.
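The sample-size trade-off described in the list above can be made concrete with the common normal-approximation formula for comparing two group means, n = 2(z_{1-α/2} + z_{1-β})² / d² per group, where d is the standardized effect size (difference in means divided by the common standard deviation). The sketch below is an illustration under stated assumptions (two equal-sized groups, a two-sided test, normally distributed outcomes); the function name `n_per_group` is hypothetical, and power software based on the t distribution typically returns slightly larger numbers.

```python
import math
from statistics import NormalDist


def n_per_group(effect_size: float, alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate sample size per group for a two-sided, two-sample comparison of means.

    Normal approximation (an assumption): n = 2 * (z_{1-alpha/2} + z_{power})**2 / d**2,
    where d is the standardized effect size.
    """
    if effect_size <= 0:
        raise ValueError("effect_size must be positive")
    z = NormalDist()  # standard normal distribution
    z_alpha = z.inv_cdf(1 - alpha / 2)  # critical value of the two-sided test
    z_beta = z.inv_cdf(power)  # quantile matching the desired power
    n = 2 * (z_alpha + z_beta) ** 2 / effect_size ** 2
    return math.ceil(n)  # round up: fractional participants are not possible


for d in (0.2, 0.5, 0.8):
    print(f"d = {d}: {n_per_group(d)} per group")
```

With α = 0.05 and 80% power, the sketch gives 393, 63, and 25 subjects per group for d = 0.2, 0.5, and 0.8. Because n grows with 1/d², detecting an effect half as large requires roughly four times as many subjects.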

Overall, while sampling techniques have proven to be an essential component of experimental research, it is important to be aware of their limitations and potential biases. Future research should focus on developing new and innovative sampling techniques to overcome these limitations and improve the accuracy and validity of experimental findings.

Resources for Further Learning

If you are interested in learning more about sampling techniques in experimental research, there are a variety of resources available to you. Some useful places to start include:

Books

- “Experimental Design and Analysis: An Introduction” by David Blaxter

- “The Practice of Statistics in the Sciences” by Brigitte Baldi and David S. Moore

- “Design of Experiments: A Practical Perspective” by Richard J. St. Anne

Online Courses

- “Sampling and Sample Size Calculations for Clinical Research” offered by the University of Florida

- “Experimental Design and Analysis” offered by the University of Illinois at Urbana-Champaign

- “Introduction to Statistical Methods for Clinical Research” offered by the University of California, San Diego

- The Statistical Methods for Practice website (https://www.stats4stem.com/) offers a variety of resources for learning about experimental design and sampling techniques.

- The Experimental Design webpage (https://www.ncsu.edu/statistics/examples/experimental-design/) provides an overview of the different types of experimental designs and sampling techniques.

By taking advantage of these resources, you can deepen your understanding of sampling techniques in experimental research and improve your ability to design and analyze experiments.

1. What is sampling in experimental research?

Sampling is the process of selecting a subset of individuals or cases from a larger population for the purpose of studying particular characteristics or behaviors. It is an essential part of experimental research as it helps to ensure that the results obtained are representative of the population being studied.

2. What are the different types of sampling techniques in experimental research?

There are several types of sampling techniques used in experimental research, including random sampling, stratified sampling, cluster sampling, and oversampling/undersampling. Each technique has its own advantages and disadvantages, and the choice of technique depends on the research question, sample size, and population characteristics.

3. What is random sampling in experimental research?

Random sampling is a technique where every individual or case in the population has an equal chance of being selected for the sample. It is generally considered the least biased sampling technique, because chance rather than the researcher determines who is selected. Any single random sample can still differ from the population by chance (sampling error), but it does not do so systematically.
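A simple random sample can be drawn with Python's standard `random` module, as in the minimal sketch below. The sampling frame of 500 IDs and the sample size of 50 are hypothetical; `Random.sample` draws without replacement, so every ID has the same inclusion probability (50/500 = 0.1).

```python
import random

# Hypothetical sampling frame: IDs for 500 members of the population.
frame = [f"ID{i:03d}" for i in range(500)]

rng = random.Random(42)  # seeded generator so the draw can be reproduced
sample = rng.sample(frame, k=50)  # 50 distinct IDs, each equally likely to be chosen

print(sample[:5])
```

Recording the seed lets another researcher reproduce exactly the same sample from the same frame.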

4. What is stratified sampling in experimental research?

Stratified sampling is a technique where the population is divided into smaller groups or strata based on certain characteristics, and a sample is then selected from each stratum. This technique is useful when the population is heterogeneous and the researcher wants to ensure that the sample is representative of each stratum.
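The sketch below illustrates stratified sampling with proportional allocation: each stratum receives a share of the sample equal to its share of the population, and a simple random sample is drawn within each stratum. The population, the stratum labels, and the helper `stratified_sample` are hypothetical, chosen only for illustration.

```python
import random
from collections import defaultdict

rng = random.Random(7)  # seeded for reproducibility

# Hypothetical population of 1,000 people with unequal stratum sizes.
population = (
    [(i, "urban") for i in range(600)]
    + [(i, "suburban") for i in range(600, 900)]
    + [(i, "rural") for i in range(900, 1000)]
)


def stratified_sample(units, stratum_of, total_n, rng):
    """Simple random sample within each stratum, with total_n split in
    proportion to stratum size (proportional allocation).

    Note: for other population sizes, rounding can make the stratum sizes
    sum to slightly more or less than total_n.
    """
    strata = defaultdict(list)
    for unit in units:
        strata[stratum_of(unit)].append(unit)
    sample = []
    for members in strata.values():
        n_h = round(total_n * len(members) / len(units))  # stratum's share of the sample
        sample.extend(rng.sample(members, n_h))
    return sample


sample = stratified_sample(population, lambda unit: unit[1], total_n=100, rng=rng)
counts = {name: sum(1 for _, s in sample if s == name) for name in ("urban", "suburban", "rural")}
print(counts)  # {'urban': 60, 'suburban': 30, 'rural': 10}
```

Unlike a single simple random sample of 100, this design guarantees that the small rural stratum is represented by exactly 10 people.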

5. What is cluster sampling in experimental research?

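Cluster sampling is a technique where the population is divided into groups or clusters, a random selection of clusters is made, and all or some of the individuals or cases within the chosen clusters are included in the sample, rather than individuals or cases being selected directly from the whole population. This technique is useful when it is difficult or expensive to reach all individuals or cases in the population.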
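A minimal sketch of one-stage cluster sampling follows: whole clusters are chosen at random, and every member of each chosen cluster enters the sample. The 20 hypothetical "schools" of 25 people each are assumptions for illustration.

```python
import random

rng = random.Random(3)  # seeded for reproducibility

# Hypothetical population organized into 20 clusters (e.g., schools), 25 people each.
clusters = {f"school_{c:02d}": [f"s{c:02d}_p{p:02d}" for p in range(25)] for c in range(20)}

# One-stage cluster sampling: randomly choose whole clusters...
chosen = rng.sample(sorted(clusters), k=4)
# ...then include every member of each chosen cluster.
sample = [person for name in chosen for person in clusters[name]]

print(chosen)
print(len(sample))  # 4 clusters x 25 people = 100
```

Because people within a cluster tend to resemble one another, a cluster sample usually carries more sampling error than a simple random sample of the same size; the saving is in cost and logistics.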

6. What is oversampling/undersampling in experimental research?

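Oversampling and undersampling are techniques where the number of cases drawn from certain groups is increased (oversampling) or decreased (undersampling) relative to those groups' share of the population, to ensure that certain groups or characteristics are adequately represented in the sample. These techniques are useful when the population is imbalanced or when certain groups or characteristics are rare in the population. Estimates for the whole population then need to be weighted to undo the unequal selection.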
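The sketch below shows why weighting matters after oversampling. It assumes a hypothetical population in which a small group (5%) has a higher average outcome; both groups are sampled equally, and design weights (population share divided by sample share) restore each group's true influence on the overall mean.

```python
import random
from statistics import mean

rng = random.Random(11)  # seeded for reproducibility

# Hypothetical population: a large group and a small group (5%) with different outcomes.
groups = {
    "majority": [rng.gauss(50, 10) for _ in range(9_500)],
    "minority": [rng.gauss(70, 10) for _ in range(500)],
}
pop_size = sum(len(values) for values in groups.values())

# Oversample the small group: draw the same number from each group instead of 95:5.
n_per_group = 200
sample = {name: rng.sample(values, n_per_group) for name, values in groups.items()}
sample_size = n_per_group * len(groups)

# Design weight for a group = its population share / its sample share.
weights = {name: (len(groups[name]) / pop_size) / (n_per_group / sample_size) for name in groups}

unweighted = mean(y for ys in sample.values() for y in ys)
weighted = sum(weights[name] * sum(ys) for name, ys in sample.items()) / sum(
    weights[name] * len(ys) for name, ys in sample.items()
)
population_mean = mean(y for ys in groups.values() for y in ys)

print(f"population mean: {population_mean:.1f}")
print(f"unweighted mean: {unweighted:.1f}")  # pulled toward the oversampled group
print(f"weighted mean:   {weighted:.1f}")    # close to the population mean
```

Oversampling gives precise estimates for the small group itself, while the weights keep whole-population estimates from being distorted.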

7. How do sampling techniques affect experimental research results?

Sampling techniques can have a significant impact on the results of experimental research. If the sample is not representative of the population, the results may not be generalizable to the population of interest. Therefore, it is essential to carefully consider the sampling technique and ensure that it is appropriate for the research question and population characteristics.

5: Experimental Design

- Page ID 45046

- Michael R Dohm

- Chaminade University

Introduction

During this course, you will learn about statistics, yes, but my hope and goal for your experience in this class is much more than that: statistical reasoning. To have statistical reasoning skills, you need to be comfortable with the context of how data are acquired, i.e., data acquisition. It’s not just about knowing how an instrument performs; for example, values under or over an instrument’s limits of quantification lead to censored missing values, which are missing not at random (MNAR). In a broad-stroke view, data are obtained from two kinds of studies: observational studies and experimental (manipulative) studies. You will also learn what a correctly designed experiment can tell you about the world, and how a poorly designed experiment works against you. By the end of the semester, you should be familiar with the issues of Randomization, Control, Independence, and Replication.

The basics explained

Experimental design is a discipline within statistics concerned with the analysis and design of experiments. Design is intended to help researchers create experiments such that cause and effect can be established from tests of hypotheses. We introduced elements of experimental design in Chapter 2.4. Here, we expand our discussion of experimental design.

We begin our discussion with a review of definitions and an outline of an important concept in statistics. First, we need to be clear about why we measure or conduct experiments. We do so to collect data (datum is the singular) about a characteristic or trait from a population. Data are observations: they include observations and measurements from instruments. At their best, data are the “facts” of science.

Measurement, Observation, Variables, Values

Measurement is how we as scientists acquire our data (Chapter 3.4). The process of measuring involves the assignment of numbers, codes, or labels to observations according to rules established prior to any data collection (Stevens 1946, Houle et al 2011). Observations refer to the units of measurement, whereas variables are the characteristics or traits that are measured. A value refers to the particular number, score, or label assigned to a particular sample for a variable. Variables are generic, the thing being measured; values are specific to the subject or sample being measured for the variable. Each discipline in biology has its own set of variables, and samples may or may not have different values for each variable measured. Variables are summarized as a statistic (e.g., the sample mean), which is a number taken to estimate a parameter, which pertains to the population. Variables and parameters in statistics were discussed in Chapter 3.4. Because numbers, scores, or labels can be assigned according to different rules, variables may be measured on different kinds of scales or data types. The different kinds of data types were presented in Chapter 3.1.

Missing values

A missing value refers to the lack of a value for an observation or variable. Missing values can affect analysis, and many R algorithms are sensitive to, or may fail to run in, the presence of missing values. Censored values include observations for which only partial information is available. Missing data may be of three kinds, and one of them, missing not at random (MNAR), can bias the analysis. MNAR implies some observations are missing because of a systematic bias. Instrument limits of quantification are an example of systematic bias: for example, spectrophotometric absorbance readings of zero for a colorimetric assay (e.g., Bradford protein assay) may not represent complete absence of the target, but rather the lower detection limit of the instrument or the assay method (0.1 mg protein/mL in this case).

The other kinds of missing values are missing completely at random (MCAR) and missing at random (MAR). MCAR implies there is no association between any element of the experiment and the absence of a value. Analysis of MCAR data sets may support unbiased conclusions. MAR includes missingness that depends on other recorded aspects of the experiment but not on the missing value itself; for example, data may be lost because of operator error, and such errors may be more frequent for one operator or one instrument. Analysis of MAR data sets, as with MCAR data sets, may still result in unbiased conclusions; obviously, the size of the data set influences whether this claim holds. In some cases, missing values can be replaced by imputed values.
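The contrast between MCAR and MNAR can be simulated. The minimal Python sketch below assumes hypothetical protein concentrations drawn from an exponential distribution (mean about 0.2 mg/mL), a 20% random loss rate for the MCAR case, and, for the MNAR case, readings below the 0.1 mg/mL detection limit from the Bradford example treated as missing. Dropping MCAR values leaves the mean roughly unchanged; dropping values below the detection limit inflates it.

```python
import random
from statistics import mean

rng = random.Random(5)  # seeded for reproducibility

LOD = 0.1  # lower limit of detection, mg protein/mL (value from the Bradford example)

# Hypothetical true concentrations for 1,000 samples, skewed toward low values.
true_values = [rng.expovariate(1 / 0.2) for _ in range(1_000)]  # mean about 0.2 mg/mL

# MCAR: each value is lost with probability 0.2, regardless of its size.
mcar_observed = [x for x in true_values if rng.random() >= 0.2]

# MNAR: values below the detection limit are not recorded, so whether a value
# is missing depends on the (unseen) value itself.
mnar_observed = [x for x in true_values if x >= LOD]

print(f"true mean:       {mean(true_values):.3f}")
print(f"mean after MCAR: {mean(mcar_observed):.3f}")
print(f"mean after MNAR: {mean(mnar_observed):.3f}")
```

Collecting more samples does not remove the MNAR bias, because every additional sample is censored by the same rule.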

Cause & Effect

Observations or measurements gathered under controlled conditions (experiments) are essential if we are to answer questions about populations and to separate cause and effect, where one or more events are directly the result of another factor, from anecdote, a story about an event which, by itself, cannot be used to distinguish cause from association (e.g., spurious correlations, see Ch 16.2). Recall that anecdotal evidence typically comes from personal experience, where observations may be obtained by non-systematic methods. Well-designed experiments, in the classical sense, permit discrimination among competing hypotheses in large part because observations are collected according to strict rules. Well-developed hypotheses tested by well-designed experiments permit ruling out alternative explanations (sensu Platt [1964] strong inference). Observational studies, or epidemiology studies if we are talking about investigations of risk assessment, also may contribute to discussions of cause and effect (see the history of smoking research by Doll 1998).

Medicine is replete with stories about how a patient showed a particular set of symptoms, and how a physician applied a set of diagnostic protocols. An outcome was achieved, and the physician reports the outcome and circumstances related to the patient to her colleagues. This is an example of a case study, and the focus of investigation is the individual, the patient. The doctor’s report will sound like, “I tried the standard treatment given the diagnosis, and the patient’s symptoms diminished, but later returned. I tried a higher dose, but the patient’s symptoms persisted unabated.” No inferences are made to a wider set of individuals and the report is anecdotal. If observations are made on several patients, this may be a case series.

In ecology, a field biologist may notice a six-legged adult frog (Scott 1999, Alvarez et al 2021). Because frogs typically have four legs, the six-legged frog catches the biologist’s eye, and he jots down the circumstances in which the frog was found: relative humidity, air temperature, ground temperature, and where the frog was found (on a lower leaf of a philodendron plant). A water source near where the frog was found is tested for pH with a meter the biologist carries, and a water sample is taken for later testing for herbicides. The frog is collected so that it can be checked for skin parasites. Upon further inspection, the frog does indeed have parasites known to cause deformities in other frog species. However, note that this example, too, is a case study. Although the biologist makes additional observations, any conclusions about why the frog has six legs are anecdotal.

From these case studies, no conclusions can be drawn. We cannot say why the patient failed to respond to treatment, nor can we say why the frog has six legs. Why? Because these are singular events, and a variety of explanations can be given as to their causes — importantly, no controls are available, so there’s no way to distinguish among possibilities.

From such anecdotes, however, experiments can be designed. The physician may decide to recruit additional patients with the diagnosed illness and apply the standard treatment to see whether her anecdote is a single, unique event or more indicative of a problem with the treatment. The biologist may collect other frogs from the area near where he found the six-legged frog and check whether they, too, have the parasites. If additional patients fail to respond to the treatment, then the singular event is more likely to be a general phenomenon. If the normal frogs also have similar levels of the parasite, then it is unlikely that these parasites caused the malformed frog. With this simple step (recruiting similar patients, finding additional frogs), we can begin to make inferences about cause and effect and, in some cases, to generalize our findings.

This is the objective of most statistical procedures: sampling from a reference population and making distinctions between groups within the sample. The difference between observational and experimental studies, then, is how the subjects are selected with respect to the groups. In an experiment, the researcher controls and decides which subject receives the treatment; therefore, allocation to groups is manipulated by the researcher. In contrast, subjects included in an observational study have already been “assigned” to a group, but not by us. Assignment to groups such as smokers or non-smokers, Type II diabetes or no diabetes, etc. is done by nature.

Now, I do not wish to imply that research that cannot be generalized back to a reference population is worthless. Far from it. In fact, there is a strong argument for specificity. For example, much basic biological research depends on work in model organisms, which in turn may be further partitioned into specific genetic lines (cf. discussion in Rothman et al 2013). And, my goodness, consider what we have learned about the devastation to oceanic islands like Guam after the brown tree snake was introduced (Fritts and Rodda 1998). Strictly speaking, what has happened to Guam is a case history. But no one would argue that what has happened to Guam cannot happen to Hawaii and other oceanic islands (e.g., United States Public Law 108-384, the “Brown Tree Snake Control and Eradication Act of 2004”). In other words, even from case histories, generalizations can sometimes be made.

There can also be real reasons to ignore the issue of generality. One benefit of specificity is experimental control. Transgenic lines may differ by a single gene knockout or by gene duplication, and clearly the aim of such studies is to evaluate the function (hence purpose) of the gene product (or its absence) on some phenotype. In this sense it may not seem important that a transgenic mouse is not representative of a wild outbred mouse population. However, this argument is fundamentally one of expedience: such studies yield specific results, results that cannot be generalized beyond the strains involved. It ignores the issue of genetic background, that is, all of the genes that affect a trait in addition to the candidate gene under study (Sigmund 2000; Lariviere et al 2001). Transgenic mice of different inbred strains or their hybrids may carry very different alleles at other genes that influence a phenotype; hence, the same gene knockdown or other engineering may produce different outcomes. Results of genetic manipulations on inbred strains, no matter how sophisticated, are strain- or hybrid cross-specific. Thus, although technically and financially difficult, conclusions are better, and more generalizable, when studies are conducted with many different inbred lines and verified in outbred mouse populations, precisely because genetic background often influences the function of single genes (Sigmund 2000; Lariviere et al 2001).

“Causal criteria:” Logic of causation in medicine

This section is in progress: for now, a list of key points and references.

Throughout this text, the power of experimentation is emphasized. Well-designed experiments should consider …

Henle-Koch’s postulates (1877, 1882), developed from work on tuberculosis, are a set of causal criteria for establishing a link between a microorganism and a disease:

- The microorganism must be found in abundance in all organisms suffering from the disease, but should not be found in healthy organisms.

- The microorganism must be isolated from a diseased organism and grown in pure culture.

- The cultured microorganism should cause disease when introduced into a healthy organism.

- The microorganism must be reisolated from the inoculated, diseased experimental host and identified as being identical to the original specific causative agent.

Robert Koch wrote these more than 100 years ago, and understanding of infectious disease has clearly improved since. Evans’s postulates, quoted from A Dictionary of Epidemiology, 5th edition (pp. 86-87), are:

- Prevalence of the disease should be significantly higher in those exposed to the hypothesized cause than in controls not so exposed.

- Exposure to the hypothesized cause should be more frequent among those with the disease than in controls without the disease—when all other risk factors are held constant.

- Incidence of the disease should be significantly higher in those exposed to the hypothesized cause than in those not so exposed, as shown by prospective studies.

- The disease should follow exposure to the hypothesized causative agent with a normal or log-normal distribution of incubation periods.

- A spectrum of host responses should follow exposure to the hypothesized agent along a logical biological gradient from mild to severe.

- A measurable host response following exposure to the hypothesized cause should have a high probability of appearing in those lacking this before exposure (e.g., antibody, cancer cells) or should increase in magnitude if present before exposure. This response pattern should occur infrequently in persons not so exposed.

- Experimental reproduction of the disease should occur more frequently in animals or humans appropriately exposed to the hypothesized cause than in those not so exposed; this exposure may be deliberate in volunteers, experimentally induced in the laboratory, or may represent a regulation of natural exposure.

- Elimination or modification of the hypothesized cause should decrease the incidence of the disease (e.g., attenuation of a virus, removal of tar from cigarettes).

- Prevention or modification of the host’s response on exposure to the hypothesized cause should decrease or eliminate the disease (e.g., immunization, drugs to lower cholesterol, specific lymphocyte transfer factor in cancer).

- All of the relationships and findings should make biological and epidemiological sense.

Fredericks, D. N., & Relman, D. A. (1996). Sequence-based identification of microbial pathogens: a reconsideration of Koch’s postulates. Clinical Microbiology Reviews, 9(1), 18-33.

Correlation (association) does not imply causation, a well-worn truism in any application of critical thinking skills.

Association is the more general term for a possible relationship between two or more variables. A correlation in statistics generally refers to a linear association (Chapter 16); the aforementioned truism is therefore better restated as “association does not imply causation.”

However, sometimes association does point to a cause. A familiar example is the association between tobacco cigarette smoking and lung cancer. Surgeon General Luther Terry’s 1964 report (available from the National Library of Medicine) presented a strong case linking smoking to elevated risk of lung cancer and coronary artery disease.

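Bradford Hill proposed guidelines for evaluating causal effects based on epidemiology (Hill 1965, see also Sussar 1999, Fedak et al 2015). The guidelines rest on inductive reasoning; Hill himself cautioned that none of them is indisputable evidence for or against causation and none is required in every case, so they are better read as guidelines than as a set of necessary and sufficient conditions.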

- Strength of association

- Consistency of observed association

- Specificity of association

- Temporal relationship of the association

- Biological gradient, e.g., a dose-response curve

- Biological plausibility

- Coherence, the cause and effect inference should not conflict with what is known about the etiology of a disease.

Hill’s guidelines follow and extend David Hume’s (1739) causation criteria: association (Hill #1), cause precedes effect (Hill #4), and direction of connection.

An obvious objective of research is to reach valid conclusions about fundamental questions. A helpful way to distinguish the specific from the generalizable experiment is to recognize two forms of validity in research: internal validity and external validity (Elwood 2013). Internal validity is the quality of a study’s design that determines whether cause and effect can be established. Random assignment of subjects to treatment groups enhances the internal validity of the study. External validity concerns how well the assessment of cause and effect generalizes to other populations. Thus, random sampling from a reference population bears on whether the study has external validity.
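Random assignment, the step that supports internal validity, can be sketched in a few lines of Python. The 20 hypothetical subjects are split into two equal groups by shuffling with a seeded generator; this is complete randomization, one of several allocation schemes. Note that this is separate from random sampling: here the subjects are already enrolled, and chance decides only who receives the treatment.

```python
import random

# Hypothetical list of 20 enrolled subjects.
subjects = [f"subject_{i:02d}" for i in range(1, 21)]

rng = random.Random(2024)  # seed recorded so the allocation can be audited
shuffled = subjects[:]  # copy so the enrollment list is left unchanged
rng.shuffle(shuffled)

half = len(shuffled) // 2
groups = {"treatment": sorted(shuffled[:half]), "control": sorted(shuffled[half:])}

for name, members in groups.items():
    print(name, members)
```

Because the researcher does not choose who goes where, any pre-existing differences between subjects are spread across groups by chance rather than by judgment.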

Additional definitions

We proceed now with definitions. We use the term population in a special and restrictive way in statistics. Our definition includes the one you are already familiar with, but it also means more than that.

Populations are the entire group of individuals that you want to investigate. In statistics, the population is strictly the entire set of observations: if we are referring to the average body weight of house mice, we are actually referring to body weight as the population. It’s a subtle distinction, not essential for our introduction to biostatistics. When we conduct experiments and apply statistical tests on collected data, we generally intend to make inferences (draw conclusions) from our results back to the population.

Population has a strict application in statistics, but the definition also includes our general understanding of the word. Examples of populations in the general sense include:

- the entire human population existing today.

- the entire collection of U.S. citizens.

- all the individuals in an entire species.

- all individuals in a population of a species (e.g., house mice in a dairy barn in Hawai’i).

- all of us in this classroom (if we are only interested in us).

If you could measure the entire population, there would be no need to do (or learn) statistics! Populations usually number in the thousands, millions, or billions of individuals. Here, population is used in the everyday sense: a collection of individuals that share a characteristic.

A more formal definition of “population” in statistics reads as follows: A statistical population is the complete set of possible measurements on a trait or characteristic corresponding to the entire collection of sampling units for which conclusions are intended.

To conclude, in this class, when we talk about population, we will generally be using it in the everyday sense of the word. However, keep in mind that the definition is more restrictive than that and the key is to identify what sampling units are measured.

Conclusions

This is only the beginning, the basics of experimental design. Entire books are written on the subject, as you can well imagine. We will also return to the subject of Experimental Design throughout the book. We will return to random sampling in Chapter 5.5. Next we discuss distinctions between experiments and observational studies with respect to sampling of populations.

A bit of a disclaimer before proceeding: while I cite several papers for examples of experimental design in Chapter 5, readers should not read into this that I am either criticizing or endorsing the published experiments. Experimental design will always involve compromise; the trick, of course, is knowing which choices influence validity (Thompson and Panacek 2006).

- reference population

- specific versus general conclusions

- random sampling

- convenience sampling

- haphazard sampling

- research validity

- Revisit our cell experiment, “What is the sampling unit in the following cell experiment?” How would you change this experiment so that there will be biological and not just technical replication?

- All African snails on a staircase at Chaminade University are collected on a Thursday evening.

- All African snails on a staircase at Chaminade University are collected every Thursday evening for six months.

- All African snails on all staircases at Chaminade University are collected.

- African snails are studied in the lab, then returned to the areas from which they were collected. Days later, the researcher collects snails from the same area.

- African snails are studied in the lab, then returned to the areas from which they were collected. Days later, the researcher collects snails from a different area.

- A researcher wishes to study the effects of salt on mosquito larval survival. He works with Aedes species, mosquitoes that are characterized as “container-breeding”: their larvae develop where water accumulates in tree holes or indentations in rock, or even in containers left by humans (e.g., tires, flower vases, or planters). His preliminary experiment is outlined in the following table. The last column indicates the measurement that he plans to record. Identify the sampling unit. Identify the experimental unit.

- Anecdote study

- Case control study

- Cohort study

- Cross-sectional study

- Evaluate this next scenario for potential sources of bias, using the list of biases above. A researcher wants to do a population count of feral cats on campus. Feral cats are active at night, so he decides to set up a feeding station near a light post. The researcher sits all night in a parked car yards away and watches the feeding station for visits by cats. The researcher repeats these observations over the course of a week, moving the feeding station to a different campus location each night, and reports the total number of cats seen during the week as an estimate of the population size. Be able to discuss this study in terms of potential and actual bias.

- 5.1: Experiments. Required elements of an experiment, and how they differ from the elements of an observational study. Basic example of an experimental design.

- 5.2: Experimental units and sampling units. Introduction to sampling units, experimental units, and the concept of level at which units are independent within an experiment. The problem of pseudoreplication from lack of sufficient independence.

- 5.3: Replication, bias, and nuisance. Discussion of replication, bias, and nuisance variables. How each of these can impact the validity of a study.

- 5.4: Clinical trials. Outline of the types of clinical trial designs. Brief introduction to the topic of ethics in research with human subjects.

- 5.5: Importance of randomization. The importance of randomized selection in study design, in being able to draw generalizable conclusions from the study.

- 5.6: Sampling from populations. Methods of sampling from populations, and the impacts of sampling method choice. How to sample in computer programs.

- 5.7: Chapter 5 References

JMP | Statistical Discovery.™ From SAS.

Statistics Knowledge Portal

A free online introduction to statistics

Design of experiments

What is design of experiments?

Design of experiments (DOE) is a systematic, efficient method that enables scientists and engineers to study the relationship between multiple input variables (aka factors) and key output variables (aka responses). It is a structured approach for collecting data and making discoveries.

When to use DOE?

- To determine whether a factor, or a collection of factors, has an effect on the response.

- To determine whether factors interact in their effect on the response.

- To model the behavior of the response as a function of the factors.

- To optimize the response.

Ronald Fisher first introduced four enduring principles of DOE in 1926: the factorial principle, randomization, replication, and blocking. In the past, generating and analyzing these designs relied primarily on hand calculation; only recently have practitioners started using computer-generated designs for a more effective and efficient DOE.

Why use DOE?

DOE is useful:

- In driving knowledge of cause and effect between factors.

- To experiment with all factors at the same time.

- To run trials that span the potential experimental region for our factors.

- In enabling us to understand the combined effect of the factors.

To illustrate the importance of DOE, let’s look at what happens without it.

Experiments are likely to be carried out via the trial-and-error method or the one-factor-at-a-time (OFAT) method.

Trial-and-error method

Test different settings of two factors and see what the resulting yield is.

Say we want to determine the optimal temperature and time settings that will maximize yield through experiments.

How the experiment looks using the trial-and-error method:

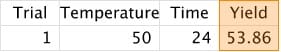

1. Conduct a trial at starting values for the two variables and record the yield:

2. Adjust one or both values based on our results:

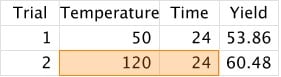

3. Repeat Step 2 until we think we've found the best set of values:

As you can tell, the cons of trial-and-error are:

- Inefficient, unstructured and ad hoc (worse if carried out without subject matter knowledge).

- Unlikely to find the optimum set of conditions across two or more factors.

One-factor-at-a-time (OFAT) method

Change the value of one factor and measure the response, then repeat the process with another factor.

Here is how the same experiment, searching for the optimal temperature and time to maximize yield, looks using the OFAT method:

1. Start with temperature: Find the temperature resulting in the highest yield, between 50 and 120 degrees.

1a. Run a total of eight trials. Each trial increases temperature by 10 degrees (i.e., 50, 60, 70 ... all the way to 120 degrees).

1b. Keep time fixed at 20 hours as a controlled variable.

1c. Measure yield for each batch.

2. Run the second experiment by varying time, to find the optimal value of time (between 4 and 24 hours).

2a. Run a total of six trials. Each trial increases time by 4 hours (i.e., 4, 8, 12… up to 24 hours).

2b. Keep temperature fixed at 90 degrees as a controlled variable.

2c. Measure yield for each batch.

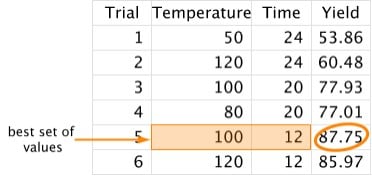

3. After a total of 14 trials, we’ve identified that the maximum yield (86.7%) occurs when:

- Temperature is 90 degrees and time is 12 hours.
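The OFAT plan above is easy to enumerate. The Python sketch below is illustrative only: the helper name `ofat_plan` is hypothetical, not part of JMP or any library. It lists every (temperature, time) setting from steps 1 and 2 and confirms the trial count.

```python
# Hypothetical sketch: enumerate the OFAT trial settings described above.
# Each trial is a (temperature_degrees, time_hours) pair.


def ofat_plan(temps, times, fixed_time, fixed_temp):
    """Vary temperature at a fixed time, then vary time at a fixed temperature."""
    stage1 = [(temp, fixed_time) for temp in temps]   # step 1: time held constant
    stage2 = [(fixed_temp, hours) for hours in times]  # step 2: temperature held constant
    return stage1 + stage2


temps = list(range(50, 121, 10))   # 50, 60, ..., 120 degrees (8 levels)
times = list(range(4, 25, 4))      # 4, 8, ..., 24 hours (6 levels)

plan = ofat_plan(temps, times, fixed_time=20, fixed_temp=90)
print(len(plan))   # 14
print(plan[:3])    # [(50, 20), (60, 20), (70, 20)]
```

Note that the setting (90 degrees, 20 hours) appears in both stages; the count of 14 treats these as two separate trials.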

As you can already tell, OFAT is a more structured approach than trial and error.

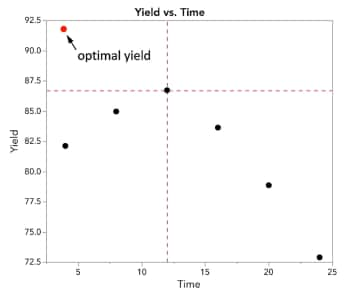

But there’s one major problem with OFAT: what if the optimal temperature and time settings look more like this?

Our previous OFAT experiments would have missed these optimal temperature and time settings.

Therefore, OFAT’s con is:

- We’re unlikely to find the optimum set of conditions across two or more factors.

Here is how our trial-and-error and OFAT experiments look:

Notice that none of them has trials conducted at a low temperature and time AND near optimum conditions.

What went wrong in the experiments?

- We didn't simultaneously change the settings of both factors.

- We didn't conduct trials throughout the potential experimental region.

The result was a lack of understanding of the combined effect of the two variables on the response. The two factors did interact in their effect on the response!
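To see why OFAT can miss the optimum when factors interact, here is a small Python simulation. The yield function `yield_pct` is entirely hypothetical (a made-up surface with a diagonal ridge, not the data behind the plots above), and `ofat` and `grid_search` are illustrative helpers. OFAT follows the two-step procedure described earlier, holding temperature at the step-1 winner during step 2. The exhaustive grid search (48 trials) is used only to locate the true optimum of this made-up surface for comparison, not as a recommended design.

```python
from itertools import product

# Factor levels taken from the OFAT ranges in the example.
TEMPS = range(50, 121, 10)   # 50, 60, ..., 120 degrees (8 levels)
TIMES = range(4, 25, 4)      # 4, 8, ..., 24 hours (6 levels)


def yield_pct(temp, hours):
    """HYPOTHETICAL yield surface, made up for illustration.

    The (u - v) term creates a diagonal ridge, so the best time depends on
    the temperature: the two factors interact.
    """
    u = (temp - 80) // 10    # coded temperature (exact for the levels above)
    v = (hours - 12) // 4    # coded time (exact for the levels above)
    return max(0, 90 - 5 * (u - v) ** 2 - (u + v - 2) ** 2)


def ofat(fixed_time):
    """One factor at a time: sweep temperature, then sweep time."""
    best_temp = max(TEMPS, key=lambda T: yield_pct(T, fixed_time))
    best_time = max(TIMES, key=lambda t: yield_pct(best_temp, t))
    return best_temp, best_time


def grid_search():
    """Evaluate every temperature/time combination (48 trials)."""
    return max(product(TEMPS, TIMES), key=lambda s: yield_pct(*s))


o = ofat(fixed_time=20)
g = grid_search()
print(o, yield_pct(*o))   # (100, 20) 86
print(g, yield_pct(*g))   # (90, 16) 90
```

On this surface, OFAT stops at 100 degrees and 20 hours with 86% yield, while the true optimum (90 degrees, 16 hours, 90%) lies diagonally away from it, in a direction that changing one factor at a time never explores.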

A more effective and efficient approach to experimentation is to use statistically designed experiments (DOE).

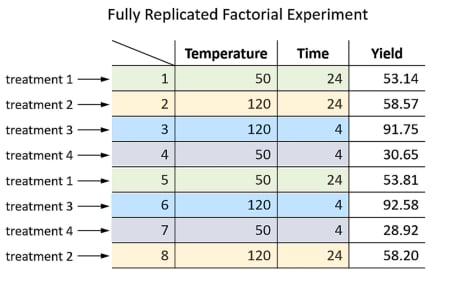

Apply a full factorial DOE to the same example

1. Experiment with two factors, each factor with two values.

These four trials form the corners of the design space:

2. Run all possible combinations of factor levels in random order, to average out the effects of lurking variables.

3. (Optional) Replicate the entire design by running each treatment twice, to estimate experimental error:

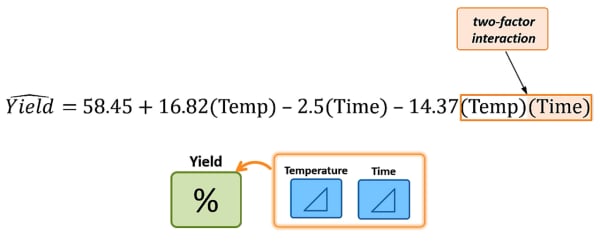

4. Analyzing the results enables us to build a statistical model that estimates the individual effects (Temperature & Time) and also their interaction.

It enables us to visualize and explore the interaction between the factors. An illustration of what their interaction looks like at temperature = 120; time = 4:

You can visualize and explore your model, and find the most desirable settings for your factors, using the JMP Prediction Profiler.
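Steps 1 to 3 of the full factorial procedure can be sketched in Python as a run-plan generator. This is a minimal sketch under stated assumptions: the low/high levels in `LEVELS` are assumed (the endpoints of the OFAT ranges), and `full_factorial` and `run_plan` are hypothetical helpers, not JMP functions. It builds every combination of factor levels, replicates each treatment, and shuffles the run order with a seeded random generator so the plan is reproducible.

```python
import random
from itertools import product

# ASSUMED low/high levels: the endpoints of the OFAT ranges, for illustration.
LEVELS = {"temperature": (50, 120), "time": (4, 24)}


def full_factorial(levels):
    """Every combination of factor levels (2 x 2 = 4 corner treatments here)."""
    names = list(levels)
    return [dict(zip(names, combo)) for combo in product(*levels.values())]


def run_plan(levels, replicates=2, seed=None):
    """Replicate each treatment, then shuffle the run order."""
    runs = [dict(t) for t in full_factorial(levels) for _ in range(replicates)]
    random.Random(seed).shuffle(runs)   # random order averages out lurking variables
    return runs


for i, run in enumerate(run_plan(LEVELS, replicates=2, seed=1), start=1):
    print(i, run)
```

With two replicates this gives 8 runs, each of the 4 treatments appearing twice in a random order.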

Summary: DOE vs. OFAT/Trial-and-Error

- DOE requires fewer trials.

- DOE is more effective in finding the best settings to maximize yield.

- DOE enables us to derive a statistical model to predict results as a function of the two factors and their combined effect.
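For a two-level design with one response per corner, a model with both main effects and their interaction, y = b0 + b1*x1 + b2*x2 + b12*x1*x2 in coded units (-1 = low, +1 = high), can be fitted by hand because the design columns are orthogonal: each least-squares coefficient is just an average of signed responses. The Python sketch below uses made-up yields (not the article's results) and hypothetical helper names `fit_2x2` and `predict`.

```python
# HYPOTHETICAL yields (%) at the four corners of a 2^2 design, in coded units:
# x1 = temperature, x2 = time, -1 = low level, +1 = high level.
y = {(-1, -1): 60.0, (1, -1): 85.0, (-1, 1): 75.0, (1, 1): 70.0}


def fit_2x2(y):
    """Least-squares coefficients of y = b0 + b1*x1 + b2*x2 + b12*x1*x2.

    The design columns are orthogonal, so each coefficient reduces to the
    average of the responses multiplied by that column's signs.
    """
    n = len(y)
    b0 = sum(y.values()) / n
    b1 = sum(x1 * v for (x1, _), v in y.items()) / n
    b2 = sum(x2 * v for (_, x2), v in y.items()) / n
    b12 = sum(x1 * x2 * v for (x1, x2), v in y.items()) / n
    return b0, b1, b2, b12


def predict(coefs, x1, x2):
    """Predicted response at coded settings (x1, x2)."""
    b0, b1, b2, b12 = coefs
    return b0 + b1 * x1 + b2 * x2 + b12 * x1 * x2


coefs = fit_2x2(y)
print(coefs)                  # (72.5, 5.0, 0.0, -7.5)
print(predict(coefs, 0, 0))   # 72.5, the center of the design space
```

The negative interaction coefficient means that, for these made-up numbers, raising temperature helps at the short time (slope 5 + 7.5 = 12.5 per coded unit) but slightly hurts at the long time (5 - 7.5 = -2.5). With a replicated design, fitting the mean response at each corner gives the same coefficients.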



