Hypothesis Testing – A Complete Guide with Examples

Published by Alvin Nicolas on August 14th, 2021, Revised on October 26, 2023

In statistics, hypothesis testing is a critical tool. It allows us to make informed decisions about populations based on sample data. Whether you are a researcher trying to prove a scientific point, a marketer analysing A/B test results, or a manufacturer ensuring quality control, hypothesis testing plays a pivotal role. This guide aims to introduce you to the concept and walk you through real-world examples.

What is a Hypothesis and Hypothesis Testing?

A hypothesis is a belief or assumption that is put forward to be tested, so that the evidence can either support or contradict it. A research hypothesis is more specific: it is a prediction answering a research question, which the researcher sets out to test through investigation.

What is Hypothesis Testing?

Hypothesis testing is a method for making decisions and drawing conclusions from data using a statistical approach. It tests a theory by checking whether or not the sample data support it. A research hypothesis is a predictive statement, tested using scientific methods, that relates an independent variable to a dependent variable.

Example: The academic performance of student A is better than that of student B.

Characteristics of the Hypothesis to be Tested

A hypothesis should be:

- Clear and precise

- Capable of being tested

- Able to relate to a variable

- Stated in simple terms

- Consistent with known facts

- Limited in scope and specific

- Tested in a limited timeframe

- Able to explain the facts in detail

What is a Null Hypothesis and Alternative Hypothesis?

A null hypothesis states that there is no significant relationship between the independent and dependent variables.

In simple words, it is a default claim that has been put forward but not yet tested. A researcher typically aims to find evidence against it. The abbreviation “Ho” is used to denote a null hypothesis.

If you want to compare two methods and assume that both methods are equally good, this assumption is considered the null hypothesis.

Example: In an automobile trial, you assume that the new vehicle’s average mileage is the same as that of the previous model. You can write it as: Ho: there is no difference between the mileage of the two vehicles. If your findings contradict this assumption strongly enough, you reject Ho in favour of the alternative hypothesis.

If you assume that one method is better than another method, then it’s considered an alternative hypothesis. The alternative hypothesis is the theory that a researcher seeks to prove and is typically denoted by H1 or HA.

If you do not reject the null hypothesis, you are not supporting the alternative hypothesis. Similarly, if you reject the null hypothesis, you are concluding in favour of the alternative hypothesis.

Example: In an automobile trial, you believe that the new vehicle’s mileage is better than that of the previous model. You can write it as: Ha: the two vehicles have different mileage; on average, the fuel consumption of the new model is better than that of the previous model.

If a null hypothesis is rejected even though it is true, this is a type-I error. On the other hand, if you fail to reject a null hypothesis even though it is false, because the test could not detect its falseness, this is a type-II error.
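The idea of a type-I error can be illustrated with a small simulation. The sketch below is only an illustration under stated assumptions: the function name `type_one_error_rate`, the sample size, the number of trials and the seed are all hypothetical choices, and the data are drawn from a standard normal distribution so that the null hypothesis (mean = 0) is true by construction. Every rejection it counts is therefore a type-I error, and the rejection rate should land close to the chosen significance level.

```python
import random
from math import sqrt
from statistics import NormalDist


def type_one_error_rate(trials=2000, n=30, alpha=0.05, seed=0):
    """Estimate how often a TRUE null hypothesis (mean = 0) gets rejected."""
    rng = random.Random(seed)  # fixed seed so the run is repeatable
    critical = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided cutoff
    rejections = 0
    for _ in range(trials):
        # Sample from N(0, 1): the null hypothesis really holds here.
        sample = [rng.gauss(0, 1) for _ in range(n)]
        sample_mean = sum(sample) / n
        z = sample_mean * sqrt(n)  # (mean - 0) / (1 / sqrt(n))
        if abs(z) > critical:
            rejections += 1  # type-I error: H0 rejected although true
    return rejections / trials


rate = type_one_error_rate()
print(f"Estimated type-I error rate: {rate:.3f}")
```

A type-II error could be explored the same way by drawing the samples from a distribution whose mean is not 0 and counting how often the test fails to reject.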

How to Conduct Hypothesis Testing?

Here is a step-by-step guide on how to conduct hypothesis testing.

Step 1: State the Null and Alternative Hypothesis

Once you develop a research hypothesis, it’s important to state it as a null hypothesis (Ho) and an alternative hypothesis (Ha) so that you can test it statistically.

The null hypothesis is the statement that is tested directly. You conclude in favour of the alternative hypothesis only when the null hypothesis has been rejected.

Example: You want to identify a relationship between obesity in men and women and the modern lifestyle. You develop a hypothesis that women, on average, gain weight more quickly than men. Then you write it as: Ho: Women, on average, don’t gain weight more quickly than men. Ha: Women, on average, gain weight more quickly than men.

Step 2: Data Collection

Hypothesis testing follows the statistical method, and statistics are all about data. Because it is rarely possible to gather complete information about the whole population you want to study, you collect data from a sufficiently large sample drawn from that population.

Example: Suppose you want to test the difference in the rate of obesity between men and women. You should include an equal number of men and women in your sample. Then investigate various aspects such as their lifestyle, eating patterns and profession, and any other variables that may influence average weight. You should also determine your study’s scope: whether it applies to a specific population group or to the worldwide population. You can use available information from various places, countries, and regions.

Step 3: Select Appropriate Statistical Test

There are many types of statistical tests, but here we discuss the two most common forms: one-sided and two-sided tests.

Note: Your choice of the type of test depends on the purpose of your study.

One-sided Test

In a one-sided test, the values that lead to rejecting the null hypothesis lie in one tail of the probability distribution: the rejection region is either entirely below or entirely above the critical value of the test. It is also called a one-tailed test of significance.

Example: Suppose you want to test whether all mangoes in a basket are ripe. You can write it as: Ho: All mangoes in the basket, on average, are ripe. If you find only ripe mangoes in the basket, your data give you no reason to reject the null hypothesis.

Two-sided Test

In a two-sided test, the values that lead to rejecting the null hypothesis lie in both tails of the probability distribution: the rejection region is below the lower critical value or above the upper critical value of the test. It is also called a two-tailed test of significance.

Example: Nothing can be said explicitly about whether all mangoes in the basket are ripe. If you reject the null hypothesis (Ho: All mangoes in the basket, on average, are ripe), it means that not all mangoes in the basket are likely to be ripe. A few mangoes could be raw as well.
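To make the difference between the two rejection regions concrete, here is a minimal sketch that assumes the test statistic follows a standard normal distribution (a z-test). The helper names `rejection_region` and `in_rejection_region` are hypothetical and exist only for this illustration; the critical values come from Python's standard-library `statistics.NormalDist`.

```python
from statistics import NormalDist


def rejection_region(alpha=0.05, tail="two-sided"):
    """Return (lower, upper) critical z values; None means no cutoff on that side."""
    std_normal = NormalDist()
    if tail == "two-sided":
        cutoff = std_normal.inv_cdf(1 - alpha / 2)  # alpha is split over both tails
        return (-cutoff, cutoff)
    if tail == "greater":
        return (None, std_normal.inv_cdf(1 - alpha))  # all of alpha in the upper tail
    if tail == "less":
        return (std_normal.inv_cdf(alpha), None)  # all of alpha in the lower tail
    raise ValueError("tail must be 'two-sided', 'greater' or 'less'")


def in_rejection_region(z, region):
    """True when the test statistic z falls in the rejection region."""
    lower, upper = region
    return (lower is not None and z < lower) or (upper is not None and z > upper)


one_sided = rejection_region(0.05, "greater")
two_sided = rejection_region(0.05, "two-sided")
print("one-sided region:", one_sided)
print("two-sided region:", two_sided)

# The same statistic can be significant in one design and not in the other.
z = 1.8
print(in_rejection_region(z, one_sided), in_rejection_region(z, two_sided))
```

With a 5% significance level, a statistic of 1.8 lies beyond the one-sided cutoff but inside the two-sided cutoffs, which is why the choice between the two designs should be made before looking at the data.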

Step 4: Select the Level of Significance

The significance level is the probability of rejecting a null hypothesis when it is actually true; in other words, it is the probability of a type-I error. It should be set as low as is practical, because a type-I error is considered severe and should be avoided.

A low significance level protects researchers from making false claims.

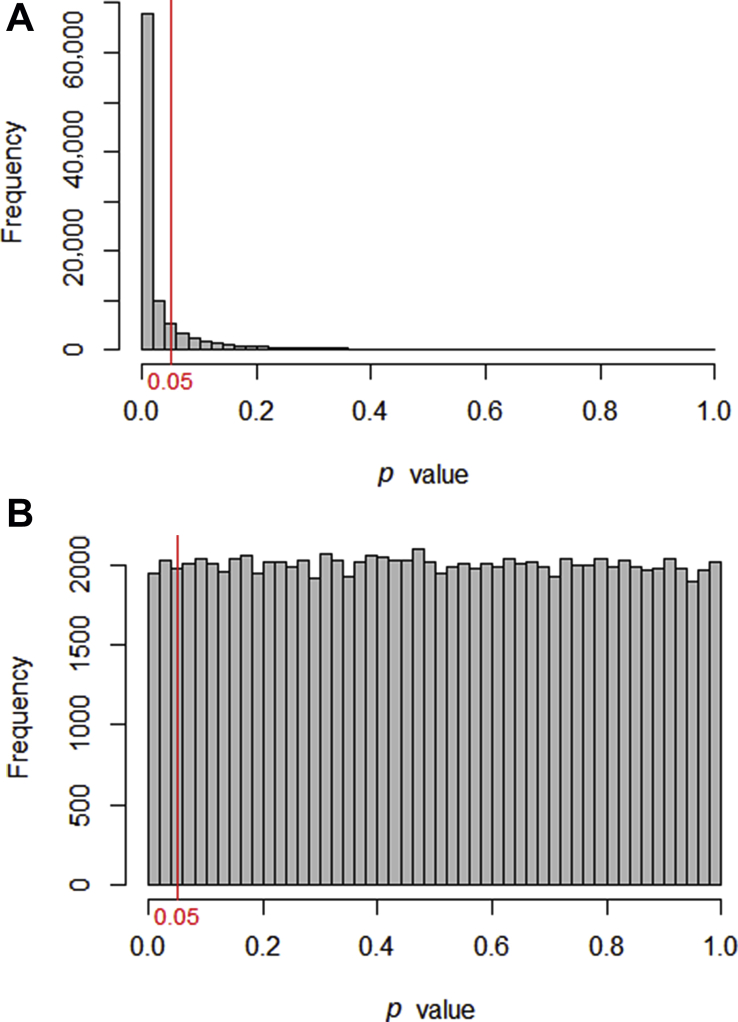

The significance level is denoted by α and is conventionally set to 0.05 (α = 0.05). The P-value obtained from the test is compared against it.

If the P-value is less than 0.05, the difference is statistically significant. If the P-value is 0.05 or higher, the difference is non-significant.

Example: Suppose you apply a one-sided test to check whether women gain weight more quickly than men. You compare the average weight gain of men and women, together with the factors promoting weight gain.

Step 5: Find out Whether the Null Hypothesis is Rejected or Supported

After conducting a statistical test, you should determine whether or not your null hypothesis is rejected, based on the test results. To do this, compare the P-value with your significance level.

Example: If the P-value of your test is less than 0.05 (5%), you reject your null hypothesis (Ho: Women, on average, don’t gain weight quickly compared to men). Rejecting the null hypothesis means that the data support the alternative hypothesis (Ha: Women, on average, gain weight quickly compared to men). If your test’s P-value is above 0.05 (5%), you do not have enough evidence to reject the null hypothesis; this does not prove that the null hypothesis is true.
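As a rough end-to-end illustration of this step, the sketch below compares two groups and applies the 0.05 threshold. Several things here are assumptions rather than part of the guide: the weight-gain figures are invented purely for demonstration, the function name `permutation_p_value` is hypothetical, and the P-value is obtained with a permutation test, a technique chosen because it needs only the standard library (the guide itself does not prescribe a specific test).

```python
import random
from statistics import mean


def permutation_p_value(group_a, group_b, n_permutations=5000, seed=0):
    """One-sided permutation test of H0: no difference vs Ha: mean(a) > mean(b)."""
    rng = random.Random(seed)  # fixed seed so the run is repeatable
    observed = mean(group_a) - mean(group_b)
    pooled = list(group_a) + list(group_b)
    n_a = len(group_a)
    at_least_as_extreme = 0
    for _ in range(n_permutations):
        # Under H0 the group labels are arbitrary, so reshuffle them.
        rng.shuffle(pooled)
        diff = mean(pooled[:n_a]) - mean(pooled[n_a:])
        if diff >= observed:
            at_least_as_extreme += 1
    # Add 1 to numerator and denominator so the estimate is never exactly 0.
    return (at_least_as_extreme + 1) / (n_permutations + 1)


# Hypothetical yearly weight gain in kg; NOT real study data.
women = [3.1, 2.8, 3.5, 3.0, 2.9, 3.3, 3.2, 3.4]
men = [2.0, 1.8, 2.2, 1.9, 2.1, 2.3, 1.7, 2.0]

alpha = 0.05
p_value = permutation_p_value(women, men)
decision = "reject Ho" if p_value < alpha else "do not reject Ho"
print(f"P-value = {p_value:.4f}: {decision}")
```

Because the invented groups do not overlap at all, almost no reshuffling produces a difference as large as the observed one, so the P-value falls well below 0.05.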

Step 6: Present the Outcomes of your Study

The final step is to present the outcomes of your study. You need to establish whether or not you have met the objectives of your research.

In the discussion section and conclusion, you can present your findings by using supporting evidence and conclude whether your null hypothesis was rejected or supported.

In the results section, you can summarise your study’s outcomes, including the average difference between the two groups and the P-value.

For the study discussed above, the findings could be reported as follows:

Example: In the study of whether women gain weight more quickly than men, we found that the P-value is less than 0.05. Hence, we can reject the null hypothesis (Ho: Women, on average, don’t gain weight more quickly than men) and conclude that women are likely to gain weight more quickly than men.

Did you know that in your academic paper you should not say that you have accepted the null hypothesis?

Always remember that you either reject Ho in favour of Ha or do not reject Ho. You should never say that you reject Ha or accept Ha.

Suppose your null hypothesis is not rejected in the hypothesis test. Concluding “do not reject Ho” doesn’t mean that the null hypothesis is true. It only means that there is a lack of evidence against Ho in favour of Ha. If, on the other hand, Ho is rejected, then the alternative hypothesis is likely to be true.

Example: We found that the P-value is less than 0.05. Hence, we reject Ho (Women, on average, don’t gain weight more quickly than men) in favour of Ha (Women are likely to gain weight more quickly than men).
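The reporting convention above can be captured in a few lines. This is only a sketch: `report_conclusion` is a hypothetical helper, and the 0.05 default is the conventional threshold rather than a fixed rule. Note that neither branch ever says that a hypothesis is “accepted”.

```python
def report_conclusion(p_value, alpha=0.05):
    """Phrase the outcome of a test in terms of Ho only, never 'accepting' anything."""
    if not 0 <= p_value <= 1:
        raise ValueError("p_value must lie between 0 and 1")
    if p_value < alpha:
        return f"p = {p_value:.3f} < {alpha}: reject H0 in favour of Ha"
    return f"p = {p_value:.3f} >= {alpha}: do not reject H0"


print(report_conclusion(0.012))
print(report_conclusion(0.300))
```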

Frequently Asked Questions

What are the 3 types of hypothesis tests?

The 3 types of hypothesis tests are:

- One-Sample Test: Compare sample data to a known population value.

- Two-Sample Test: Compare means between two sample groups.

- ANOVA: Analyze variance among multiple groups to determine significant differences.

What is a hypothesis?

A hypothesis is a proposed explanation or prediction about a phenomenon, often based on observations. It serves as a starting point for research or experimentation, providing a testable statement that can either be supported or refuted through data and analysis. In essence, it’s an educated guess that drives scientific inquiry.

What is a null hypothesis?

A null hypothesis (often denoted as H0) suggests that there is no effect or difference in a study or experiment. It represents a default position or status quo. Statistical tests evaluate data to determine if there’s enough evidence to reject this null hypothesis.

What is the probability value?

The probability value, or p-value, is a measure used in statistics to determine the significance of an observed effect. It indicates the probability of obtaining the observed results, or more extreme, if the null hypothesis were true. A small p-value (typically <0.05) suggests evidence against the null hypothesis, warranting its rejection.

What is a p-value?

The p-value is a fundamental concept in statistical hypothesis testing. It represents the probability of observing a test statistic as extreme, or more so, than the one calculated from sample data, assuming the null hypothesis is true. A low p-value suggests evidence against the null, possibly justifying its rejection.
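As an illustration of this definition, the sketch below turns a test statistic into a p-value. It assumes the statistic is a z-score that follows a standard normal distribution when the null hypothesis is true; the function name `z_test_p_value` and its `alternative` argument are hypothetical names chosen for this example.

```python
from statistics import NormalDist


def z_test_p_value(z, alternative="two-sided"):
    """Probability, under H0, of a z statistic at least as extreme as the one observed."""
    std_normal = NormalDist()
    if alternative == "greater":
        return 1 - std_normal.cdf(z)  # upper tail only
    if alternative == "less":
        return std_normal.cdf(z)  # lower tail only
    if alternative == "two-sided":
        return 2 * (1 - std_normal.cdf(abs(z)))  # both tails
    raise ValueError("alternative must be 'two-sided', 'greater' or 'less'")


for z in (0.5, 1.96, 3.0):
    print(f"z = {z}: two-sided p-value = {z_test_p_value(z):.4f}")
```

The further the statistic lies from zero, the smaller the p-value; a z-score of 1.96 corresponds to a two-sided p-value of about 0.05.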

What is a t-test?

A t-test is a statistical test used to compare means, either between two groups or between one sample and a reference value. It determines if observed differences are statistically significant or if they likely occurred by chance. Commonly applied in research, there are different t-tests, including independent, paired, and one-sample, tailored to various data scenarios.

When to reject null hypothesis?

Reject the null hypothesis when the test statistic falls into a predefined rejection region or when the p-value is less than the chosen significance level (commonly 0.05). This suggests that the observed data is unlikely under the null hypothesis, indicating evidence for the alternative hypothesis. Always consider the study’s context.

Hypothesis Testing: Definition, Uses, Limitations + Examples

Hypothesis testing is as old as the scientific method and is at the heart of the research process.

Research exists to validate or disprove assumptions about various phenomena. The process of validation involves testing and it is in this context that we will explore hypothesis testing.

What is a Hypothesis?

A hypothesis is a calculated prediction or assumption about a population parameter based on limited evidence. The whole idea behind hypothesis formulation is testing: the researcher subjects his or her calculated assumption to a series of evaluations to know whether it is true or false.

Typically, every research study starts with a hypothesis: the investigator makes a claim and experiments to prove that this claim is true or false. For instance, if you predict that students who drink milk before class perform better than those who don’t, then this becomes a hypothesis that can be confirmed or refuted using an experiment.

What are the Types of Hypotheses?

1. Simple Hypothesis

Also known as a basic hypothesis, a simple hypothesis suggests that an independent variable is responsible for a corresponding dependent variable. In other words, an occurrence of the independent variable inevitably leads to an occurrence of the dependent variable.

Typically, simple hypotheses are considered as generally true, and they establish a causal relationship between two variables.

Examples of Simple Hypothesis

- Drinking soda and other sugary drinks can cause obesity.

- Smoking cigarettes daily leads to lung cancer.

2. Complex Hypothesis

A complex hypothesis is also known as a modal. It accounts for the causal relationship between two or more independent variables and the resulting dependent variables. This means that the combination of the independent variables leads to the occurrence of the dependent variables.

Examples of Complex Hypotheses

- Adults who do not smoke and drink are less likely to develop liver-related conditions.

- Global warming causes icebergs to melt which in turn causes major changes in weather patterns.

3. Null Hypothesis

As the name suggests, a null hypothesis is formed when a researcher suspects that there’s no relationship between the variables in an observation. In this case, the purpose of the research is to prove or disprove this assumption.

Examples of Null Hypothesis

- There is no significant change in a student’s performance if they drink coffee or tea before classes.

- There’s no significant change in the growth of a plant if one uses distilled water only or vitamin-rich water.

4. Alternative Hypothesis

To disprove a null hypothesis, the researcher has to come up with an opposite assumption; this assumption is known as the alternative hypothesis. This means if the null hypothesis says that A is false, the alternative hypothesis assumes that A is true.

An alternative hypothesis can be directional or non-directional depending on the direction of the difference. A directional alternative hypothesis specifies the direction of the tested relationship, stating that one variable is predicted to be larger or smaller than the null value while a non-directional hypothesis only validates the existence of a difference without stating its direction.

Examples of Alternative Hypotheses

- Starting your day with a cup of tea instead of a cup of coffee can make you more alert in the morning.

- The growth of a plant improves significantly when it receives distilled water instead of vitamin-rich water.

5. Logical Hypothesis

Logical hypotheses are some of the most common types of calculated assumptions in systematic investigations. It is an attempt to use your reasoning to connect different pieces in research and build a theory using little evidence. In this case, the researcher uses any data available to him, to form a plausible assumption that can be tested.

Examples of Logical Hypothesis

- Waking up early helps you to have a more productive day.

- Beings from Mars would not be able to breathe the air in the atmosphere of the Earth.

6. Empirical Hypothesis

After forming a logical hypothesis, the next step is to create an empirical or working hypothesis. At this stage, your logical hypothesis undergoes systematic testing to prove or disprove the assumption. An empirical hypothesis is subject to several variables that can trigger changes and lead to specific outcomes.

Examples of Empirical Hypothesis

- People who eat more fish run faster than people who eat meat.

- Women taking vitamin E grow hair faster than those taking vitamin K.

7. Statistical Hypothesis

When forming a statistical hypothesis, the researcher examines the portion of a population of interest and makes a calculated assumption based on the data from this sample. A statistical hypothesis is most common with systematic investigations involving a large target audience. Here, it’s impossible to collect responses from every member of the population so you have to depend on data from your sample and extrapolate the results to the wider population.

Examples of Statistical Hypothesis

- 45% of students in Louisiana have middle-income parents.

- 80% of the UK’s population gets a divorce because of irreconcilable differences.

What is Hypothesis Testing?

Hypothesis testing is an assessment method that allows researchers to determine the plausibility of a hypothesis. It involves testing an assumption about a specific population parameter to know whether it’s true or false. These population parameters include variance, standard deviation, and median.

Typically, hypothesis testing starts with developing a null hypothesis and then performing several tests that support or reject the null hypothesis. The researcher uses test statistics to compare the association or relationship between two or more variables.

Researchers also use hypothesis testing to calculate the coefficient of variation and determine if the regression relationship and the correlation coefficient are statistically significant.

How Hypothesis Testing Works

The basis of hypothesis testing is to examine and analyze the null hypothesis and alternative hypothesis to know which one is the most plausible assumption. Since both assumptions are mutually exclusive, only one can be true. In other words, the occurrence of a null hypothesis destroys the chances of the alternative coming to life, and vice-versa.

What Are The Stages of Hypothesis Testing?

To successfully confirm or refute an assumption, the researcher goes through five (5) stages of hypothesis testing:

- Determine the null hypothesis

- Specify the alternative hypothesis

- Set the significance level

- Calculate the test statistics and corresponding P-value

- Draw your conclusion

- Determine the Null Hypothesis

As mentioned earlier, hypothesis testing starts with creating a null hypothesis, which stands as the assumption that a certain claim is false or implausible. For example, the null hypothesis (H0) could suggest that different subgroups in the research population react to a variable in the same way.

- Specify the Alternative Hypothesis

Once you know the variables for the null hypothesis, the next step is to determine the alternative hypothesis. The alternative hypothesis counters the null assumption by suggesting the statement or assertion is true. Depending on the purpose of your research, the alternative hypothesis can be one-sided or two-sided.

Using the example we established earlier, the alternative hypothesis may argue that the different sub-groups react differently to the same variable based on several internal and external factors.

- Set the Significance Level

Many researchers use a 5% significance level. This means that they tolerate a 0.05 chance of going with the alternative hypothesis even though the null hypothesis is actually true.

Something to note here is that the smaller the significance level, the greater the burden of proof needed to reject the null hypothesis and support the alternative hypothesis.
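The growing burden of proof can be seen by computing the cutoff a test statistic has to exceed at different significance levels. The sketch below assumes a one-sided z-test whose statistic is standard normal under the null hypothesis; `one_sided_critical_z` is a hypothetical helper written for this illustration.

```python
from statistics import NormalDist


def one_sided_critical_z(alpha):
    """Smallest z value that leads to rejecting H0 in an upper-tailed z-test."""
    if not 0 < alpha < 1:
        raise ValueError("alpha must lie strictly between 0 and 1")
    return NormalDist().inv_cdf(1 - alpha)


for alpha in (0.10, 0.05, 0.01):
    cutoff = one_sided_critical_z(alpha)
    print(f"alpha = {alpha:.2f}: reject H0 only if z > {cutoff:.3f}")
```

As the significance level shrinks from 0.10 to 0.01, the cutoff rises from roughly 1.28 to roughly 2.33, so the sample has to deviate more strongly from the null hypothesis before it is rejected.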

- Calculate the Test Statistics and Corresponding P-Value

Test statistics in hypothesis testing allow you to compare different groups between variables, while the p-value is the probability of obtaining sample statistics at least as extreme as yours if your null hypothesis is true. In this case, your test statistics can be built from the mean, median and similar quantities.

If your p-value is 0.65, for example, then it means that results at least as extreme as yours would occur about 65 in 100 times by pure chance if the null hypothesis were true. Use this formula to determine the p-value for your data:

- Draw Your Conclusions

After conducting a series of tests, you should be able to agree or refute the hypothesis based on feedback and insights from your sample data.

Applications of Hypothesis Testing in Research

Hypothesis testing isn’t only confined to numbers and calculations; it also has several real-life applications in business, manufacturing, advertising, and medicine.

In a factory or other manufacturing plants, hypothesis testing is an important part of quality and production control before the final products are approved and sent out to the consumer.

During ideation and strategy development, C-level executives use hypothesis testing to evaluate their theories and assumptions before any form of implementation. For example, they could leverage hypothesis testing to determine whether or not some new advertising campaign, marketing technique, etc. causes increased sales.

In addition, hypothesis testing is used during clinical trials to prove the efficacy of a drug or new medical method before its approval for widespread human usage.

What is an Example of Hypothesis Testing?

An employer claims that her workers are of above-average intelligence. She takes a random sample of 20 of them and gets the following results:

Mean IQ Scores: 110

Standard Deviation: 15

Mean Population IQ: 100

Step 1: Using the value of the mean population IQ, we establish the null hypothesis: the workers’ mean IQ is 100 (H0: μ = 100).

Step 2: State the alternative hypothesis: the workers’ mean IQ is greater than 100 (Ha: μ > 100).

Step 3: State the alpha level as 0.05 or 5%

Step 4: Find the rejection region area (given by your alpha level above) from the z-table. An area of .05 is equal to a z-score of 1.645.

Step 5: Calculate the test statistic using this formula:

Z = (110 – 100) ÷ (15 ÷ √20)

10 ÷ 3.35 = 2.98

If the value of the test statistic is higher than the critical value that bounds the rejection region, then you should reject the null hypothesis. If it is less, then you cannot reject the null.

In this case, 2.98 > 1.645 so we reject the null.
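The arithmetic in this example can be checked with a short script. It is a sketch that follows the example's own setup (a one-sided z-test that treats the sample standard deviation of 15 as known); the variable names are illustrative. With only 20 observations and an estimated standard deviation, a t-test would often be preferred in practice, but the z-test is kept here to mirror the steps above.

```python
from math import sqrt
from statistics import NormalDist

# Figures taken from the worked example above.
sample_mean = 110
population_mean = 100  # value claimed by H0
std_dev = 15
n = 20
alpha = 0.05

standard_error = std_dev / sqrt(n)
z = (sample_mean - population_mean) / standard_error

# One-sided (upper-tail) critical value and p-value from the standard normal.
critical_z = NormalDist().inv_cdf(1 - alpha)
p_value = 1 - NormalDist().cdf(z)

print(f"standard error = {standard_error:.2f}")
print(f"z = {z:.2f}, critical value = {critical_z:.3f}, p-value = {p_value:.4f}")
print("Reject H0" if z > critical_z else "Do not reject H0")
```

The script reaches the same decision by two equivalent routes: the statistic exceeds the critical value, and the p-value is below the alpha level.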

Importance/Benefits of Hypothesis Testing

The most significant benefit of hypothesis testing is that it allows you to evaluate the strength of your claim or assumption before acting on it. Hypothesis testing is also a standard, formal way to assess whether the data support a claim that something “is or is not”. Other benefits include:

- Hypothesis testing provides a reliable framework for making any data decisions for your population of interest.

- It helps the researcher to successfully extrapolate data from the sample to the larger population.

- Hypothesis testing allows the researcher to determine whether the data from the sample is statistically significant.

- Hypothesis testing is one of the most important processes for measuring the validity and reliability of outcomes in any systematic investigation.

- It helps to provide links to the underlying theory and specific research questions.

Criticism and Limitations of Hypothesis Testing

Several limitations of hypothesis testing can affect the quality of data you get from this process. Some of these limitations include:

- The interpretation of a p-value for observation depends on the stopping rule and definition of multiple comparisons. This makes it difficult to calculate since the stopping rule is subject to numerous interpretations, plus “multiple comparisons” are unavoidably ambiguous.

- Conceptual issues often arise in hypothesis testing, especially if the researcher merges Fisher and Neyman-Pearson’s methods which are conceptually distinct.

- In an attempt to focus on the statistical significance of the data, the researcher might ignore the estimation and confirmation by repeated experiments.

- Hypothesis testing can trigger publication bias, especially when it requires statistical significance as a criterion for publication.

- When used to detect whether a difference exists between groups, hypothesis testing can trigger absurd assumptions that affect the reliability of your observation.

Understanding Hypothesis Testing

Hypothesis testing is a fundamental statistical method employed in various fields, including data science, machine learning, and statistics, to make informed decisions based on empirical evidence. It involves formulating assumptions about population parameters using sample statistics and rigorously evaluating these assumptions against collected data. At its core, hypothesis testing is a systematic approach that allows researchers to assess the validity of a statistical claim about an unknown population parameter. This article sheds light on the significance of hypothesis testing and the critical steps involved in the process.

What is Hypothesis Testing?

A hypothesis is an assumption or idea, specifically a statistical claim about an unknown population parameter. For example, a judge assumes a person is innocent and verifies this by reviewing evidence and hearing testimony before reaching a verdict.

Hypothesis testing is a statistical method that is used to make a statistical decision using experimental data. A hypothesis is basically an assumption that we make about a population parameter. Hypothesis testing evaluates two mutually exclusive statements about a population to determine which statement is best supported by the sample data.

To test the validity of the claim or assumption about the population parameter:

- A sample is drawn from the population and analyzed.

- The results of the analysis are used to decide whether the claim is true or not.

Example: You say the average height in the class is 30, or that boys are taller than girls. Both of these are assumptions, and we need a statistical way to test them: a mathematical basis for concluding whether what we are assuming is supported by the data.

This structured approach to hypothesis testing in data science, machine learning, and statistics is crucial for making informed decisions based on data.

- By employing hypothesis testing in data analytics and other fields, practitioners can rigorously evaluate their assumptions and derive meaningful insights from their analyses.

- Understanding hypothesis generation and testing is also essential for effectively implementing statistical hypothesis testing in various applications.

Defining Hypotheses

- Null hypothesis (H0): In statistics, the null hypothesis is a general statement or default position that there is no relationship between two measured cases or no relationship among groups. In other words, it is a basic assumption made based on knowledge of the problem. Example: A company’s mean production is 50 units per day, i.e. H0: [Tex]\mu = 50[/Tex].

- Alternative hypothesis (H1): The alternative hypothesis is the hypothesis used in hypothesis testing that is contrary to the null hypothesis. Example: A company’s mean production is not equal to 50 units per day, i.e. H1: [Tex]\mu \ne 50[/Tex].

Key Terms of Hypothesis Testing

- Level of significance: The threshold used to decide whether to reject the null hypothesis. Since a decision based on a sample can never be 100% certain, we fix in advance the probability of rejecting a true null hypothesis that we are willing to tolerate. It is denoted by [Tex]\alpha[/Tex] and is usually set to 0.05 (5%), which corresponds to a 95% confidence level.

- P-value: The P-value, or calculated probability, is the probability of obtaining results at least as extreme as those observed when the null hypothesis (H 0 ) is true. If the P-value is less than or equal to the chosen significance level, you reject the null hypothesis, i.e. the sample supports the alternative hypothesis.

- Test Statistic: The test statistic is a numerical value calculated from sample data during a hypothesis test, used to determine whether to reject the null hypothesis. It is compared to a critical value or p-value to make decisions about the statistical significance of the observed results.

- Critical value : The critical value in statistics is a threshold or cutoff point used to determine whether to reject the null hypothesis in a hypothesis test.

- Degrees of freedom: Degrees of freedom describe how many values are free to vary when estimating a parameter. They are related to the sample size and determine the shape of the reference distribution, such as the t-distribution.
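To make the link between degrees of freedom and critical values concrete, here is a minimal sketch that assumes SciPy is installed. The chosen degrees of freedom are arbitrary illustrations, not values from this article.

```python
from scipy import stats

alpha = 0.05  # significance level for a two-tailed test

# Two-tailed critical values of the t-distribution for several
# (arbitrarily chosen) degrees of freedom.
t_critical = {df: stats.t.ppf(1 - alpha / 2, df) for df in (5, 10, 30, 1000)}

# Matching critical value of the standard normal distribution.
z_critical = stats.norm.ppf(1 - alpha / 2)

for df, value in t_critical.items():
    print(f"df={df:>4}: t critical = {value:.4f}")
print(f"normal : z critical = {z_critical:.4f}")
```

As the degrees of freedom grow, the t critical value shrinks toward the normal value of about 1.96, which is why small samples need stronger evidence to reject the null hypothesis.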

Hypothesis testing is an important procedure in statistics. It evaluates two mutually exclusive statements about a population to determine which is better supported by the sample data. When we say that findings are statistically significant, it is hypothesis testing that justifies the claim.

Understanding hypothesis testing in statistics is essential for data scientists and machine learning practitioners, as it provides a structured framework for generating and testing statistical hypotheses. The same methodology can be applied in Python, enabling data analysts to perform robust statistical analyses efficiently. When many hypotheses are tested at once, as is common in machine learning, multiple-testing techniques help researchers obtain more reliable results and avoid drawing false conclusions.

One tailed test focuses on one direction, either greater than or less than a specified value. We use a one-tailed test when there is a clear directional expectation based on prior knowledge or theory. The critical region is located on only one side of the distribution curve. If the sample falls into this critical region, the null hypothesis is rejected in favor of the alternative hypothesis.

One-Tailed Test

There are two types of one-tailed test:

- Left-Tailed (Left-Sided) Test: The alternative hypothesis asserts that the true parameter value is less than the value stated in the null hypothesis. Example: H 0 : [Tex]\mu \geq 50[/Tex] and H 1 : [Tex]\mu < 50[/Tex]

- Right-Tailed (Right-Sided) Test: The alternative hypothesis asserts that the true parameter value is greater than the value stated in the null hypothesis. Example: H 0 : [Tex]\mu \leq 50[/Tex] and H 1 : [Tex]\mu > 50[/Tex]

Two-Tailed Test

A two-tailed test considers both directions, greater than and less than a specified value. We use a two-tailed test when there is no specific directional expectation and we want to detect any significant difference.

Example: H 0 : [Tex]\mu = 50[/Tex] and H 1 : [Tex]\mu \neq 50[/Tex]
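The difference between the three kinds of test shows up in where the critical region sits. The sketch below uses only Python's standard library (`statistics.NormalDist`) and assumes a z-test at a significance level of 0.05. The variable names are illustrative.

```python
from statistics import NormalDist

alpha = 0.05
std_normal = NormalDist()  # standard normal: mean 0, standard deviation 1

# Left-tailed test: reject H0 when z falls below this (negative) cutoff.
left_critical = std_normal.inv_cdf(alpha)

# Right-tailed test: reject H0 when z rises above this cutoff.
right_critical = std_normal.inv_cdf(1 - alpha)

# Two-tailed test: alpha is split across both tails,
# so reject H0 when |z| exceeds this cutoff.
two_tailed_critical = std_normal.inv_cdf(1 - alpha / 2)

print(f"left-tailed : z < {left_critical:.3f}")
print(f"right-tailed: z > {right_critical:.3f}")
print(f"two-tailed  : |z| > {two_tailed_critical:.3f}")
```

Because a two-tailed test splits the significance level between both tails, its cutoff is further from zero than the one-tailed cutoff at the same alpha.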


In hypothesis testing, Type I and Type II errors are two possible errors that researchers can make when drawing conclusions about a population based on a sample of data. These errors are associated with the decisions made regarding the null hypothesis and the alternative hypothesis.

- Type I error: We reject the null hypothesis although it is true. The probability of a Type I error is denoted by alpha ( [Tex]\alpha[/Tex] ).

- Type II error: We fail to reject the null hypothesis although it is false. The probability of a Type II error is denoted by beta ( [Tex]\beta[/Tex] ).
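The two error probabilities can be computed directly for a simple case. The following sketch assumes a right-tailed z-test with hypothetical numbers (null mean 50, true mean 52, population standard deviation 5, sample size 25). None of these values come from the article.

```python
from math import sqrt
from statistics import NormalDist

# Hypothetical setup, chosen only for illustration.
mu_0 = 50.0     # mean claimed by H0
mu_true = 52.0  # assumed true mean when H1 holds
sigma = 5.0     # known population standard deviation
n = 25          # sample size
alpha = 0.05    # significance level

se = sigma / sqrt(n)  # standard error of the sample mean

# Right-tailed test: reject H0 when the sample mean exceeds this cutoff.
cutoff = NormalDist(mu_0, se).inv_cdf(1 - alpha)

# Type I error: rejecting H0 although the true mean is mu_0.
type_i = 1 - NormalDist(mu_0, se).cdf(cutoff)

# Type II error: failing to reject H0 although the true mean is mu_true.
type_ii = NormalDist(mu_true, se).cdf(cutoff)

power = 1 - type_ii  # probability of detecting the shift

print(f"alpha = {type_i:.3f}, beta = {type_ii:.3f}, power = {power:.3f}")
```

Lowering alpha moves the cutoff to the right, which reduces Type I errors but increases beta, so the two error types trade off against each other for a fixed sample size.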

Step 1: Define Null and Alternative Hypothesis

State the null hypothesis ( [Tex]H_0 [/Tex] ), representing no effect, and the alternative hypothesis ( [Tex]H_1 [/Tex] ), suggesting an effect or difference.

We first identify the question we want to answer, keeping in mind that the two hypotheses must contradict one another. Here we assume normally distributed data.

Step 2 – Choose significance level

Select a significance level ( [Tex]\alpha[/Tex] ), typically 0.05, as the threshold for rejecting the null hypothesis. It fixes how strong the evidence must be before we back up our claim. The significance level is chosen before the test is run, and the p-value computed from the data is later compared against it.

Step 3 – Collect and Analyze data.

Gather relevant data through observation or experimentation. Analyze the data using appropriate statistical methods to obtain a test statistic.

Step 4 – Calculate Test Statistic

In this step the data are evaluated and a score is computed based on their characteristics. The choice of test statistic depends on the type of hypothesis test being conducted.

There are various hypothesis tests, each appropriate for a different goal. The test could be a Z-test, Chi-square test, T-test, and so on.

- Z-test: The Z-statistic is commonly used when the population standard deviation is known.

- t-test: The t-statistic is more appropriate when the population standard deviation is unknown and the sample size is small.

- Chi-square test : Chi-square test is used for categorical data or for testing independence in contingency tables

- F-test : F-test is often used in analysis of variance (ANOVA) to compare variances or test the equality of means across multiple groups.

We have a small dataset, so a T-test is more appropriate for testing our hypothesis.

T-statistic is a measure of the difference between the means of two groups relative to the variability within each group. It is calculated as the difference between the sample means divided by the standard error of the difference. It is also known as the t-value or t-score.

Step 5 – Comparing Test Statistic

In this stage, we decide whether to reject or fail to reject the null hypothesis. There are two ways to make this decision.

Method A: Using Critical values

Comparing the test statistic with the tabulated critical value, we have:

- If Test Statistic > Critical Value: Reject the null hypothesis.

- If Test Statistic ≤ Critical Value: Fail to reject the null hypothesis.

This rule applies as written to right-tailed tests. For a two-tailed test, compare the absolute value of the test statistic with the critical value; for a left-tailed test, reject when the test statistic falls below the negative critical value.

Note: Critical values are predetermined thresholds used to make a decision in hypothesis testing. To determine them, we typically refer to a statistical distribution table, such as the normal distribution or t-distribution tables.
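Method A can be sketched in a few lines for a z-test. The helper name `decide_by_critical_value` and its `tail` argument are hypothetical, introduced only for this illustration. The critical values come from the standard library's `statistics.NormalDist` rather than a printed table.

```python
from statistics import NormalDist


def decide_by_critical_value(z: float, alpha: float = 0.05,
                             tail: str = "two-sided") -> str:
    """Compare a z-statistic with the critical value for the chosen tail."""
    std_normal = NormalDist()
    if tail == "two-sided":
        critical = std_normal.inv_cdf(1 - alpha / 2)
        reject = abs(z) > critical
    elif tail == "right":
        critical = std_normal.inv_cdf(1 - alpha)
        reject = z > critical
    elif tail == "left":
        critical = std_normal.inv_cdf(alpha)  # negative cutoff
        reject = z < critical
    else:
        raise ValueError("tail must be 'two-sided', 'right' or 'left'")
    return "Reject H0" if reject else "Fail to reject H0"


print(decide_by_critical_value(2.04))                # two-tailed
print(decide_by_critical_value(1.50))                # two-tailed
print(decide_by_critical_value(-1.80, tail="left"))  # left-tailed
```

For a t-test the same comparison applies, with the critical value taken from the t-distribution for the relevant degrees of freedom instead of the normal distribution.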

Method B: Using P-values

We can also come to a conclusion using the p-value:

- If the p-value is less than or equal to the significance level, i.e. ( [Tex]p \leq \alpha[/Tex] ), you reject the null hypothesis. This indicates that the observed results are unlikely to have occurred by chance alone, providing evidence in favor of the alternative hypothesis.

- If the p-value is greater than the significance level, i.e. ( [Tex]p > \alpha[/Tex] ), you fail to reject the null hypothesis. This suggests that the observed results are consistent with what would be expected under the null hypothesis.

Note: The p-value is the probability of obtaining a test statistic as extreme as, or more extreme than, the one observed in the sample, assuming the null hypothesis is true. To determine the p-value, we typically use statistical software or a statistical distribution table, such as the normal distribution or t-distribution tables.
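Method B can be sketched the same way for a z-test. The function name `z_p_value` is hypothetical, and the example statistic of 2.04 is just an illustrative input. The tail probabilities come from `statistics.NormalDist` in the standard library.

```python
from statistics import NormalDist


def z_p_value(z: float, tail: str = "two-sided") -> float:
    """Return the p-value of a z-statistic for the chosen alternative."""
    std_normal = NormalDist()
    if tail == "two-sided":
        # Probability of a value at least this far from 0 in either direction.
        return 2 * (1 - std_normal.cdf(abs(z)))
    if tail == "right":
        return 1 - std_normal.cdf(z)
    if tail == "left":
        return std_normal.cdf(z)
    raise ValueError("tail must be 'two-sided', 'right' or 'left'")


alpha = 0.05
p = z_p_value(2.04)
decision = "Reject H0" if p <= alpha else "Fail to reject H0"
print(f"p-value = {p:.4f} -> {decision}")
```

Both methods always agree: a statistic beyond the critical value has a p-value below alpha, and the reverse.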

Step 6 – Interpret the Results

Finally, we draw the conclusion of our experiment using Method A or Method B.

Calculating test statistic

To validate our hypothesis about a population parameter we use statistical functions. For normally distributed data, we use the z-score, the p-value, and the level of significance (alpha) to build evidence for our hypothesis.

1. Z-statistics:

Used when the population mean and standard deviation are known.

[Tex]z = \frac{\bar{x} - \mu}{\frac{\sigma}{\sqrt{n}}}[/Tex]

- [Tex]\bar{x}[/Tex] is the sample mean,

- [Tex]\mu[/Tex] represents the population mean,

- [Tex]\sigma[/Tex] is the population standard deviation,

- and n is the size of the sample.
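The formula translates directly into code. In this small sketch the function name `z_statistic` and the input numbers are hypothetical and serve only to show the arithmetic.

```python
from math import sqrt


def z_statistic(sample_mean: float, pop_mean: float,
                pop_std: float, n: int) -> float:
    """z = (x_bar - mu) / (sigma / sqrt(n))."""
    standard_error = pop_std / sqrt(n)
    return (sample_mean - pop_mean) / standard_error


# Hypothetical inputs: sample mean 52, hypothesized mean 50,
# population standard deviation 5, sample size 25.
z = z_statistic(sample_mean=52.0, pop_mean=50.0, pop_std=5.0, n=25)
print(z)
```

Here the standard error is 5 / 5 = 1, so the sample mean lies two standard errors above the hypothesized mean.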

2. T-Statistics

The t-test is typically used when the sample is small (n < 30) and the population standard deviation is unknown.

The t-statistic is given by:

[Tex]t = \frac{\bar{x} - \mu}{s / \sqrt{n}}[/Tex]

- t = t-score,

- x̄ = sample mean

- μ = population mean,

- s = standard deviation of the sample,

- n = sample size
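As a check on the formula, the sketch below computes the one-sample t-statistic by hand and compares it with `scipy.stats.ttest_1samp`. The sample values and the hypothesized mean of 50 are made up for illustration, and SciPy is assumed to be installed.

```python
from math import sqrt
from statistics import mean, stdev

from scipy import stats

# Hypothetical small sample and hypothesized population mean.
sample = [48, 52, 51, 49, 53, 50, 47, 54]
mu_0 = 50

n = len(sample)
x_bar = mean(sample)
s = stdev(sample)  # sample standard deviation (divides by n - 1)

# Manual t-statistic from the formula above.
t_manual = (x_bar - mu_0) / (s / sqrt(n))

# Same test via SciPy (two-sided by default), with n - 1 degrees of freedom.
result = stats.ttest_1samp(sample, popmean=mu_0)

print(f"manual t = {t_manual:.4f}")
print(f"scipy  t = {result.statistic:.4f}, p-value = {result.pvalue:.4f}")
```

The key detail is that `s` uses the n - 1 denominator. Using the population formula (dividing by n) would give a different t value from SciPy's.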

3. Chi-Square Test

The Chi-Square test for independence is used for categorical (non-normally distributed) data:

[Tex]\chi^2 = \sum \frac{(O_{ij} - E_{ij})^2}{E_{ij}}[/Tex]

- [Tex]O_{ij}[/Tex] is the observed frequency in cell [Tex]{ij}[/Tex]

- i and j are the row and column indices, respectively.

- [Tex]E_{ij}[/Tex] is the expected frequency in cell [Tex]{ij}[/Tex] , calculated as : [Tex]\frac{{\text{{Row total}} \times \text{{Column total}}}}{{\text{{Total observations}}}}[/Tex]
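The expected frequencies and the statistic can be computed as follows. The 2x3 contingency table is invented for illustration, and NumPy and SciPy are assumed to be available. The manual result is compared with `scipy.stats.chi2_contingency`, with `correction=False` so that no continuity correction is applied.

```python
import numpy as np
from scipy import stats

# Hypothetical 2x3 contingency table of observed counts.
observed = np.array([[20, 30, 50],
                     [30, 30, 40]])

row_totals = observed.sum(axis=1, keepdims=True)  # shape (2, 1)
col_totals = observed.sum(axis=0, keepdims=True)  # shape (1, 3)
total = observed.sum()

# Expected count per cell: row total * column total / total observations.
expected = row_totals * col_totals / total

# Chi-square statistic summed over all cells.
chi2_manual = ((observed - expected) ** 2 / expected).sum()

# The same test via SciPy.
chi2_scipy, p_value, dof, expected_scipy = stats.chi2_contingency(
    observed, correction=False
)

print(f"manual chi2 = {chi2_manual:.4f}")
print(f"scipy  chi2 = {chi2_scipy:.4f}, dof = {dof}, p-value = {p_value:.4f}")
```

The degrees of freedom equal (rows - 1) x (columns - 1), which is 2 for this table.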

Let’s examine hypothesis testing using two real-life situations.

Case A: Does a New Drug Affect Blood Pressure?

Imagine a pharmaceutical company has developed a new drug that they believe can effectively lower blood pressure in patients with hypertension. Before bringing the drug to market, they need to conduct a study to assess its impact on blood pressure.

- Before Treatment: 120, 122, 118, 130, 125, 128, 115, 121, 123, 119

- After Treatment: 115, 120, 112, 128, 122, 125, 110, 117, 119, 114

Step 1 : Define the Hypothesis

- Null Hypothesis (H 0 ): The new drug has no effect on blood pressure.

- Alternate Hypothesis (H 1 ): The new drug has an effect on blood pressure.

Step 2: Define the Significance level

Let’s set the significance level at 0.05: we reject the null hypothesis if the evidence suggests less than a 5% chance of observing the results due to random variation alone.

Step 3 : Compute the test statistic

Using a paired T-test, analyze the data to obtain a test statistic and a p-value.

The test statistic (here, the T-statistic) is calculated from the differences between blood pressure measurements after and before treatment.

[Tex]t = \frac{m}{s / \sqrt{n}}[/Tex]

- m = mean of the differences [Tex]d_i = X_{\text{after}, i} - X_{\text{before}, i}[/Tex],

- s = standard deviation of the differences [Tex]d_i[/Tex],

- n = sample size.

Here m = -3.9, s ≈ 1.37 and n = 10, so the formula for the paired t-test gives

[Tex]t = \frac{-3.9}{1.37 / \sqrt{10}} \approx -9[/Tex]

Step 4: Find the p-value

The calculated t-statistic is -9 with df = n - 1 = 9 degrees of freedom. You can find the p-value using statistical software or a t-distribution table.

Thus, the two-tailed p-value = 8.538051223166285e-06
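One way to obtain this p-value with software is the survival function of the t-distribution. This is a minimal sketch assuming SciPy is available. It takes the t-statistic and degrees of freedom from the step above and doubles the upper-tail probability because the test is two-tailed.

```python
from scipy import stats

t_stat = -9.0  # t-statistic from the paired test above
df = 9         # degrees of freedom, n - 1

# sf(x) = 1 - cdf(x) is the upper-tail probability; doubling it
# gives the two-tailed p-value for a symmetric distribution.
p_two_tailed = 2 * stats.t.sf(abs(t_stat), df)

print(p_two_tailed)
```

Using `sf` instead of `1 - cdf` avoids loss of precision when the tail probability is very small, as it is here.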

Step 5: Result

- If the p-value is less than or equal to 0.05, the researchers reject the null hypothesis.

- If the p-value is greater than 0.05, they fail to reject the null hypothesis.

Conclusion: Since the p-value (8.538051223166285e-06) is less than the significance level (0.05), the researchers reject the null hypothesis. There is statistically significant evidence that the average blood pressure before and after treatment with the new drug is different.

Python Implementation of Case A

Let’s implement this hypothesis test in Python, where we test whether a new drug affects blood pressure. For this example, we will use a paired T-test from the scipy.stats library.

SciPy is a Python library for scientific and mathematical computation.

We will implement our first real-life problem in Python.
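A minimal sketch of such an implementation is given here, assuming NumPy and SciPy are installed. It uses the before and after measurements listed in Case A, runs `scipy.stats.ttest_rel` on them, and repeats the calculation by hand from the paired differences. The variable names are illustrative, and the printed values may differ from hand calculations in the last decimal places because of floating-point rounding.

```python
import numpy as np
from scipy import stats

# Blood pressure measurements from Case A.
before = np.array([120, 122, 118, 130, 125, 128, 115, 121, 123, 119])
after = np.array([115, 120, 112, 128, 122, 125, 110, 117, 119, 114])

alpha = 0.05  # significance level

# Paired t-test from SciPy (after minus before, two-sided by default).
t_scipy, p_value = stats.ttest_rel(after, before)

# Manual calculation from the paired differences.
diff = after - before
m = diff.mean()       # mean of the differences
s = diff.std(ddof=1)  # sample standard deviation of the differences
n = len(diff)
t_manual = m / (s / np.sqrt(n))

decision = "Reject" if p_value <= alpha else "Fail to reject"

print("T-statistic (from scipy):", t_scipy)
print("P-value (from scipy):", p_value)
print("T-statistic (calculated manually):", t_manual)
print(f"Decision: {decision} the null hypothesis at alpha={alpha}.")
```

Passing `ddof=1` matters: NumPy's default `std` divides by n, whereas the paired t-test uses the sample standard deviation with n - 1 in the denominator.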

Output:

T-statistic (from scipy): -9.0
P-value (from scipy): 8.538051223166285e-06
T-statistic (calculated manually): -9.0
Decision: Reject the null hypothesis at alpha=0.05.
Conclusion: There is statistically significant evidence that the average blood pressure before and after treatment with the new drug is different.

In the above example, the T-statistic of approximately -9 and the extremely small p-value make a strong case for rejecting the null hypothesis at a significance level of 0.05.

- The results suggest that the new drug has a statistically significant effect on blood pressure.

- The negative T-statistic indicates that the mean blood pressure after treatment is lower than the mean before treatment.

Case B : Cholesterol level in a population

Data: A sample of 25 individuals is taken, and their cholesterol levels are measured.

Cholesterol Levels (mg/dL): 205, 198, 210, 190, 215, 205, 200, 192, 198, 205, 198, 202, 208, 200, 205, 198, 205, 210, 192, 205, 198, 205, 210, 192, 205.

Hypothesized population mean (μ) = 200 mg/dL

Population Standard Deviation (σ): 5 mg/dL (given for this problem)

Step 1: Define the Hypothesis

- Null Hypothesis (H 0 ): The average cholesterol level in a population is 200 mg/dL.

- Alternate Hypothesis (H 1 ): The average cholesterol level in a population is different from 200 mg/dL.

Step 2: Define the Significance Level

As the direction of deviation is not given, we assume a two-tailed test. Based on the normal distribution table, the critical values for a significance level of 0.05 (two-tailed) are approximately -1.96 and 1.96.

Step 3: Compute the Test Statistic

The sample mean of the 25 measurements is 202.04 mg/dL. The test statistic is calculated using the z formula, Z = [Tex](202.04 - 200) / (5 \div \sqrt{25})[/Tex], which gives Z ≈ 2.04.

Step 4: Result

Since the absolute value of the test statistic (2.04) is greater than the critical value (1.96), we reject the null hypothesis and conclude that there is statistically significant evidence that the average cholesterol level in the population is different from 200 mg/dL.

Python Implementation of Case B
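A minimal sketch of the z-test for Case B follows, assuming NumPy and SciPy are installed. It uses the 25 cholesterol measurements, the hypothesized mean of 200 mg/dL and the given population standard deviation of 5 mg/dL. The variable names are illustrative.

```python
import numpy as np
from scipy import stats

# Cholesterol levels (mg/dL) from Case B.
cholesterol = np.array([
    205, 198, 210, 190, 215, 205, 200, 192, 198, 205,
    198, 202, 208, 200, 205, 198, 205, 210, 192, 205,
    198, 205, 210, 192, 205,
])

mu_0 = 200.0   # hypothesized population mean
sigma = 5.0    # population standard deviation (given)
alpha = 0.05   # significance level, two-tailed

n = len(cholesterol)
sample_mean = cholesterol.mean()

# z-statistic: distance of the sample mean from mu_0 in standard errors.
z = (sample_mean - mu_0) / (sigma / np.sqrt(n))

# Two-tailed critical value and p-value from the standard normal.
z_critical = stats.norm.ppf(1 - alpha / 2)
p_value = 2 * stats.norm.sf(abs(z))

print(f"sample mean = {sample_mean:.2f}, z = {z:.2f}, p-value = {p_value:.4f}")
if abs(z) > z_critical:
    print("Reject the null hypothesis.")
else:
    print("Fail to reject the null hypothesis.")
```

The statistic is only slightly beyond the critical value, so the conclusion is sensitive to the assumed population standard deviation.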

Reject the null hypothesis. There is statistically significant evidence that the average cholesterol level in the population is different from 200 mg/dL.

Although hypothesis testing is a useful technique in data science, it does not offer a comprehensive grasp of the topic being studied.

- Lack of Comprehensive Insight: Hypothesis testing in data science often focuses on specific hypotheses, which may not fully capture the complexity of the phenomena being studied.

- Dependence on Data Quality: The accuracy of hypothesis testing results relies heavily on the quality of available data. Inaccurate data can lead to incorrect conclusions, particularly in hypothesis testing in machine learning.

- Overlooking Patterns: Sole reliance on hypothesis testing can result in the omission of significant patterns or relationships in the data that are not captured by the tested hypotheses.

- Contextual Limitations: Hypothesis testing in statistics may not reflect the broader context, leading to oversimplification of results.

- Complementary Methods Needed: To gain a more holistic understanding, it’s essential to complement hypothesis testing with other analytical approaches, especially in data analytics and data mining.

- Misinterpretation Risks: Poorly formulated hypotheses or inappropriate statistical methods can lead to misinterpretation, emphasizing the need for careful consideration in hypothesis testing in Python and related analyses.

- Multiple Hypothesis Testing Challenges: Multiple hypothesis testing in machine learning poses additional challenges, as it can increase the likelihood of Type I errors, requiring adjustments to maintain validity.
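To illustrate the last point, the sketch below applies the Bonferroni correction, one common adjustment for multiple testing that is swapped in here as an example and is not named in the article. The p-values are hypothetical, and the helper name `bonferroni_reject` is illustrative.

```python
def bonferroni_reject(p_values, alpha=0.05):
    """Return True for each test whose H0 is rejected after Bonferroni correction."""
    adjusted_alpha = alpha / len(p_values)  # stricter per-test threshold
    return [p <= adjusted_alpha for p in p_values]


# Hypothetical p-values from five separate tests.
p_values = [0.001, 0.012, 0.03, 0.04, 0.20]
alpha = 0.05

naive = [p <= alpha for p in p_values]           # no correction
corrected = bonferroni_reject(p_values, alpha)   # with correction

# Chance of at least one false positive across five independent tests
# when every null hypothesis is true and no correction is applied.
family_wise_error = 1 - (1 - alpha) ** len(p_values)

print("naive    :", naive)
print("corrected:", corrected)
print(f"uncorrected family-wise error rate = {family_wise_error:.3f}")
```

Bonferroni is simple but conservative. With many tests it can miss real effects, which is why less strict procedures are sometimes preferred.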

Hypothesis testing is a cornerstone of statistical analysis, allowing data scientists to navigate uncertainties and draw credible inferences from sample data. By defining null and alternative hypotheses, selecting significance levels, and employing statistical tests, researchers can validate their assumptions effectively.

This article emphasizes the distinction between Type I and Type II errors, highlighting their relevance in hypothesis testing in data science and machine learning. A practical example involving a paired T-test to assess a new drug’s effect on blood pressure underscores the importance of statistical rigor in data-driven decision-making.

Ultimately, understanding hypothesis testing in statistics, alongside its applications in data mining, data analytics, and hypothesis testing in Python, enhances analytical frameworks and supports informed decision-making.

Understanding Hypothesis Testing- FAQs

What is hypothesis testing in data science?

In data science, hypothesis testing is used to validate assumptions or claims about data. It helps data scientists determine whether observed patterns are statistically significant or could have occurred by chance.

How does hypothesis testing work in machine learning?

In machine learning, hypothesis testing helps assess the effectiveness of models. For example, it can be used to compare the performance of different algorithms or to evaluate whether a new feature significantly improves a model’s accuracy.

What is hypothesis testing in ML?

It is a statistical method for evaluating the performance and validity of machine learning models. It tests specific hypotheses about model behavior, such as whether features influence predictions or whether a model generalizes well to unseen data.

What is the difference between Pytest and hypothesis in Python?

Pytest is a general-purpose testing framework for Python code, while Hypothesis is a property-based testing framework for Python that generates test cases from specified properties of the code.

What is the difference between hypothesis testing and data mining?

Hypothesis testing focuses on evaluating specific claims or hypotheses about a dataset, while data mining involves exploring large datasets to discover patterns, relationships, or insights without predefined hypotheses.

How is hypothesis generation used in business analytics?

In business analytics, hypothesis generation involves formulating assumptions or predictions based on available data. These hypotheses can then be tested using statistical methods to inform decision-making and strategy.

What is the significance level in hypothesis testing?

The significance level, often denoted as alpha (α), is the threshold for deciding whether to reject the null hypothesis. Common significance levels are 0.05, 0.01, and 0.10; each is the probability of making a Type I error that the analyst is willing to accept.


- Chi-square test in Machine Learning Chi-Square test is a statistical method crucial for analyzing associations in categorical data. Its applications span various fields, aiding researchers in understanding relationships between factors. This article elucidates Chi-Square types, steps for implementation, and its role in feature selecti 11 min read

- Understanding Hypothesis Testing Hypothesis testing is a fundamental statistical method employed in various fields, including data science, machine learning, and statistics, to make informed decisions based on empirical evidence. It involves formulating assumptions about population parameters using sample statistics and rigorously 15+ min read

Data Preprocessing

- ML | Data Preprocessing in Python In order to derive knowledge and insights from data, the area of data science integrates statistical analysis, machine learning, and computer programming. It entails gathering, purifying, and converting unstructured data into a form that can be analysed and visualised. Data scientists process and an 7 min read

- ML | Overview of Data Cleaning Data cleaning is one of the important parts of machine learning. It plays a significant part in building a model. In this article, we'll understand Data cleaning, its significance and Python implementation. What is Data Cleaning?Data cleaning is a crucial step in the machine learning (ML) pipeline, 15 min read

- ML | Handling Missing Values Missing values are a common issue in machine learning. This occurs when a particular variable lacks data points, resulting in incomplete information and potentially harming the accuracy and dependability of your models. It is essential to address missing values efficiently to ensure strong and impar 12 min read

- Detect and Remove the Outliers using Python Outliers, deviating significantly from the norm, can distort measures of central tendency and affect statistical analyses. The piece explores common causes of outliers, from errors to intentional introduction, and highlights their relevance in outlier mining during data analysis. The article delves 10 min read

Data Transformation

- Data Normalization Machine Learning Normalization is an essential step in the preprocessing of data for machine learning models, and it is a feature scaling technique. Normalization is especially crucial for data manipulation, scaling down, or up the range of data before it is utilized for subsequent stages in the fields of soft compu 9 min read

- Sampling distribution Using Python There are different types of distributions that we study in statistics like normal/gaussian distribution, exponential distribution, binomial distribution, and many others. We will study one such distribution today which is Sampling Distribution. Let's say we have some data then if we sample some fin 3 min read

Time Series Data Analysis

- Data Mining - Time-Series, Symbolic and Biological Sequences Data Data mining refers to extracting or mining knowledge from large amounts of data. In other words, Data mining is the science, art, and technology of discovering large and complex bodies of data in order to discover useful patterns. Theoreticians and practitioners are continually seeking improved tech 3 min read

- Basic DateTime Operations in Python Python has an in-built module named DateTime to deal with dates and times in numerous ways. In this article, we are going to see basic DateTime operations in Python. There are six main object classes with their respective components in the datetime module mentioned below: datetime.datedatetime.timed 12 min read

- Time Series Analysis & Visualization in Python Every dataset has distinct qualities that function as essential aspects in the field of data analytics, providing insightful information about the underlying data. Time series data is one kind of dataset that is especially important. This article delves into the complexities of time series datasets, 11 min read

- How to deal with missing values in a Timeseries in Python? It is common to come across missing values when working with real-world data. Time series data is different from traditional machine learning datasets because it is collected under varying conditions over time. As a result, different mechanisms can be responsible for missing records at different tim 10 min read

- How to calculate MOVING AVERAGE in a Pandas DataFrame? Calculating the moving average in a Pandas DataFrame is used for smoothing time series data and identifying trends. The moving average, also known as the rolling mean, helps reduce noise and highlight significant patterns by averaging data points over a specific window. In Pandas, this can be achiev 7 min read

- What is a trend in time series? Time series data is a sequence of data points that measure some variable over ordered period of time. It is the fastest-growing category of databases as it is widely used in a variety of industries to understand and forecast data patterns. So while preparing this time series data for modeling it's i 3 min read

- How to Perform an Augmented Dickey-Fuller Test in R Augmented Dickey-Fuller Test: It is a common test in statistics and is used to check whether a given time series is at rest. A given time series can be called stationary or at rest if it doesn't have any trend and depicts a constant variance over time and follows autocorrelation structure over a per 3 min read

- AutoCorrelation Autocorrelation is a fundamental concept in time series analysis. Autocorrelation is a statistical concept that assesses the degree of correlation between the values of variable at different time points. The article aims to discuss the fundamentals and working of Autocorrelation. Table of Content Wh 10 min read

Case Studies and Projects

- Top 8 Free Dataset Sources to Use for Data Science Projects Did you think data is only for big companies and corporations to analyze and obtain business insights? No, data is also fun! There is nothing more interesting than analyzing a data set to find the correlations between the data and obtain unique insights. It’s almost like a mystery game where the dat 7 min read

- Step by Step Predictive Analysis - Machine Learning Predictive analytics involves certain manipulations on data from existing data sets with the goal of identifying some new trends and patterns. These trends and patterns are then used to predict future outcomes and trends. By performing predictive analysis, we can predict future trends and performanc 3 min read

- 6 Tips for Creating Effective Data Visualizations The reality of things has completely changed, making data visualization a necessary aspect when you intend to make any decision that impacts your business growth. Data is no longer for data professionals; it now serves as the center of all decisions you make on your daily operations. It's vital to e 6 min read

NCBI Bookshelf. A service of the National Library of Medicine, National Institutes of Health.

StatPearls [Internet]. Treasure Island (FL): StatPearls Publishing; 2024 Jan-.

Hypothesis testing, p values, confidence intervals, and significance.

Jacob Shreffler; Martin R. Huecker.

Last Update: March 13, 2023.

- Definition/Introduction

Medical providers often rely on evidence-based medicine to guide decision-making in practice. Often a research hypothesis is tested with results provided, typically with p values, confidence intervals, or both. Additionally, statistical or research significance is estimated or determined by the investigators. Unfortunately, healthcare providers may have different comfort levels in interpreting these findings, which may affect the adequate application of the data.

- Issues of Concern

Without a foundational understanding of hypothesis testing, p values, confidence intervals, and the difference between statistical and clinical significance, healthcare providers may be unable to make clinical decisions without relying purely on the level of significance deemed appropriate by the research investigators. Therefore, an overview of these concepts is provided to allow medical professionals to use their expertise to determine if results are reported sufficiently and if the study outcomes are clinically appropriate to be applied in healthcare practice.

Hypothesis Testing

Investigators conducting studies need research questions and hypotheses to guide analyses. Starting with broad research questions (RQs), investigators then identify a gap in current clinical practice or research. Any research problem or statement is grounded in a better understanding of relationships between two or more variables. For this article, we will use the following research question example:

Research Question: Is Drug 23 an effective treatment for Disease A?

Research questions do not directly imply specific guesses or predictions; we must formulate research hypotheses. A hypothesis is a predetermined declaration regarding the research question in which the investigator(s) makes a precise, educated guess about a study outcome. This is sometimes called the alternative hypothesis and ultimately allows the researcher to take a stance based on experience or insight from medical literature. An example of a hypothesis is below.

Research Hypothesis: Drug 23 will significantly reduce symptoms associated with Disease A compared to Drug 22.

The null hypothesis states that there is no statistical difference between groups based on the stated research hypothesis.
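One hedged way to write this pair of hypotheses in symbols is sketched below. It assumes the outcome is the mean reduction in symptoms in each treatment group; the symbols \mu_{23} and \mu_{22} (mean reduction under Drug 23 and Drug 22) are notation introduced here for illustration and do not appear in the original article.

```latex
H_0 : \mu_{23} = \mu_{22}
\qquad \text{versus} \qquad
H_1 : \mu_{23} > \mu_{22}
```

A two-sided alternative, H_1 : \mu_{23} \neq \mu_{22}, would instead test for a difference in either direction.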

Null Hypothesis: There are no significant differences in the reduction of symptoms for Disease A between Drug 23 and Drug 22.

The null hypothesis is deemed true until a study presents significant data to support rejecting it. Based on the results, the investigators will either reject the null hypothesis (if they found significant differences or associations) or fail to reject the null hypothesis (if they could not provide evidence of significant differences or associations).

Researchers should be aware of journal recommendations when considering how to report p values, and manuscripts should remain internally consistent.

Regarding p values, as the number of individuals enrolled in a study (the sample size) increases, the likelihood of finding a statistically significant effect increases. With very large sample sizes, the p value can be very low even when the difference between groups is small.
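The effect of sample size on the p value, and the resulting decision to reject or fail to reject the null hypothesis, can be illustrated with a minimal Python sketch. It is not the article's method: it assumes a two-sided z-test with a known common standard deviation, a significance threshold of 0.05, and made-up numbers for the difference in symptom scores between Drug 23 and Drug 22. The function and variable names are hypothetical.

```python
from math import sqrt
from statistics import NormalDist

ALPHA = 0.05      # assumed significance threshold (not taken from the article)
MEAN_DIFF = 0.5   # hypothetical difference in mean symptom score between drugs
SD = 10.0         # hypothetical common standard deviation of the symptom score


def two_sample_z_p_value(mean_diff, sd, n_per_group):
    """Two-sided p value for a difference in means between two equal-sized
    groups, assuming a known common standard deviation (z-test)."""
    standard_error = sd * sqrt(2.0 / n_per_group)
    z = mean_diff / standard_error
    # Probability of a result at least this extreme in either direction
    # if the null hypothesis of no difference were true.
    return 2.0 * NormalDist().cdf(-abs(z))


def decision(p_value, alpha=ALPHA):
    """Return the hypothesis-test decision for a given p value."""
    return "reject H0" if p_value < alpha else "fail to reject H0"


# Same observed difference, increasing sample size per group.
results = {}
for n in (50, 500, 5000, 50000):
    p = two_sample_z_p_value(MEAN_DIFF, SD, n)
    results[n] = (p, decision(p))
    print(f"n per group = {n:>6}: p = {p:.4g} -> {decision(p)}")
```

The observed difference is identical in every row; only the sample size changes. In this sketch the same small difference moves from "fail to reject" to "reject" as enrollment grows, which is why a low p value on its own does not indicate that an effect is large.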