Experimental Psychology: 10 Examples & Definition

Dave Cornell (PhD)

Dr. Cornell has worked in education for more than 20 years. His work has involved designing teacher certification for Trinity College in London and in-service training for state governments in the United States. He has trained kindergarten teachers in 8 countries and helped businessmen and women open baby centers and kindergartens in 3 countries.

Chris Drew (PhD)

This article was peer-reviewed and edited by Chris Drew (PhD). The review process on Helpful Professor involves having a PhD level expert fact check, edit, and contribute to articles. Reviewers ensure all content reflects expert academic consensus and is backed up with reference to academic studies. Dr. Drew has published over 20 academic articles in scholarly journals. He is the former editor of the Journal of Learning Development in Higher Education and holds a PhD in Education from ACU.

Experimental psychology refers to studying psychological phenomena using scientific methods. Originally, the primary scientific method involved manipulating one variable and observing systematic changes in another variable.

Today, psychologists utilize several types of scientific methodologies.

Experimental psychology examines a wide range of psychological phenomena, including memory, sensation and perception, cognitive processes, motivation, emotion, and developmental processes, as well as the neurophysiological concomitants of each of these subjects.

Studies are conducted on both animal and human participants, and must comply with stringent requirements and controls regarding the ethical treatment of both.

Definition of Experimental Psychology

Experimental psychology is a branch of psychology that utilizes scientific methods to investigate the mind and behavior.

It involves the systematic and controlled study of human and animal behavior through observation and experimentation.

Experimental psychologists design and conduct experiments to understand cognitive processes, perception, learning, memory, emotion, and many other aspects of psychology. They often manipulate variables (independent variables) to see how this affects behavior or mental processes (dependent variables).

The findings from experimental psychology research are often used to better understand human behavior and can be applied in a range of contexts, such as education, health, business, and more.

Experimental Psychology Examples

1. The Puzzle Box Studies (Thorndike, 1898) Placing different cats in a box that can only be escaped by pulling a cord, and then taking detailed notes on how long it took for them to escape allowed Edward Thorndike to derive the Law of Effect: actions followed by positive consequences are more likely to occur again, and actions followed by negative consequences are less likely to occur again (Thorndike, 1898).

2. Reinforcement Schedules (Skinner, 1956) By placing rats in a Skinner Box and changing when and how often the rats are rewarded for pressing a lever, it is possible to identify how each schedule results in different behavior patterns (Skinner, 1956). This led to a wide range of theoretical ideas around how rewards and consequences can shape the behaviors of both animals and humans.

3. Observational Learning (Bandura, 1980) Some children watch a video of an adult punching and kicking a Bobo doll. Other children watch a video in which the adult plays nicely with the doll. By carefully observing the children’s behavior later when in a room with a Bobo doll, researchers can determine if television violence affects children’s behavior (Bandura, 1980).

4. The Fallibility of Memory (Loftus & Palmer, 1974) A group of participants watch the same video of two cars having an accident. Afterward, some are asked to estimate the rate of speed the cars were going when they “smashed” into each other. Other participants are asked to estimate the rate of speed the cars were going when they “bumped” into each other. Changing the phrasing of the question changes the memory of the eyewitness.

5. Intrinsic Motivation in the Classroom (Dweck, 1990) To investigate the role of autonomy in intrinsic motivation, half of the students are told they are “free to choose” which tasks to complete. The other half of the students are told they “must choose” some of the tasks. Researchers then carefully observe how long the students engage in the tasks and later ask them whether they enjoyed doing the tasks.

6. Systematic Desensitization (Wolpe, 1958) A clinical psychologist carefully documents his treatment of a patient’s social phobia with progressive relaxation. At first, the patient is trained to monitor, tense, and relax various muscle groups while viewing photos of parties. Weeks later, they approach a stranger to ask for directions, initiate a conversation on a crowded bus, and attend a small social gathering. The therapist’s notes are transcribed into a scientific report and published in a peer-reviewed journal.

7. Study of Remembering (Bartlett, 1932) Bartlett’s work is a seminal study in the field of memory, where he used the concept of “schema” to describe an organized pattern of thought or behavior. He conducted a series of experiments using folk tales to show that memory recall is influenced by cultural schemas and personal experiences.

8. Study of Obedience (Milgram, 1963) This famous study explored the conflict between obedience to authority and personal conscience. Milgram found that a majority of participants were willing to administer what they believed were harmful electric shocks to a stranger when instructed by an authority figure, highlighting the power of authority and situational factors in driving behavior.

9. Pavlov’s Dog Study (Pavlov, 1927) Ivan Pavlov, a Russian physiologist, conducted a series of experiments that became a cornerstone in the field of experimental psychology. Pavlov noticed that dogs would salivate when they saw food. He then began to ring a bell each time he presented the food to the dogs. After a while, the dogs began to salivate merely at the sound of the bell. This experiment demonstrated the principle of “classical conditioning.”

10. Piaget’s Stages of Development (Piaget, 1958) Jean Piaget proposed a theory of cognitive development in children that consists of four distinct stages: from the sensorimotor stage (birth to 2 years), where children learn about the world through their senses and motor activities, through to the formal operational stage (12 years and beyond), where abstract reasoning and hypothetical thinking develop. Piaget’s theory is an example of experimental psychology as it was developed through systematic observation and experimentation on children’s problem-solving behaviors.

Types of Research Methodologies in Experimental Psychology

Researchers have used several different types of research methodologies since the early days of Wundt (1832-1920).

1. The Experiment

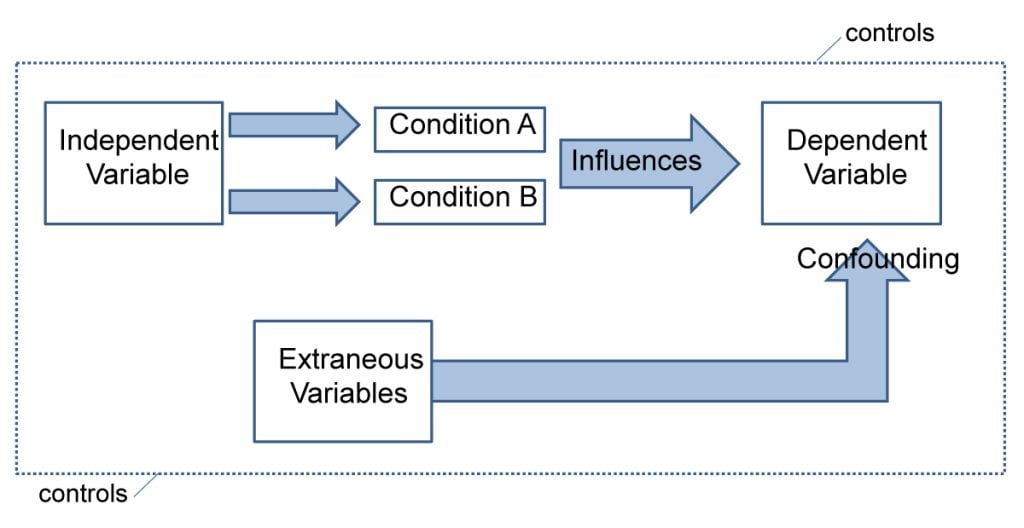

The experiment involves the researcher manipulating the level of one variable, called the Independent Variable (IV), and then observing changes in another variable, called the Dependent Variable (DV).

The researcher is interested in determining if the IV causes changes in the DV. For example, does television violence make children more aggressive?

So, some of the children in the study, called research participants, will watch a show with TV violence. They form the treatment group. Others will watch a show with no TV violence. They form the control group.

So, there are two levels of the IV: violence and no violence. Next, children will be observed to see if they act more aggressively. This is the DV.

If TV violence makes children more aggressive, then the children that watched the violent show will be more aggressive than the children that watched the non-violent show.

A key requirement of the experiment is random assignment. Each research participant is assigned to one of the two groups in a way that makes it a completely random process. This means that each group will have a mix of children: different personality types, diverse family backgrounds, and a range of intelligence levels.
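Random assignment can be sketched in a few lines of Python. This is only an illustration, not a procedure taken from any of the studies cited here: the function name `randomly_assign`, the participant IDs, and the fixed seed are all assumptions made for the example.

```python
import random


def randomly_assign(participants, groups=("treatment", "control"), seed=None):
    """Shuffle the participants, then deal them into the groups in turn."""
    rng = random.Random(seed)      # seeded generator so the example is repeatable
    shuffled = list(participants)  # copy so the caller's list is left untouched
    rng.shuffle(shuffled)
    assignment = {group: [] for group in groups}
    for index, participant in enumerate(shuffled):
        # Dealing round-robin after a shuffle keeps the group sizes equal.
        assignment[groups[index % len(groups)]].append(participant)
    return assignment


# Hypothetical participant IDs, invented for this sketch.
children = [f"child_{n:02d}" for n in range(1, 21)]
assignment = randomly_assign(children, seed=42)
print(len(assignment["treatment"]), len(assignment["control"]))
```

Because the order is shuffled before anyone is placed in a group, no characteristic of a child (personality, family background, intelligence) can influence which group they end up in.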

2. The Longitudinal Study

A longitudinal study involves selecting a sample of participants and then following them for years, or decades, periodically collecting data on the variables of interest.

For example, a researcher might be interested in determining if parenting style affects academic performance of children. Parenting style is called the predictor variable, and academic performance is called the outcome variable.

Researchers will begin by randomly selecting a group of children to be in the study. Then, they will identify the type of parenting practices used when the children are 4 and 5 years old.

A few years later, perhaps when the children are 8 and 9, the researchers will collect data on their grades. This process can be repeated over the next 10 years, including through college.

If parenting style has an effect on academic performance, then the researchers will see a connection between the predictor variable and outcome variable.

Children raised with parenting style X will have higher grades than children raised with parenting style Y.

3. The Case Study

The case study is an in-depth study of one individual. This is a research methodology often used early in the examination of a psychological phenomenon or therapeutic treatment.

For example, in the early days of treating phobias, a clinical psychologist may try teaching one of their patients how to relax every time they see the object that creates so much fear and anxiety, such as a large spider.

The therapist would take very detailed notes on how the teaching process was implemented and the reactions of the patient. When the treatment had been completed, those notes would be written in a scientific form and submitted for publication in a scientific journal for other therapists to learn from.

There are several other types of methodologies available which vary different aspects of the three described above. The researcher will select a methodology that is most appropriate to the phenomenon they want to examine.

They also must take into account various practical considerations such as how much time and resources are needed to complete the study. Conducting research always costs money.

People and equipment are needed to carry out every study, so researchers often try to obtain funding from their university or a government agency.

Origins and Key Developments in Experimental Psychology

Wilhelm Maximilian Wundt (1832-1920) is considered one of the fathers of modern psychology. He was a physiologist and philosopher and helped establish psychology as a distinct discipline (Khaleefa, 1999).

In 1879, he established the world’s first psychology research lab at the University of Leipzig. This is considered a key milestone in establishing psychology as a scientific discipline. In addition to being the first person to use the term “psychologist” to describe himself, he also founded the discipline’s first scientific journal, Philosophische Studien, in 1883.

Another notable figure in the development of experimental psychology is Ernst Weber. Trained as a physician, Weber studied sensation and perception and created the first quantitative law in psychology.

The equation denotes how judgments of sensory differences are relative to previous levels of sensation, referred to as the just-noticeable difference (jnd). This is known today as Weber’s Law (Hergenhahn, 2009).
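Weber’s Law is usually written in the following form. This is the standard textbook statement rather than a formula quoted from the sources cited here, and the symbols $\Delta I$, $I$, and $k$ are the conventional ones.

$$
\frac{\Delta I}{I} = k
$$

Here $I$ is the intensity of the original stimulus, $\Delta I$ is the smallest change that can be detected (the jnd), and $k$ is a constant for a given sense. As a purely illustrative calculation, assuming $k = 0.02$ for lifted weights, a 100 g weight would need to change by about 2 g to feel different, while a 200 g weight would need to change by about 4 g.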

Gustav Fechner, one of Weber’s students, published the first book on experimental psychology in 1860, titled Elemente der Psychophysik. His work centered on the measurement of psychophysical facets of sensation and perception, and many of his methods are still in use today.

The first American textbook on experimental psychology was Elements of Physiological Psychology, published in 1887 by George Trumbull Ladd.

Ladd also established a psychology lab at Yale University, while Stanley Hall and Charles Sanders Peirce continued Wundt’s work at a lab at Johns Hopkins University.

Charles Peirce’s contribution to experimental psychology in the late 1800s is especially noteworthy because he invented the concept of random assignment (Stigler, 1992; Dehue, 1997).

This procedure ensures that each participant has an equal chance of being placed in any of the experimental groups (e.g., treatment or control group). This eliminates the influence of confounding factors related to inherent characteristics of the participants.

Random assignment is a fundamental criterion for a study to be considered a valid experiment.

From there, experimental psychology flourished in the 20th century as a science and transformed into an approach utilized in cognitive psychology, developmental psychology, and social psychology.

Today, the term experimental psychology refers to the study of a wide range of phenomena and involves methodologies not limited to the manipulation of variables.

The Scientific Process and Experimental Psychology

The one thing that makes psychology a science and distinguishes it from its roots in philosophy is its reliance on the scientific process to answer questions. Making psychology a science was the main goal of its earliest founders, such as Wilhelm Wundt.

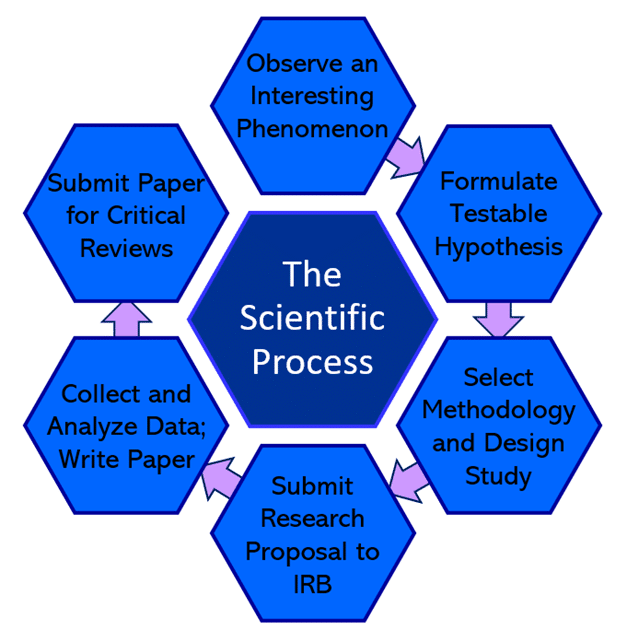

There are numerous steps in the scientific process, outlined in the accompanying graphic.

1. Observation

First, the scientist observes an interesting phenomenon that sparks a question. For example, are the memories of eyewitnesses really reliable, or are they subject to bias or unintentional manipulation?

2. Hypothesize

Next, this question is converted into a testable hypothesis. For instance: the words used to question a witness can influence what they think they remember.

3. Devise a Study

Then the researcher(s) select a methodology that will allow them to test that hypothesis. In this case, the researchers choose the experiment, which will involve randomly assigning some participants to different conditions.

In one condition, participants are asked a question that implies a certain memory (treatment group), while other participants are asked a question which is phrased neutrally and does not imply a certain memory (control group).

The researchers then write a proposal that describes in detail the procedures they want to use, how participants will be selected, and the safeguards they will employ to ensure the rights of the participants.

That proposal is submitted to an Institutional Review Board (IRB). The IRB is comprised of a panel of researchers, community representatives, and other professionals that are responsible for reviewing all studies involving human participants.

4. Conduct the Study

If the IRB accepts the proposal, then the researchers may begin collecting data. After the data has been collected, it is analyzed using a software program such as SPSS.

Those analyses will either support or reject the hypothesis. That is, either the participants’ memories were affected by the wording of the question, or not.
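To make the analysis step concrete, here is a minimal sketch in plain Python rather than SPSS. It uses a permutation test, which is a substitute technique chosen for simplicity and not the analysis used in any study cited here. The speed estimates are made up for illustration, and the names `permutation_test`, `leading_wording`, and `neutral_wording` are hypothetical.

```python
import random


def average(values):
    return sum(values) / len(values)


def permutation_test(treatment, control, n_permutations=10_000, seed=0):
    """Return (observed difference in means, two-sided permutation p-value)."""
    rng = random.Random(seed)
    observed = average(treatment) - average(control)
    pooled = list(treatment) + list(control)
    n_treatment = len(treatment)
    extreme = 0
    for _ in range(n_permutations):
        # Reshuffle the group labels and recompute the difference in means.
        rng.shuffle(pooled)
        diff = average(pooled[:n_treatment]) - average(pooled[n_treatment:])
        if abs(diff) >= abs(observed):
            extreme += 1
    return observed, extreme / n_permutations


# Made-up speed estimates (mph), for illustration only; not data from any study.
leading_wording = [41, 38, 44, 40, 39, 43, 42, 37]
neutral_wording = [33, 35, 31, 36, 34, 32, 30, 35]

observed_diff, p_value = permutation_test(leading_wording, neutral_wording)
print(f"difference in means: {observed_diff:.2f} mph, p = {p_value:.4f}")
```

The test asks how often a difference at least as large as the observed one appears when the group labels are shuffled at random. A small p-value counts as support for the hypothesis that the wording mattered; a large one means the data do not support it.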

5. Publish the Study

Finally, the researchers write a paper detailing their procedures and results of the statistical analyses. That paper is then submitted to a scientific journal.

The lead editor of that journal will then send copies of the paper to 3-5 experts in that subject. Each of those experts will read the paper and basically try to find as many things wrong with it as possible. Because they are experts, they are very good at this task.

After reading those critiques, most likely, the editor will either send the paper back to the researchers and require that they respond to the criticisms or collect more data, or reject the paper outright.

In some cases, the study was so well-done that the criticisms were minimal and the editor accepts the paper. It then gets published in the scientific journal several months later.

That entire process can easily take 2 years, usually more. But, the findings of that study went through a very rigorous process. This means that we can have substantial confidence that the conclusions of the study are valid.

Experimental psychology refers to utilizing a scientific process to investigate psychological phenomena.

There are a variety of methods employed today. They are used to study a wide range of subjects, including memory, cognitive processes, emotions and the neurophysiological basis of each.

The history of psychology as a science began in the 1800s primarily in Germany. As interest grew, the field expanded to the United States where several influential research labs were established.

As more methodologies were developed, the field of psychology as a science evolved into a prolific scientific discipline that has provided invaluable insights into human behavior.

Bartlett, F. C. (1995). Remembering: A study in experimental and social psychology. Cambridge University Press.

Dehue, T. (1997). Deception, efficiency, and random groups: Psychology and the gradual origination of the random group design. Isis, 88(4), 653-673.

Ebbinghaus, H. (2013). Memory: A contribution to experimental psychology. Annals of Neurosciences, 20(4), 155.

Hergenhahn, B. R. (2009). An introduction to the history of psychology. Belmont, CA: Wadsworth Cengage Learning.

Khaleefa, O. (1999). Who is the founder of psychophysics and experimental psychology? American Journal of Islam and Society, 16(2), 1-26.

Loftus, E. F., & Palmer, J. C. (1974). Reconstruction of automobile destruction: An example of the interaction between language and memory. Journal of Verbal Learning and Verbal Behavior, 13, 585-589.

Pavlov, I. P. (1927). Conditioned reflexes. Dover, New York.

Piaget, J. (1959). The language and thought of the child (Vol. 5). Psychology Press.

Piaget, J., Fraisse, P., & Reuchlin, M. (2014). Experimental psychology its scope and method: Volume I (Psychology Revivals): History and method. Psychology Press.

Skinner, B. F. (1956). A case history in scientific method. American Psychologist, 11, 221-233.

Stigler, S. M. (1992). A historical view of statistical concepts in psychology and educational research. American Journal of Education, 101(1), 60-70.

Thorndike, E. L. (1898). Animal intelligence: An experimental study of the associative processes in animals. Psychological Review Monograph Supplement 2.

Wolpe, J. (1958). Psychotherapy by reciprocal inhibition. Stanford, CA: Stanford University Press.

Appendix: Images reproduced as Text

Definition: Experimental psychology is a branch of psychology that focuses on conducting systematic and controlled experiments to study human behavior and cognition.

Overview: Experimental psychology aims to gather empirical evidence and explore cause-and-effect relationships between variables. Experimental psychologists utilize various research methods, including laboratory experiments, surveys, and observations, to investigate topics such as perception, memory, learning, motivation, and social behavior.

Example: Pavlov’s dog experiment used scientific methods to develop a theory about how learning and association occur in animals. The same concepts were subsequently applied to the study of humans, leading to psychological theories of learning. Pavlov’s use of empirical evidence was foundational to the study’s success.

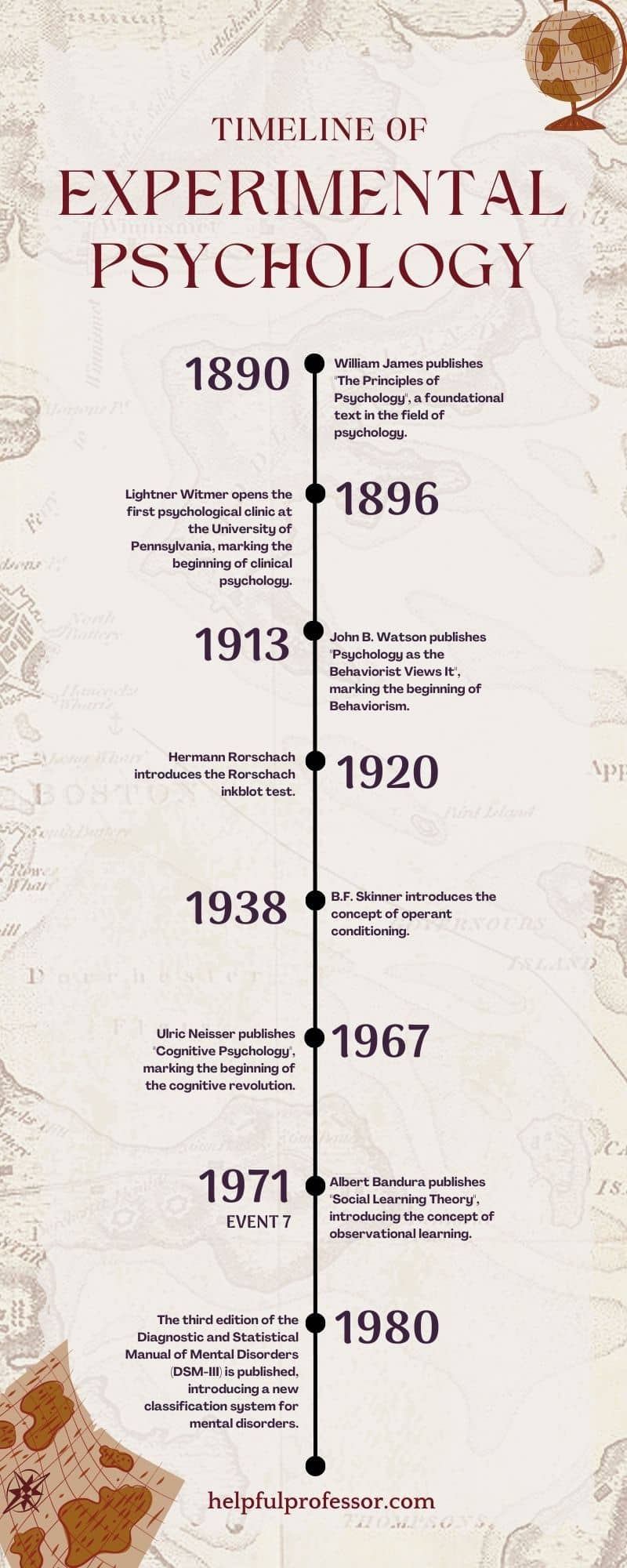

Experimental Psychology Milestones:

1890: William James publishes “The Principles of Psychology”, a foundational text in the field of psychology.

1896: Lightner Witmer opens the first psychological clinic at the University of Pennsylvania, marking the beginning of clinical psychology.

1913: John B. Watson publishes “Psychology as the Behaviorist Views It”, marking the beginning of Behaviorism.

1920: Hermann Rorschach introduces the Rorschach inkblot test.

1938: B.F. Skinner introduces the concept of operant conditioning.

1967: Ulric Neisser publishes “Cognitive Psychology”, marking the beginning of the cognitive revolution.

1980: The third edition of the Diagnostic and Statistical Manual of Mental Disorders (DSM-III) is published, introducing a new classification system for mental disorders.

The Scientific Process

- Observe an interesting phenomenon

- Formulate testable hypothesis

- Select methodology and design study

- Submit research proposal to IRB

- Collect and analyze data; write paper

- Submit paper for critical reviews

How the Experimental Method Works in Psychology

Kendra Cherry, MS, is a psychosocial rehabilitation specialist, psychology educator, and author of the "Everything Psychology Book."

Amanda Tust is an editor, fact-checker, and writer with a Master of Science in Journalism from Northwestern University's Medill School of Journalism.

The experimental method is a type of research procedure that involves manipulating variables to determine if there is a cause-and-effect relationship. The results obtained through the experimental method are useful but do not prove with 100% certainty that a singular cause always creates a specific effect. Instead, they show the probability that a cause will or will not lead to a particular effect.

At a Glance

While there are many different research techniques available, the experimental method allows researchers to look at cause-and-effect relationships. Using the experimental method, researchers randomly assign participants to a control or experimental group and manipulate levels of an independent variable. If changes in the independent variable lead to changes in the dependent variable, it indicates there is likely a causal relationship between them.

What Is the Experimental Method in Psychology?

The experimental method involves manipulating one variable to determine if this causes changes in another variable. This method relies on controlled research methods and random assignment of study subjects to test a hypothesis.

For example, researchers may want to learn how different visual patterns may impact our perception. Or they might wonder whether certain actions can improve memory. Experiments are conducted on many behavioral topics.

The scientific method forms the basis of the experimental method. This is a process used to determine the relationship between two variables, in this case to explain human behavior.

Positivism is also important in the experimental method. It refers to factual knowledge that is obtained through observation, which is considered to be trustworthy.

When using the experimental method, researchers first identify and define key variables. Then they formulate a hypothesis, manipulate the variables, and collect data on the results. Unrelated or irrelevant variables are carefully controlled to minimize the potential impact on the experiment outcome.

History of the Experimental Method

The idea of using experiments to better understand human psychology began toward the end of the nineteenth century. Wilhelm Wundt established the first formal laboratory in 1879.

Wundt is often called the father of experimental psychology. He believed that experiments could help explain how psychology works, and used this approach to study consciousness .

Wundt coined the term "physiological psychology." This is a hybrid of physiology and psychology, or how the body affects the brain.

Other early contributors to the development and evolution of experimental psychology as we know it today include:

- Gustav Fechner (1801-1887), who helped develop procedures for measuring sensations according to the size of the stimulus

- Hermann von Helmholtz (1821-1894), who analyzed philosophical assumptions through research in an attempt to arrive at scientific conclusions

- Franz Brentano (1838-1917), who called for a combination of first-person and third-person research methods when studying psychology

- Georg Elias Müller (1850-1934), who performed an early experiment on attitude which involved the sensory discrimination of weights and revealed how anticipation can affect this discrimination

Key Terms to Know

To understand how the experimental method works, it is important to know some key terms.

Dependent Variable

The dependent variable is the effect that the experimenter is measuring. If a researcher was investigating how sleep influences test scores, for example, the test scores would be the dependent variable.

Independent Variable

The independent variable is the variable that the experimenter manipulates. In the previous example, the amount of sleep an individual gets would be the independent variable.

Hypothesis

A hypothesis is a tentative statement or a guess about the possible relationship between two or more variables. In looking at how sleep influences test scores, the researcher might hypothesize that people who get more sleep will perform better on a math test the following day. The purpose of the experiment, then, is to either support or reject this hypothesis.

Operational Definitions

Operational definitions are necessary when performing an experiment. When we say that something is an independent or dependent variable, we must have a very clear and specific definition of the meaning and scope of that variable.

Extraneous Variables

Extraneous variables are other variables that may also affect the outcome of an experiment. Types of extraneous variables include participant variables, situational variables, demand characteristics, and experimenter effects. In some cases, researchers can take steps to control for extraneous variables.

Demand Characteristics

Demand characteristics are subtle hints that indicate what an experimenter is hoping to find in a psychology experiment. This can sometimes cause participants to alter their behavior, which can affect the results of the experiment.

Intervening Variables

Intervening variables are factors that can affect the relationship between two other variables.

Confounding Variables

Confounding variables are variables that can affect the dependent variable, but that experimenters cannot control for. Confounding variables can make it difficult to determine if the effect was due to changes in the independent variable or if the confounding variable may have played a role.

The Experimental Process

Psychologists, like other scientists, use the scientific method when conducting an experiment. The scientific method is a set of procedures and principles that guide how scientists develop research questions, collect data, and come to conclusions.

The five basic steps of the experimental process are:

- Identifying a problem to study

- Devising the research protocol

- Conducting the experiment

- Analyzing the data collected

- Sharing the findings (usually in writing or via presentation)

Most psychology students are expected to use the experimental method at some point in their academic careers. Learning how to conduct an experiment is important to understanding how psychologists test theories in this field.

Types of Experiments

There are a few different types of experiments that researchers might use when studying psychology. Each has pros and cons depending on the participants being studied, the hypothesis, and the resources available to conduct the research.

Lab Experiments

Lab experiments are common in psychology because they allow experimenters more control over the variables. These experiments can also be easier for other researchers to replicate. The drawback of this research type is that what takes place in a lab is not always what takes place in the real world.

Field Experiments

Sometimes researchers opt to conduct their experiments in the field. For example, a social psychologist interested in researching prosocial behavior might have a person pretend to faint and observe how long it takes onlookers to respond.

This type of experiment can be a great way to see behavioral responses in realistic settings. But it is more difficult for researchers to control the many variables existing in these settings that could potentially influence the experiment's results.

Quasi-Experiments

While lab experiments are known as true experiments, researchers can also utilize a quasi-experiment. Quasi-experiments are often referred to as natural experiments because the researchers do not have true control over the independent variable.

A researcher looking at personality differences and birth order, for example, is not able to manipulate the independent variable in the situation (birth order). Participants also cannot be randomly assigned because they naturally fall into pre-existing groups based on their birth order.

So why would a researcher use a quasi-experiment? This is a good choice in situations where scientists are interested in studying phenomena in natural, real-world settings. It's also beneficial if there are limits on research funds or time.

Field experiments can be either quasi-experiments or true experiments.

Examples of the Experimental Method in Use

The experimental method can provide insight into human thoughts and behaviors. Researchers use experiments to study many aspects of psychology.

A 2019 study investigated whether splitting attention between electronic devices and classroom lectures had an effect on college students' learning abilities. It found that dividing attention between these two mediums did not affect lecture comprehension. However, it did impact long-term retention of the lecture information, which affected students' exam performance.

An experiment used participants' eye movements and electroencephalogram (EEG) data to better understand cognitive processing differences between experts and novices. It found that experts had higher power in their theta brain waves than novices, suggesting that they also had a higher cognitive load.

A study looked at whether chatting online with a computer via a chatbot changed the positive effects of emotional disclosure often received when talking with an actual human. It found that the effects were the same in both cases.

One experimental study evaluated whether exercise timing impacts information recall. It found that engaging in exercise prior to performing a memory task helped improve participants' short-term memory abilities.

Sometimes researchers use the experimental method to get a bigger-picture view of psychological behaviors and impacts. For example, one 2018 study examined several lab experiments to learn more about the impact of various environmental factors on building occupant perceptions.

A 2020 study set out to determine the role that sensation-seeking plays in political violence. This research found that sensation-seeking individuals have a higher propensity for engaging in political violence. It also found that providing access to a more peaceful, yet still exciting political group helps reduce this effect.

While the experimental method can be a valuable tool for learning more about psychology and its impacts, it also comes with a few pitfalls.

Experiments may produce artificial results, which are difficult to apply to real-world situations. Similarly, researcher bias can impact the data collected. Results may not be reproducible, meaning they have low reliability.

Since humans are unpredictable and their behavior can be subjective, it can be hard to measure responses in an experiment. In addition, political pressure may alter the results. The subjects may not be a good representation of the population, or groups used may not be comparable.

And finally, since researchers are human too, results may be degraded due to human error.

What This Means For You

Every psychological research method has its pros and cons. The experimental method can help establish cause and effect, and it's also beneficial when research funds are limited or time is of the essence.

At the same time, it's essential to be aware of this method's pitfalls, such as how biases can affect the results or the potential for low reliability. Keeping these in mind can help you review and assess research studies more accurately, giving you a better idea of whether the results can be trusted or have limitations.

Colorado State University. Experimental and quasi-experimental research.

American Psychological Association. Experimental psychology studies humans and animals.

Mayrhofer R, Kuhbandner C, Lindner C. The practice of experimental psychology: An inevitably postmodern endeavor. Front Psychol. 2021;11:612805. doi:10.3389/fpsyg.2020.612805

Mandler G. A History of Modern Experimental Psychology.

Stanford University. Wilhelm Maximilian Wundt. Stanford Encyclopedia of Philosophy.

Britannica. Gustav Fechner.

Britannica. Hermann von Helmholtz.

Meyer A, Hackert B, Weger U. Franz Brentano and the beginning of experimental psychology: implications for the study of psychological phenomena today. Psychol Res. 2018;82:245-254. doi:10.1007/s00426-016-0825-7

Britannica. Georg Elias Müller.

McCambridge J, de Bruin M, Witton J. The effects of demand characteristics on research participant behaviours in non-laboratory settings: A systematic review. PLoS ONE. 2012;7(6):e39116. doi:10.1371/journal.pone.0039116

Laboratory experiments. In: The Sage Encyclopedia of Communication Research Methods. Allen M, ed. SAGE Publications, Inc. doi:10.4135/9781483381411.n287

Schweizer M, Braun B, Milstone A. Research methods in healthcare epidemiology and antimicrobial stewardship — quasi-experimental designs. Infect Control Hosp Epidemiol. 2016;37(10):1135-1140. doi:10.1017/ice.2016.117

Glass A, Kang M. Dividing attention in the classroom reduces exam performance. Educ Psychol. 2019;39(3):395-408. doi:10.1080/01443410.2018.1489046

Keskin M, Ooms K, Dogru AO, De Maeyer P. Exploring the cognitive load of expert and novice map users using EEG and eye tracking. ISPRS Int J Geo-Inf. 2020;9(7):429. doi:10.3390/ijgi9070429

Ho A, Hancock J, Miner A. Psychological, relational, and emotional effects of self-disclosure after conversations with a chatbot. J Commun. 2018;68(4):712-733. doi:10.1093/joc/jqy026

Haynes IV J, Frith E, Sng E, Loprinzi P. Experimental effects of acute exercise on episodic memory function: Considerations for the timing of exercise. Psychol Rep. 2018;122(5):1744-1754. doi:10.1177/0033294118786688

Torresin S, Pernigotto G, Cappelletti F, Gasparella A. Combined effects of environmental factors on human perception and objective performance: A review of experimental laboratory works. Indoor Air. 2018;28(4):525-538. doi:10.1111/ina.12457

Schumpe BM, Belanger JJ, Moyano M, Nisa CF. The role of sensation seeking in political violence: An extension of the significance quest theory. J Personal Social Psychol. 2020;118(4):743-761. doi:10.1037/pspp0000223

By Kendra Cherry, MSEd Kendra Cherry, MS, is a psychosocial rehabilitation specialist, psychology educator, and author of the "Everything Psychology Book."


Experimental Design – Types, Methods, Guide


Experimental design is a structured approach used to conduct scientific experiments. It enables researchers to explore cause-and-effect relationships by controlling variables and testing hypotheses. This guide explores the types of experimental designs, common methods, and best practices for planning and conducting experiments.

Experimental Design

Experimental design refers to the process of planning a study to test a hypothesis, where variables are manipulated to observe their effects on outcomes. By carefully controlling conditions, researchers can determine whether specific factors cause changes in a dependent variable.

Key Characteristics of Experimental Design :

- Manipulation of Variables : The researcher intentionally changes one or more independent variables.

- Control of Extraneous Factors : Other variables are kept constant to avoid interference.

- Randomization : Subjects are often randomly assigned to groups to reduce bias.

- Replication : Repeating the experiment or having multiple subjects helps verify results.

Purpose of Experimental Design

The primary purpose of experimental design is to establish causal relationships by controlling for extraneous factors and reducing bias. Experimental designs help:

- Test Hypotheses : Determine if there is a significant effect of independent variables on dependent variables.

- Control Confounding Variables : Minimize the impact of variables that could distort results.

- Generate Reproducible Results : Provide a structured approach that allows other researchers to replicate findings.

Types of Experimental Designs

Experimental designs can vary based on the number of variables, the assignment of participants, and the purpose of the experiment. Here are some common types:

1. Pre-Experimental Designs

These designs are exploratory and lack random assignment, often used when strict control is not feasible. They provide initial insights but are less rigorous in establishing causality.

- Example : A training program is provided, and participants’ knowledge is tested afterward, without a pretest.

- Example : A group is tested on reading skills, receives instruction, and is tested again to measure improvement.

2. True Experimental Designs

True experiments involve random assignment of participants to control or experimental groups, providing high levels of control over variables.

- Example : A new drug’s efficacy is tested with patients randomly assigned to receive the drug or a placebo.

- Example : Two groups are observed after one group receives a treatment, and the other receives no intervention.

3. Quasi-Experimental Designs

Quasi-experiments lack random assignment but still aim to determine causality by comparing groups or time periods. They are often used when randomization isn’t possible, such as in natural or field experiments.

- Example : Schools receive different curriculums, and students’ test scores are compared before and after implementation.

- Example : Traffic accident rates are recorded for a city before and after a new speed limit is enforced.

4. Factorial Designs

Factorial designs test the effects of multiple independent variables simultaneously. This design is useful for studying the interactions between variables.

- Example : Studying how caffeine (variable 1) and sleep deprivation (variable 2) affect memory performance.

- Example : An experiment studying the impact of age, gender, and education level on technology usage.
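As a rough illustration of how a factorial design crosses its independent variables, the sketch below enumerates every condition for the caffeine and sleep deprivation example. The factor levels ("none", "200 mg", "rested", "deprived") and the function name `factorial_conditions` are assumptions made for illustration, not values from a real study.

```python
from itertools import product

# Hypothetical factor levels for the caffeine x sleep deprivation example.
factors = {
    "caffeine": ["none", "200 mg"],
    "sleep": ["rested", "deprived"],
}


def factorial_conditions(factors):
    """Return every combination of factor levels as a list of dicts."""
    names = list(factors)
    return [dict(zip(names, levels)) for levels in product(*factors.values())]


conditions = factorial_conditions(factors)
for condition in conditions:
    print(condition)
```

A design with two factors of two levels each yields four conditions; adding a third factor multiplies the number of conditions again, which is why factorial studies grow quickly in size.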

5. Repeated Measures Design

In repeated measures designs, the same participants are exposed to different conditions or treatments. This design is valuable for studying changes within subjects over time.

- Example : Measuring reaction time in participants before, during, and after caffeine consumption.

- Example : Testing two medications, with each participant receiving both but in a different sequence.

Methods for Implementing Experimental Designs

1. Randomization

- Purpose : Ensures each participant has an equal chance of being assigned to any group, reducing selection bias.

- Method : Use random number generators or assignment software to allocate participants randomly.

2. Blinding

- Purpose : Prevents participants or researchers from knowing which group (experimental or control) participants belong to, reducing bias.

- Method : Implement single-blind (participants unaware) or double-blind (both participants and researchers unaware) procedures.

3. Control Groups

- Purpose : Provides a baseline for comparison, showing what would happen without the intervention.

- Method : Include a group that does not receive the treatment but otherwise undergoes the same conditions.

4. Counterbalancing

- Purpose : Controls for order effects in repeated measures designs by varying the order of treatments.

- Method : Assign different sequences to participants, ensuring that each condition appears equally across orders.

5. Replication

- Purpose : Ensures reliability by repeating the experiment or including multiple participants within groups.

- Method : Increase sample size or repeat studies with different samples or in different settings.
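Random assignment with a random number generator can be sketched in a few lines. This is a minimal example, assuming a hypothetical list of participant IDs and two groups; the function name `assign_groups` and the `seed` parameter are illustrative choices, not part of any standard tool.

```python
import random


def assign_groups(participants, groups=("experimental", "control"), seed=None):
    """Shuffle participants, then deal them out to the groups in turn."""
    rng = random.Random(seed)  # a seed makes the allocation reproducible
    shuffled = list(participants)
    rng.shuffle(shuffled)
    assignment = {group: [] for group in groups}
    for index, person in enumerate(shuffled):
        assignment[groups[index % len(groups)]].append(person)
    return assignment


# Hypothetical participant IDs P01..P20.
participants = [f"P{n:02d}" for n in range(1, 21)]
allocation = assign_groups(participants, seed=42)
for group, members in allocation.items():
    print(group, members)
```

Shuffling first and then dealing participants out in turn keeps the group sizes equal while still giving every participant the same chance of ending up in either group.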

Steps to Conduct an Experimental Design

- Clearly state what you intend to discover or prove through the experiment. A strong hypothesis guides the experiment’s design and variable selection.

- Independent Variable (IV) : The factor manipulated by the researcher (e.g., amount of sleep).

- Dependent Variable (DV) : The outcome measured (e.g., reaction time).

- Control Variables : Factors kept constant to prevent interference with results (e.g., time of day for testing).

- Choose a design type that aligns with your research question, hypothesis, and available resources. For example, an RCT for a medical study or a factorial design for complex interactions.

- Randomly assign participants to experimental or control groups. Ensure control groups are similar to experimental groups in all respects except for the treatment received.

- Randomize the assignment and, if possible, apply blinding to minimize potential bias.

- Follow a consistent procedure for each group, collecting data systematically. Record observations and manage any unexpected events or variables that may arise.

- Use appropriate statistical methods to test for significant differences between groups, such as t-tests, ANOVA, or regression analysis.

- Determine whether the results support your hypothesis and analyze any trends, patterns, or unexpected findings. Discuss possible limitations and implications of your results.
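To make the analysis step more concrete, here is a small sketch of one of the tests mentioned above: an independent-samples t-test in its Welch form, which does not assume equal variances. The reaction-time values are invented for illustration, and the function only returns the t statistic and degrees of freedom; obtaining a p-value would normally be left to a statistics package.

```python
from math import sqrt
from statistics import mean, variance


def welch_t(sample_a, sample_b):
    """Return Welch's t statistic and its approximate degrees of freedom."""
    n_a, n_b = len(sample_a), len(sample_b)
    # Squared standard error of each group mean (sample variance / n).
    se_a = variance(sample_a) / n_a
    se_b = variance(sample_b) / n_b
    t = (mean(sample_a) - mean(sample_b)) / sqrt(se_a + se_b)
    # Welch-Satterthwaite approximation for the degrees of freedom.
    df = (se_a + se_b) ** 2 / (se_a ** 2 / (n_a - 1) + se_b ** 2 / (n_b - 1))
    return t, df


# Hypothetical reaction times in milliseconds (not real data).
rested = [250, 260, 245, 255, 270]
sleep_deprived = [280, 295, 275, 300, 290]

t_value, degrees_of_freedom = welch_t(rested, sleep_deprived)
print(f"t = {t_value:.2f}, df = {degrees_of_freedom:.1f}")
```

A large absolute t value relative to the degrees of freedom suggests the group means differ by more than chance alone would explain.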

Examples of Experimental Design in Research

- Medicine : Testing a new drug’s effectiveness through a randomized controlled trial, where one group receives the drug and another receives a placebo.

- Psychology : Studying the effect of sleep deprivation on memory using a within-subject design, where participants are tested with different sleep conditions.

- Education : Comparing teaching methods in a quasi-experimental design by measuring students’ performance before and after implementing a new curriculum.

- Marketing : Using a factorial design to examine the effects of advertisement type and frequency on consumer purchase behavior.

- Environmental Science : Testing the impact of a pollution reduction policy through a time series design, recording pollution levels before and after implementation.

Experimental design is fundamental to conducting rigorous and reliable research, offering a systematic approach to exploring causal relationships. With various types of designs and methods, researchers can choose the most appropriate setup to answer their research questions effectively. By applying best practices, controlling variables, and selecting suitable statistical methods, experimental design supports meaningful insights across scientific, medical, and social research fields.


About the author

Muhammad Hassan

Researcher, Academic Writer, Web developer


Research Methods In Psychology

Saul McLeod, PhD

Editor-in-Chief for Simply Psychology

BSc (Hons) Psychology, MRes, PhD, University of Manchester

Saul McLeod, PhD., is a qualified psychology teacher with over 18 years of experience in further and higher education. He has been published in peer-reviewed journals, including the Journal of Clinical Psychology.


Olivia Guy-Evans, MSc

Associate Editor for Simply Psychology

BSc (Hons) Psychology, MSc Psychology of Education

Olivia Guy-Evans is a writer and associate editor for Simply Psychology. She has previously worked in healthcare and educational sectors.

Research methods in psychology are systematic procedures used to observe, describe, predict, and explain behavior and mental processes. They include experiments, surveys, case studies, and naturalistic observations, ensuring data collection is objective and reliable to understand and explain psychological phenomena.

Hypotheses are testable statements that predict the results of an investigation and can be supported or refuted by the evidence.

There are four types of hypotheses:

- Null Hypotheses (H0) – these predict that no difference will be found in the results between the conditions. Typically these are written ‘There will be no difference…’

- Alternative Hypotheses (Ha or H1) – these predict that there will be a significant difference in the results between the two conditions. This is also known as the experimental hypothesis.

- One-tailed (directional) hypotheses – these state the specific direction the researcher expects the results to move in, e.g. higher, lower, more, less. In a correlation study, the predicted direction of the correlation can be either positive or negative.

- Two-tailed (non-directional) hypotheses – these state that a difference will be found between the conditions of the independent variable but do not state the direction of the difference or relationship. Typically these are written ‘There will be a difference ….’

All research has an alternative hypothesis (either a one-tailed or two-tailed) and a corresponding null hypothesis.

Once the research is conducted and results are found, psychologists must accept one hypothesis and reject the other.

So, if a difference is found, the psychologist would accept the alternative hypothesis and reject the null. The opposite applies if no difference is found.

Sampling techniques

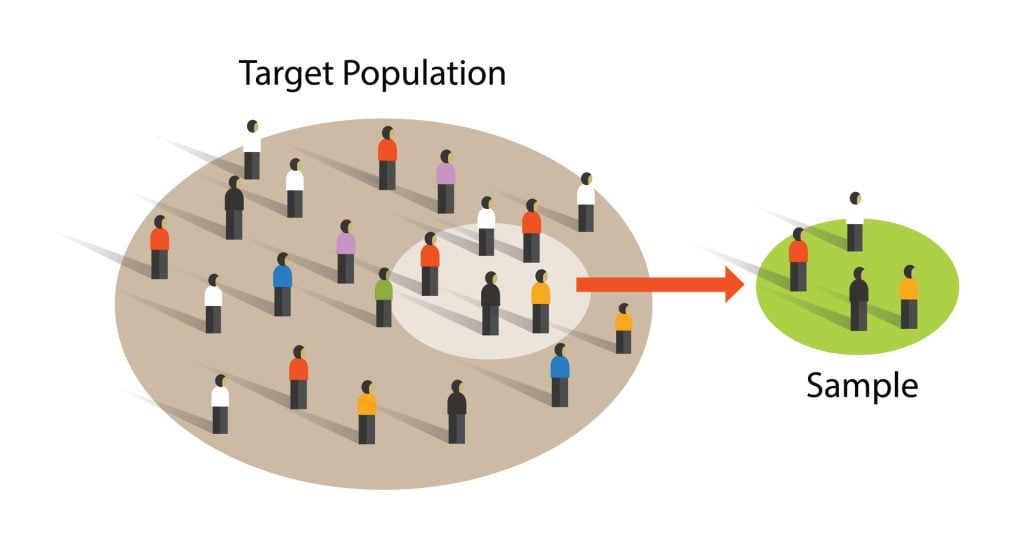

Sampling is the process of selecting a representative group from the population under study.

A sample is the participants you select from a target population (the group you are interested in) to make generalizations about.

Representative means the extent to which a sample mirrors a researcher’s target population and reflects its characteristics.

Generalisability means the extent to which a study’s findings can be applied to the larger population from which the sample was drawn.

- Volunteer sample : where participants pick themselves through newspaper adverts, noticeboards or online.

- Opportunity sampling : also known as convenience sampling , uses people who are available at the time the study is carried out and willing to take part. It is based on convenience.

- Random sampling : when every person in the target population has an equal chance of being selected. An example of random sampling would be picking names out of a hat.

- Systematic sampling : when a system is used to select participants. Picking every Nth person from all possible participants. N = the number of people in the research population / the number of people needed for the sample.

- Stratified sampling : when you identify the subgroups and select participants in proportion to their occurrences.

- Snowball sampling : when researchers find a few participants, and then ask them to find participants themselves and so on.

- Quota sampling : when researchers will be told to ensure the sample fits certain quotas, for example they might be told to find 90 participants, with 30 of them being unemployed.
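Three of the techniques above (random, systematic, and stratified sampling) are easy to express in code. The sketch below is illustrative only: the population of 100 people, the two strata, and the function names are all assumptions, and the systematic sampler uses a random starting point, which the description above does not specify.

```python
import random


def random_sample(population, k, rng):
    """Every member has an equal chance of selection (names out of a hat)."""
    return rng.sample(population, k)


def systematic_sample(population, k, rng):
    """Pick every Nth person, where N = population size / sample size."""
    step = len(population) // k
    start = rng.randrange(step)  # assumption: begin at a random offset
    return [population[start + i * step] for i in range(k)]


def stratified_sample(strata, k, rng):
    """Sample each subgroup in proportion to its share of the population."""
    total = sum(len(members) for members in strata.values())
    sample = []
    for members in strata.values():
        # Rounding can make the total differ slightly from k for uneven strata.
        share = round(k * len(members) / total)
        sample.extend(rng.sample(members, share))
    return sample


rng = random.Random(0)
population = [f"person_{i}" for i in range(100)]  # hypothetical population
strata = {"employed": population[:60], "unemployed": population[60:]}

print(random_sample(population, 10, rng))
print(systematic_sample(population, 10, rng))
print(stratified_sample(strata, 10, rng))
```

With 60 employed and 40 unemployed people, a stratified sample of 10 contains 6 and 4 respectively, mirroring the population proportions.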

Experiments always have an independent and a dependent variable.

- The independent variable is the one the experimenter manipulates (the thing that changes between the conditions the participants are placed into). It is assumed to have a direct effect on the dependent variable.

- The dependent variable is the thing being measured, or the results of the experiment.

Operationalization of variables means making them measurable/quantifiable. We must use operationalization to ensure that variables are in a form that can be easily tested.

For instance, we can’t really measure ‘happiness’, but we can measure how many times a person smiles within a two-hour period.

By operationalizing variables, we make it easy for someone else to replicate our research. Remember, this is important because we can check if our findings are reliable.

Extraneous variables are all variables other than the independent variable that could affect the results of the experiment.

They can be natural characteristics of the participant, such as intelligence level, gender, or age, or situational features of the environment, such as lighting or noise.

Demand characteristics are a type of extraneous variable that occurs when participants work out the aims of the research study and begin to behave in a certain way as a result.

For example, in Milgram’s research, critics argued that participants worked out that the shocks were not real and administered them because they thought this was what was required of them.

Extraneous variables must be controlled so that they do not affect (confound) the results.

Randomly allocating participants to their conditions or using a matched pairs experimental design can help to reduce participant variables.

Situational variables are controlled by using standardized procedures, ensuring every participant in a given condition is treated in the same way.

Experimental Design

Experimental design refers to how participants are allocated to each condition of the independent variable, such as a control or experimental group.

- Independent design ( between-groups design ): each participant is selected for only one group. With the independent design, the most common way of deciding which participants go into which group is by means of randomization.

- Matched participants design : each participant is selected for only one group, but the participants in the two groups are matched for some relevant factor or factors (e.g. ability; sex; age).

- Repeated measures design (within-groups design): each participant appears in both groups, so that there are exactly the same participants in each group.

- The main problem with the repeated measures design is that there may well be order effects. Their experiences during the experiment may change the participants in various ways.

- They may perform better when they appear in the second group because they have gained useful information about the experiment or about the task. On the other hand, they may perform less well on the second occasion because of tiredness or boredom.

- Counterbalancing is the best way of preventing order effects from disrupting the findings of an experiment, and involves ensuring that each condition is equally likely to be used first and second by the participants.
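Counterbalancing can be sketched as cycling participants through every possible order of the conditions. This is a minimal example with hypothetical participant IDs and condition labels "A" and "B"; the function name `counterbalance` is an illustrative choice.

```python
from itertools import cycle, permutations


def counterbalance(participants, conditions):
    """Give each participant one of the possible condition orders in rotation."""
    orders = list(permutations(conditions))  # e.g. (A, B) and (B, A)
    return {person: order for person, order in zip(participants, cycle(orders))}


# Hypothetical participants and conditions.
participants = ["P1", "P2", "P3", "P4"]
schedule = counterbalance(participants, ("A", "B"))
for person, order in schedule.items():
    print(person, "->", " then ".join(order))
```

With two conditions, half the participants complete A first and half complete B first, so practice or fatigue effects are spread evenly across conditions. The participant list could be shuffled beforehand so that the order each person receives is also random.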

If we wish to compare two groups with respect to a given independent variable, it is essential to make sure that the two groups do not differ in any other important way.

Experimental Methods

All experimental methods involve an IV (independent variable) and a DV (dependent variable).

- Laboratory experiments are conducted under controlled conditions: the researcher decides where the experiment will take place, at what time, with which participants, and in what circumstances, using a standardized procedure.

- Field experiments are conducted in the everyday (natural) environment of the participants. The experimenter still manipulates the IV, but in a real-life setting. It may be possible to control extraneous variables, though such control is more difficult than in a lab experiment.

- Natural experiments investigate a naturally occurring IV that is not deliberately manipulated by the researcher; it exists anyway. Participants are not randomly allocated, and the natural event may only occur rarely.

Case studies are in-depth investigations of a person, group, event, or community. They use information from a range of sources, such as the person concerned and their family and friends.

Many techniques may be used such as interviews, psychological tests, observations and experiments. Case studies are generally longitudinal: in other words, they follow the individual or group over an extended period of time.

Case studies are widely used in psychology, and among the best known are those carried out by Sigmund Freud. He conducted very detailed investigations into the private lives of his patients in an attempt to both understand and help them overcome their illnesses.

Case studies provide rich qualitative data and have high levels of ecological validity. However, it is difficult to generalize from individual cases as each one has unique characteristics.

Correlational Studies

Correlation means association; it is a measure of the extent to which two variables are related. One of the variables can be regarded as the predictor variable with the other one as the outcome variable.

Correlational studies typically involve obtaining two different measures from a group of participants, and then assessing the degree of association between the measures.

The predictor variable can be seen as occurring before the outcome variable in some sense. It is called the predictor variable, because it forms the basis for predicting the value of the outcome variable.

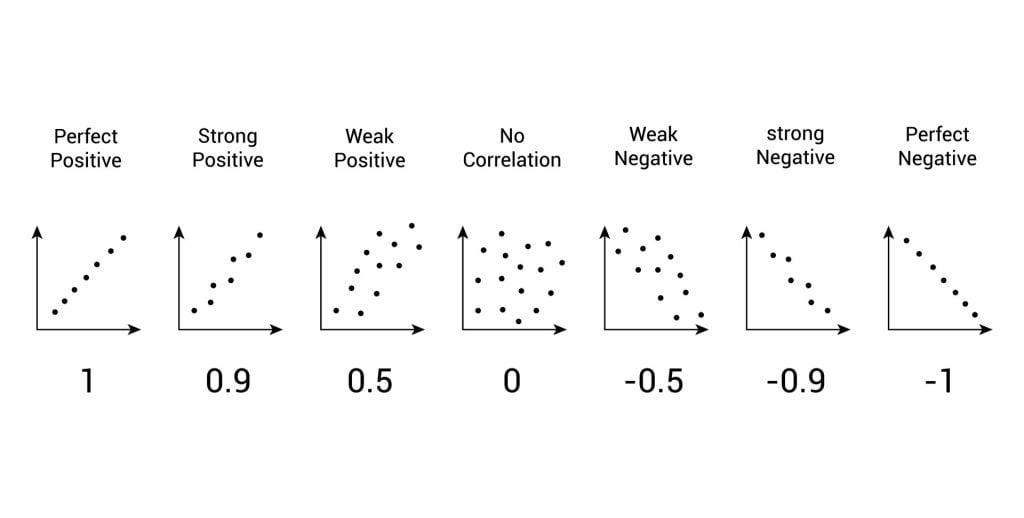

Relationships between variables can be displayed on a graph or as a numerical score called a correlation coefficient.

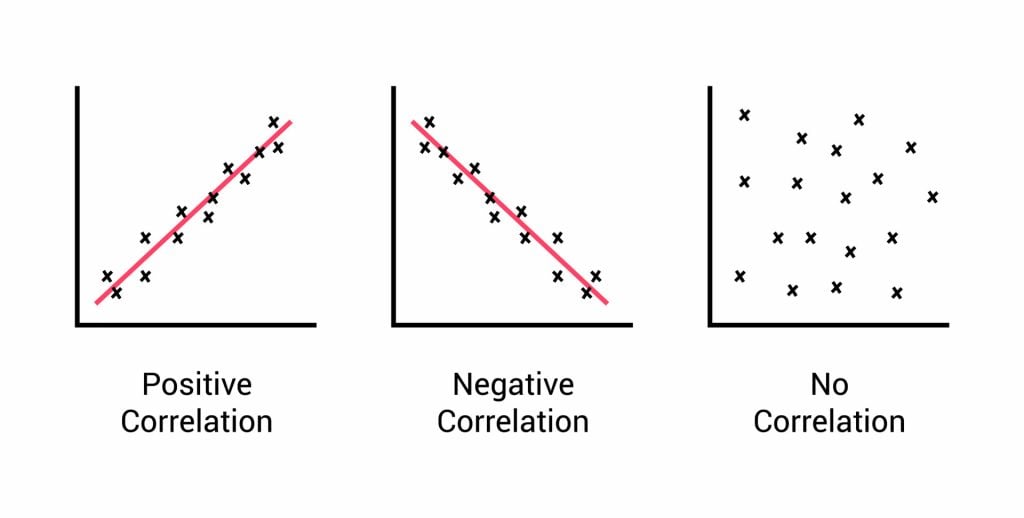

- If an increase in one variable tends to be associated with an increase in the other, then this is known as a positive correlation .

- If an increase in one variable tends to be associated with a decrease in the other, then this is known as a negative correlation .

- A zero correlation occurs when there is no relationship between variables.

After looking at the scattergraph, if we want to be sure that a significant relationship does exist between the two variables, a statistical test of correlation can be conducted, such as Spearman’s rho.

The test will give us a score, called a correlation coefficient. This is a value between -1 and +1: the sign shows the direction of the relationship, and the closer the value is to -1 or +1, the stronger the relationship between the variables. For example, 0.63 is a moderately strong positive correlation and -0.63 is an equally strong negative one.
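For readers who want to see how such a coefficient is obtained, the sketch below computes Spearman's rho by ranking both sets of scores and then correlating the ranks. It is a minimal illustration using only the Python standard library; the revision-hours and exam-score data are invented, and the helper names `ranks`, `pearson`, and `spearman_rho` are assumptions for this example.

```python
from math import sqrt
from statistics import mean


def ranks(values):
    """Rank values from 1 to n, giving tied values the average of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        average_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            result[order[k]] = average_rank
        i = j + 1
    return result


def pearson(x, y):
    """Pearson correlation between two equally long lists of numbers."""
    mx, my = mean(x), mean(y)
    covariance = sum((a - mx) * (b - my) for a, b in zip(x, y))
    spread_x = sqrt(sum((a - mx) ** 2 for a in x))
    spread_y = sqrt(sum((b - my) ** 2 for b in y))
    return covariance / (spread_x * spread_y)


def spearman_rho(x, y):
    """Spearman's rho is the Pearson correlation of the ranked scores."""
    return pearson(ranks(x), ranks(y))


# Hypothetical data: hours of revision and exam score for six students.
hours = [2, 4, 5, 7, 8, 10]
scores = [50, 55, 53, 70, 68, 80]
print(round(spearman_rho(hours, scores), 2))
```

Whether a given coefficient is statistically significant still depends on the sample size, which is why the value is usually compared against a critical value or converted to a p-value.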

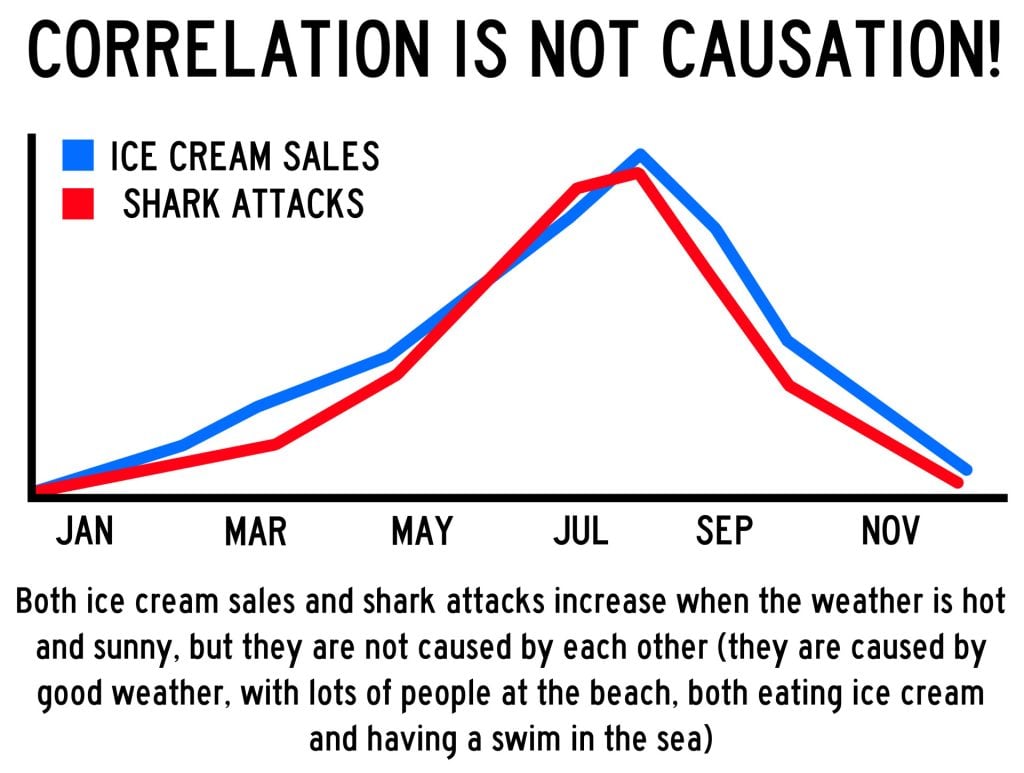

A correlation between variables, however, does not automatically mean that the change in one variable is the cause of the change in the values of the other variable. A correlation only shows if there is a relationship between variables.

Correlation does not prove causation, as a third variable may be responsible for the relationship.

Interview Methods

Interviews are commonly divided into two types: structured and unstructured.

In a structured interview, a fixed, predetermined set of questions is put to every participant in the same order and in the same way.

Responses are recorded on a questionnaire, and the researcher presets the order and wording of questions, and sometimes the range of alternative answers.

The interviewer stays within their role and maintains social distance from the interviewee.

In an unstructured interview, there are no set questions, and the participant can raise whatever topics they feel are relevant and discuss them in their own way. The interviewer's follow-up questions respond to the participant's answers.

Unstructured interviews are most useful in qualitative research to analyze attitudes and values.

Though they rarely provide a valid basis for generalization, their main advantage is that they enable the researcher to probe social actors’ subjective point of view.

Questionnaire Method

Questionnaires can be thought of as a kind of written interview. They can be carried out face to face, by telephone, or post.

The choice of questions is important because of the need to avoid bias or ambiguity in the questions, ‘leading’ the respondent or causing offense.

- Open questions are designed to encourage a full, meaningful answer using the subject’s own knowledge and feelings. They provide insights into feelings, opinions, and understanding. Example: “How do you feel about that situation?”

- Closed questions can be answered with a simple “yes” or “no” or specific information, limiting the depth of response. They are useful for gathering specific facts or confirming details. Example: “Do you feel anxious in crowds?”

The questionnaire's other practical advantages are that it is cheaper than face-to-face interviews and can be used to contact many respondents scattered over a wide area relatively quickly.

Observations

There are different types of observation methods :

- Covert observation is where the researcher doesn’t tell the participants they are being observed until after the study is complete. This method can raise ethical problems of deception and consent.

- Overt observation is where a researcher tells the participants they are being observed and what they are being observed for.

- Controlled : behavior is observed under controlled laboratory conditions (e.g., Bandura’s Bobo doll study).

- Natural : Here, spontaneous behavior is recorded in a natural setting.

- Participant : Here, the observer has direct contact with the group of people they are observing. The researcher becomes a member of the group they are researching.

- Non-participant (aka “fly on the wall”): The researcher does not have direct contact with the people being observed. The observation of participants’ behavior is from a distance.

Pilot Study

A pilot study is a small-scale preliminary study conducted in order to evaluate the feasibility of the key steps in a future, full-scale project.

A pilot study is an initial run-through of the procedures to be used in an investigation; it involves selecting a few people and trying out the study on them. It is possible to save time, and in some cases, money, by identifying any flaws in the procedures designed by the researcher.

A pilot study can help the researcher spot any ambiguities (i.e. unclear wording or instructions) or confusion in the information given to participants, or problems with the task devised.

Sometimes the task is too hard, and the researcher may get a floor effect, because none of the participants can score at all or can complete the task – all performances are low.

The opposite effect is a ceiling effect, when the task is so easy that all achieve virtually full marks or top performances and are “hitting the ceiling”.

Research Design

In cross-sectional research, a researcher compares multiple segments of the population at the same time.

Sometimes, we want to see how people change over time, as in studies of human development and lifespan. Longitudinal research is a research design in which data-gathering is administered repeatedly over an extended period of time.

In cohort studies, the participants must share a common factor or characteristic such as age, demographic, or occupation. A cohort study is a type of longitudinal study in which researchers monitor and observe a chosen population over an extended period.

Triangulation means using more than one research method to improve the study’s validity.

Reliability

Reliability is a measure of consistency: if a particular measurement is repeated and the same result is obtained, it is described as being reliable.

- Test-retest reliability : assessing the same person on two different occasions which shows the extent to which the test produces the same answers.

- Inter-observer reliability : the extent to which there is an agreement between two or more observers.
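Inter-observer reliability can be quantified in several ways. The sketch below shows two common options that are not named in the text above: simple percent agreement and Cohen's kappa, which corrects agreement for chance. The behaviour codes recorded by the two observers are invented for illustration.

```python
from collections import Counter


def percent_agreement(ratings_a, ratings_b):
    """Proportion of observations on which the two observers agree."""
    matches = sum(a == b for a, b in zip(ratings_a, ratings_b))
    return matches / len(ratings_a)


def cohens_kappa(ratings_a, ratings_b):
    """Agreement corrected for chance; undefined if chance agreement is 1."""
    n = len(ratings_a)
    observed = percent_agreement(ratings_a, ratings_b)
    counts_a, counts_b = Counter(ratings_a), Counter(ratings_b)
    # Chance agreement: probability both observers pick the same category.
    expected = sum(counts_a[c] * counts_b[c] for c in counts_a) / (n * n)
    return (observed - expected) / (1 - expected)


# Hypothetical behaviour codes from two observers watching the same children.
observer_1 = ["aggressive", "calm", "calm", "aggressive", "calm", "calm"]
observer_2 = ["aggressive", "calm", "aggressive", "aggressive", "calm", "calm"]

print(percent_agreement(observer_1, observer_2))
print(cohens_kappa(observer_1, observer_2))
```

Kappa is usually lower than raw agreement because some matches would be expected even if both observers were guessing.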

Meta-Analysis

Meta-analysis is a statistical procedure used to combine and synthesize findings from multiple independent studies to estimate the average effect size for a particular research question.

Meta-analysis goes beyond traditional narrative reviews by using statistical methods to integrate the results of several studies, leading to a more objective appraisal of the evidence.

This is done by looking through various databases, and then decisions are made about what studies are to be included/excluded.

- Strengths : Increases the conclusions’ validity as they’re based on a wider range.

- Weaknesses : Research designs in studies can vary, so they are not truly comparable.
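One simple way to estimate an average effect size is a fixed-effect, inverse-variance weighted mean, in which more precise studies count for more. The sketch below assumes this particular model (real meta-analyses often use random-effects models instead), and the effect sizes and variances are invented for illustration.

```python
from math import sqrt


def fixed_effect_mean(effects, variances):
    """Pool study effect sizes, weighting each by the inverse of its variance."""
    weights = [1 / v for v in variances]
    pooled = sum(w * e for w, e in zip(weights, effects)) / sum(weights)
    standard_error = sqrt(1 / sum(weights))
    return pooled, standard_error


# Hypothetical effect sizes (e.g. Cohen's d) and their variances from 4 studies.
effects = [0.30, 0.45, 0.10, 0.25]
variances = [0.02, 0.05, 0.01, 0.04]

pooled_effect, pooled_se = fixed_effect_mean(effects, variances)
print(f"pooled effect = {pooled_effect:.3f}, SE = {pooled_se:.3f}")
```

Because the weights depend on each study's variance, a large, precise study pulls the pooled estimate toward its own result more than a small, noisy one does.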

Peer Review

A researcher submits an article to a journal. The choice of the journal may be determined by the journal’s audience or prestige.

The journal selects two or more appropriate experts (psychologists working in a similar field) to peer review the article without payment. The peer reviewers assess: the methods and designs used, originality of the findings, the validity of the original research findings and its content, structure and language.

Feedback from the reviewers informs whether the article is accepted. The article may be accepted as it is, accepted with revisions, sent back to the author to revise and resubmit, or rejected without the possibility of resubmission.

The editor makes the final decision whether to accept or reject the research report based on the reviewers’ comments and recommendations.

Peer review is important because it helps prevent faulty data from entering the public domain, provides a way of checking the validity of findings and the quality of the methodology, and is used to assess the research rating of university departments.

Peer reviews may be an ideal, whereas in practice there are lots of problems. For example, it slows publication down and may prevent unusual, new work being published. Some reviewers might use it as an opportunity to prevent competing researchers from publishing work.

Some people doubt whether peer review can really prevent the publication of fraudulent research.

The advent of the internet means that more research and academic comment is being published without official peer review than before, though systems are evolving online where everyone has a chance to offer their opinions and police the quality of research.

Types of Data

- Quantitative data is numerical data e.g. reaction time or number of mistakes. It represents how much or how long, how many there are of something. A tally of behavioral categories and closed questions in a questionnaire collect quantitative data.

- Qualitative data is virtually any type of information that can be observed and recorded that is not numerical in nature and can be in the form of written or verbal communication. Open questions in questionnaires and accounts from observational studies collect qualitative data.

- Primary data is first-hand data collected for the purpose of the investigation.

- Secondary data is information that has been collected by someone other than the person who is conducting the research e.g. taken from journals, books or articles.

Validity means how well a piece of research actually measures what it sets out to, or how well it reflects the reality it claims to represent.

Validity is whether the observed effect is genuine and represents what is actually out there in the world.

- Concurrent validity is the extent to which a psychological measure relates to an existing similar measure and obtains close results. For example, a new intelligence test compared to an established test.

- Face validity is whether the test appears to measure what it is supposed to measure ‘on the face of it’. This is checked by ‘eyeballing’ the measure or by passing it to an expert.

- Ecological validity is the extent to which findings from a research study can be generalized to other settings / real life.

- Temporal validity is the extent to which findings from a research study can be generalized to other historical times.
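Concurrent validity is commonly checked by correlating scores on the new measure with scores from the established one. The sketch below illustrates that idea with a hand-written Pearson correlation; the scores and the names `new_test` and `established_test` are invented for illustration and do not come from any real study.

```python
from math import sqrt

def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length score lists."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = sqrt(sum((y - mean_y) ** 2 for y in ys))
    return covariance / (spread_x * spread_y)

# Hypothetical scores for the same six participants on both tests.
new_test = [98, 105, 110, 120, 87, 101]
established_test = [100, 103, 112, 118, 90, 99]

r = pearson_r(new_test, established_test)
print(f"Correlation between new and established test: r = {r:.2f}")
```

A coefficient close to +1 would suggest the new test ranks people much as the established test does, which is the "close results" that concurrent validity looks for.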

Features of Science

- Paradigm – A set of shared assumptions and agreed methods within a scientific discipline.

- Paradigm shift – The result of a scientific revolution: a significant change in the dominant unifying theory within a scientific discipline.

- Objectivity – When all sources of personal bias are minimised so as not to distort or influence the research process.

- Empirical method – Scientific approaches that are based on the gathering of evidence through direct observation and experience.

- Replicability – The extent to which scientific procedures and findings can be repeated by other researchers.

- Falsifiability – The principle that a theory cannot be considered scientific unless it admits the possibility of being proved untrue.

Statistical Testing

A significant result is one where there is a low probability that chance factors were responsible for any observed difference, correlation, or association in the variables tested.

If our test is significant, we can reject our null hypothesis and accept our alternative hypothesis.

If our test is not significant, we can accept our null hypothesis and reject our alternative hypothesis. A null hypothesis is a statement of no effect.

In Psychology, we use p < 0.05 (as it strikes a balance between making a type I and II error) but p < 0.01 is used in tests that could cause harm like introducing a new drug.

A type I error is when the null hypothesis is rejected when it should have been accepted (happens when a lenient significance level is used, an error of optimism).

A type II error is when the null hypothesis is accepted when it should have been rejected (happens when a stringent significance level is used, an error of pessimism).
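The trade-off between the two error types can be seen in a small simulation. This is a minimal sketch under stated assumptions rather than a standard procedure: it uses a two-sided z-test with a known standard deviation of 1, a sample size of 30, and an invented true effect of 0.4. All function names are hypothetical.

```python
import random
from statistics import NormalDist, mean

def p_value(sample):
    """Two-sided z-test of H0: population mean = 0, assuming a known SD of 1."""
    z = mean(sample) * len(sample) ** 0.5
    return 2 * (1 - NormalDist().cdf(abs(z)))

def simulate_p_values(true_mean, n=30, trials=2000, seed=1):
    """Run many simulated studies and return the p-value from each one."""
    rng = random.Random(seed)
    return [p_value([rng.gauss(true_mean, 1) for _ in range(n)])
            for _ in range(trials)]

def rejection_rate(p_values, alpha):
    """Proportion of studies in which the null hypothesis is rejected."""
    return sum(p < alpha for p in p_values) / len(p_values)

null_ps = simulate_p_values(true_mean=0.0)            # null hypothesis is true
effect_ps = simulate_p_values(true_mean=0.4, seed=2)  # a real effect exists

for alpha in (0.05, 0.01):
    type_1 = rejection_rate(null_ps, alpha)        # wrongly rejecting a true null
    type_2 = 1 - rejection_rate(effect_ps, alpha)  # wrongly keeping a false null
    print(f"alpha={alpha}: type I rate={type_1:.3f}, type II rate={type_2:.3f}")
```

Moving from the lenient 0.05 level to the stringent 0.01 level lowers the type I rate but raises the type II rate, which is the balance described above.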

Ethical Issues

- Informed consent is when participants are able to make an informed judgment about whether to take part. However, revealing the aims of the study may lead participants to guess what is being investigated and change their behavior.

- To deal with this, researchers can gain presumptive consent or ask participants to formally indicate their agreement to participate, but full disclosure may invalidate the purpose of the study, and it is not guaranteed that participants will understand what they are agreeing to.

- Deception should only be used when it is approved by an ethics committee, as it involves deliberately misleading or withholding information. Participants should be fully debriefed after the study but debriefing can’t turn the clock back.

- All participants should be informed at the beginning that they have the right to withdraw if they ever feel distressed or uncomfortable.

- The right to withdraw can cause bias, as those who stay may be the more obedient participants, and some may not withdraw because they have been given incentives or feel that they would be spoiling the study. Researchers can also offer the right to withdraw data after participation.

- Participants should all have protection from harm . The researcher should avoid risks greater than those experienced in everyday life and they should stop the study if any harm is suspected. However, the harm may not be apparent at the time of the study.

- Confidentiality concerns the communication of personal information. Researchers should not record any names but use numbers or false names instead, though this may not be enough, as it is sometimes possible to work out who the participants were.

Types of Experiment: Overview

Last updated 6 Sept 2022

Different types of methods are used in research, which loosely fall into one of two categories:

Experimental (laboratory, field and natural) and non-experimental (correlations, observations, interviews, questionnaires and case studies).

All three types of experiment have characteristics in common. They all have:

- an independent variable (I.V.) which is either manipulated or naturally occurring

- a dependent variable (D.V.) which is measured

- at least two conditions in which participants produce data.

Note – natural and quasi experiments are often used synonymously but are not strictly the same, as with quasi experiments participants cannot be randomly assigned, so rather than there being a condition there is a condition.

Laboratory Experiments

These are conducted under controlled conditions, in which the researcher deliberately changes something (I.V.) to see the effect of this on something else (D.V.).

Strengths

Control – lab experiments have a high degree of control over the environment & other extraneous variables, which means that the researcher can accurately assess the effects of the I.V., so they have higher internal validity.

Replicable – due to the researcher’s high levels of control, research procedures can be repeated so that the reliability of results can be checked.

Limitations

Lacks ecological validity – due to the involvement of the researcher in manipulating and controlling variables, findings cannot be easily generalised to other (real life) settings, resulting in poor external validity.

Field Experiments

These are carried out in a natural setting, in which the researcher manipulates something (I.V.) to see the effect of this on something else (D.V.).

Strengths

Validity – field experiments have some degree of control but are also conducted in a natural environment, so can be seen to have reasonable internal and external validity.

Limitations

Less control – field experiments have less control than lab experiments, so extraneous variables are more likely to distort findings and internal validity is likely to be lower.

Natural / Quasi Experiments

These are typically carried out in a natural setting, in which the researcher measures the effect of a naturally occurring variable (I.V.) on something else (D.V.). Note that in this case there is no deliberate manipulation of a variable; it is already changing naturally, which means the researcher is merely measuring the effect of something that is already happening.

High ecological validity – due to the lack of involvement of the researcher; variables are naturally occurring so findings can be easily generalised to other (real life) settings, resulting in high external validity.

Lack of control – natural experiments have no control over the environment & other extraneous variables, which means that the researcher cannot always accurately assess the effects of the I.V., so they have low internal validity.

Not replicable – due to the researcher’s lack of control, research procedures cannot be repeated, so the reliability of results cannot be checked.

7 Important Methods in Psychology With Examples

Psychology is the scientific study of the human mind, mental processes, and behavior. It is called a scientific study because psychologists, like other scientists, carry out systematic research and experiments to study and formulate psychological theories. Psychological research involves understanding complex mental processes and human behavior, and collecting different types of data (physiological, psychological, physical, and demographic). Psychologists use various research methods because it is difficult to obtain accurate and reliable results from a single method of data collection. The method they use depends upon the type of research. Broadly, research is divided into two types: experimental and non-experimental. Experimental research involves two or more variables and studies the effect of the independent variable on the dependent variable (a cause-effect relationship), whereas non-experimental research does not involve the manipulation of variables. The concept of variables is briefly explained further in this article. Let’s get familiar with some widely used methods of collecting psychology research data.

1. Experimental Method

To understand the experimental method, we first need to be familiar with the term ‘variable.’ A variable is an event or stimulus that varies and whose values can be measured. Note that an object itself is not a variable; rather, the attributes of that object are the variables. For example, a person is not a variable, but the height of the person is a variable because different people may have different heights.

In the experimental method of data collection, we are mainly concerned with two types of variables: independent variables and dependent variables. If the value of a variable is manipulated by the researcher to observe its effects, it is called the independent variable, and the variable that is affected by the change in the independent variable is called the dependent variable. For example, if we want to study the influence of alcohol on the reaction time and driving abilities of a driver, then the amount of alcohol that the driver consumes is the independent variable, and the driving performance of the driver is the dependent variable.

Experiments are conducted to establish the relationship between the independent variable (cause) and the dependent variable (effect). They are conducted very carefully, and any variables other than the independent variable are kept constant or negligible so that an accurate relationship between cause and effect can be established. In the above example, other factors like the driver’s stress, anxiety, or mood (extraneous variables) can interfere with the dependent variable (driving ability). Extraneous variables are undesired variables that are not being studied in the experiment but whose variation can alter the results. They are difficult to avoid entirely, but we should always try to keep them constant or negligible for accurate results.
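The alcohol example can be sketched as a small simulation. This is an illustration under invented assumptions, not real data: the baseline reaction time of 300 ms, the 80 ms slowdown, and the names `run_experiment`, `no_alcohol` and `alcohol` are all hypothetical. Random allocation is used here as one common way of spreading extraneous variables evenly across conditions.

```python
import random
from statistics import mean

def run_experiment(n_participants=40, seed=0):
    """Randomly allocate participants to two conditions and simulate the D.V."""
    rng = random.Random(seed)
    participants = list(range(n_participants))
    rng.shuffle(participants)  # random allocation to conditions
    half = n_participants // 2
    groups = {
        "no_alcohol": participants[:half],  # I.V. level 1
        "alcohol": participants[half:],     # I.V. level 2
    }
    # Hypothetical model: reaction time in ms, plus an invented 80 ms
    # slowdown in the alcohol condition. Individual differences are noise.
    reaction_times = {}
    for condition, members in groups.items():
        slowdown = 80 if condition == "alcohol" else 0
        reaction_times[condition] = [rng.gauss(300, 30) + slowdown
                                     for _ in members]
    return groups, reaction_times

groups, reaction_times = run_experiment()
for condition, times in reaction_times.items():
    print(f"{condition}: mean reaction time = {mean(times):.0f} ms")
```

Because the only systematic difference between the two groups is the independent variable, a difference in mean reaction time can be attributed to it with more confidence.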

Control Group and Experimental Group